Advanced Reasoning with Large Language Models with Chain of Thought Prompting | Paper explained!


- Published 14 Jan 2023

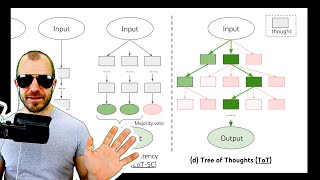

- Paper link: arxiv.org/abs/2201.11903

Abstract: We explore how generating a chain of thought -- a series of intermediate reasoning steps -- significantly improves the ability of large language models to perform complex reasoning. In particular, we show how such reasoning abilities emerge naturally in sufficiently large language models via a simple method called chain of thought prompting, where a few chain of thought demonstrations are provided as exemplars in prompting. Experiments on three large language models show that chain of thought prompting improves performance on a range of arithmetic, commonsense, and symbolic reasoning tasks. The empirical gains can be striking. For instance, prompting a 540B-parameter language model with just eight chain of thought exemplars achieves state of the art accuracy on the GSM8K benchmark of math word problems, surpassing even finetuned GPT-3 with a verifier.
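The abstract describes chain of thought prompting only in words. Here is a minimal sketch of how such a few-shot prompt could be assembled. It assumes a plain "Q:/A:" text format and a closing "The answer is X." sentence that makes the final answer easy to extract. `Exemplar`, `build_prompt`, and `extract_answer` are hypothetical names, not code from the paper. The single exemplar is only illustrative, since the paper uses eight exemplars for GSM8K. No model is called and nothing is fine-tuned: only the prompt text changes, and the resulting string would be sent to whatever LLM you use.

```python
import re
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Exemplar:
    """One few-shot demonstration (hypothetical structure for illustration)."""
    question: str
    reasoning: str  # intermediate reasoning steps, i.e. the "chain of thought"
    answer: str


# Illustrative exemplar; a real prompt would include several of these.
EXEMPLARS: List[Exemplar] = [
    Exemplar(
        question=("Roger has 5 tennis balls. He buys 2 more cans of tennis balls. "
                  "Each can has 3 tennis balls. How many tennis balls does he have now?"),
        reasoning=("Roger started with 5 balls. 2 cans of 3 tennis balls each is "
                   "6 tennis balls. 5 + 6 = 11."),
        answer="11",
    ),
]


def build_prompt(exemplars: List[Exemplar], question: str,
                 chain_of_thought: bool = True) -> str:
    """Concatenate Q/A exemplars, then append the new question with an open 'A:'.

    With chain_of_thought=False the exemplars show only the final answer
    (standard few-shot prompting), which is the baseline CoT is compared against.
    """
    blocks = []
    for ex in exemplars:
        if chain_of_thought:
            target = f"{ex.reasoning} The answer is {ex.answer}."
        else:
            target = f"The answer is {ex.answer}."
        blocks.append(f"Q: {ex.question}\nA: {target}")
    blocks.append(f"Q: {question}\nA:")
    return "\n\n".join(blocks)


def extract_answer(completion: str) -> Optional[str]:
    """Return the number after the last 'The answer is', with commas removed."""
    matches = re.findall(r"The answer is\s+(-?[\d,]*\.?\d+)", completion)
    return matches[-1].replace(",", "") if matches else None
```

For example, if a model given `build_prompt(EXEMPLARS, "The cafeteria had 23 apples. ...")` produced the hypothetical completion "They used 20 apples, so 23 - 20 = 3. They bought 6 more, so 3 + 6 = 9. The answer is 9.", then `extract_answer` would return `"9"`. Ending every exemplar with the same answer sentence is one simple way to keep the reasoning free-form while still making the final answer easy to parse.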

#artificialintelligence #nlproc #nlp #deeplearning #ml - Science & Technology

She's a robot, right? She's good. It was her occasionally unnatural inflection that tipped me off.

Yes indeed! I'm using www.synthesia.io/

It wasn't the inflections that got me; it was the unnatural movements.

That uncanny valley consistency. So weird.

Great videos! I have a question about the data: did they actually add chains of thought to all of the training data, or only to some of it?

YouTube will add subtitles if we need them. There's no need to add them to the videos; they are distracting.

CoT prompting seems to be a logical solution to getting the LLM to do what you want it to do.

What are the limitations of CoT prompting?

Thanks for the comment! The limitations might be that for some tasks CoT might potentially be overcomplicating / diluting the target problem. Also, CoT increases the input/output sequence lengths, leading to slower inference / greater cost. But it's definitely a great technique to test for your problem!

Is the host also computer-generated using AI?! 🤔

What no human?

"B" should be read as "billion", not as the letter "B".

Thanks for the comment! Yeah, that's because the video is actually created with AI! Check out: www.synthesia.io/?via=nlplab

Bro, go read the actual paper, it's only 43pgs. Whoops, I meant pages! 😬

Nothing new. She didn't even bother to run a test herself, lol. Waste of time.