Chain-of-Thought Prompting Elicits Reasoning in LLMs


- Published Jun 10, 2024

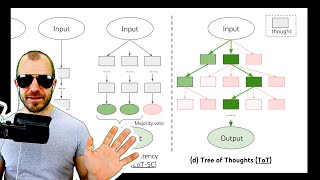

- Chain of thought (CoT) is a series of intermediate natural-language reasoning steps that lead to the final output. It has become a very common prompt-engineering technique. In this video, I talk about the experiments with CoT prompting. The paper shows that CoT prompting improves performance by a large margin on arithmetic, commonsense, and symbolic reasoning. CoT is robust to different annotators, exemplars, and language models, and it facilitates out-of-domain generalization to longer sequence lengths. No language models were finetuned: CoT prompts were used only at inference time. CoT helps most when three conditions are met: (1) the task is challenging and requires multi-step reasoning, (2) a large language model is used, and (3) the scaling curve of standard prompting is relatively flat.
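Because CoT is applied only at inference time, the whole technique lives in how the few-shot prompt is built: each exemplar shows a question, the intermediate reasoning, and then the answer. The sketch below is a minimal illustration, not the paper's code. It assumes a `Q:`/`A:` layout and a closing "The answer is X." phrase; the helper names (`build_cot_prompt`, `build_standard_prompt`, `extract_answer`) are hypothetical, the tennis-ball exemplar follows the style of the paper's arithmetic prompts, and the model call itself is left out. The completion passed to `extract_answer` is hard-coded for illustration, not real model output.

```python
import re
from dataclasses import dataclass


@dataclass
class Exemplar:
    question: str
    reasoning: str  # intermediate natural-language steps (the "chain of thought")
    answer: str


# One few-shot exemplar in the style of the paper's arithmetic prompts.
EXEMPLARS = [
    Exemplar(
        question=("Roger has 5 tennis balls. He buys 2 more cans of tennis balls. "
                  "Each can has 3 tennis balls. How many tennis balls does he have now?"),
        reasoning=("Roger started with 5 balls. 2 cans of 3 tennis balls each is "
                   "6 tennis balls. 5 + 6 = 11."),
        answer="11",
    ),
]


def build_cot_prompt(exemplars, question):
    """Few-shot CoT prompt: each exemplar shows its reasoning before the answer."""
    parts = [f"Q: {ex.question}\nA: {ex.reasoning} The answer is {ex.answer}."
             for ex in exemplars]
    parts.append(f"Q: {question}\nA:")  # the model continues from here
    return "\n\n".join(parts)


def build_standard_prompt(exemplars, question):
    """Standard few-shot baseline: same exemplars, answers only, no reasoning."""
    parts = [f"Q: {ex.question}\nA: The answer is {ex.answer}." for ex in exemplars]
    parts.append(f"Q: {question}\nA:")
    return "\n\n".join(parts)


# Pull the final number out of a completion that ends with "The answer is X."
ANSWER_RE = re.compile(r"The answer is\s+(-?\d[\d,]*(?:\.\d+)?)")


def extract_answer(completion):
    match = ANSWER_RE.search(completion)
    return match.group(1).replace(",", "") if match else None


if __name__ == "__main__":
    question = ("The cafeteria had 23 apples. If they used 20 to make lunch and "
                "bought 6 more, how many apples do they have?")
    print(build_cot_prompt(EXEMPLARS, question))
    # Hard-coded illustrative completion; in practice this comes from the LM.
    completion = ("The cafeteria had 23 apples. They used 20, so 23 - 20 = 3. "
                  "They bought 6 more, so 3 + 6 = 9. The answer is 9.")
    print(extract_answer(completion))
```

The only difference between the two prompt builders is whether the reasoning string appears in the exemplars, which mirrors the comparison between standard and CoT prompting: the model, its weights, and the test question stay the same.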

The video gives a brief overview of these findings.

Here is the agenda:

00:00:00 What is chain of thought prompting?

00:02:15 What are the advantages of chain of thought prompting?

00:04:59 How does CoT work for Arithmetic Reasoning tasks?

00:12:46 How does CoT work for Commonsense Reasoning?

00:14:38 How does CoT work for Symbolic Reasoning?

00:17:35 Errors in the chain of thought

For more details, please see:

Wei, Jason, Xuezhi Wang, Dale Schuurmans, Maarten Bosma, Ed Chi, Quoc Le, and Denny Zhou. "Chain-of-Thought Prompting Elicits Reasoning in Large Language Models." arXiv preprint arXiv:2201.11903 (2022).
