Chain of Thought Prompting | Self Consistency Prompting Explained | Prompt Engineering 101

- Published 23 Nov 2023

👉 Large Language Models are a very powerful tool. To elicit the desired information from LLMs, effective prompts are a must, and writing them is what Prompt Engineering is about.

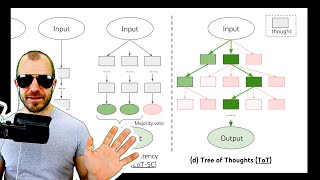

👉Two intermediate prompt engineering techniques, Chain of Thought Prompting and Self Consistency Prompting, will improve your prompting skills.

👉Chain of Thought Prompting: Chain of Thought prompting is about guiding the LLM to think step by step!

👉Self Consistency Prompting: Self-consistency aims to simulate how humans explore different reasoning paths before reaching a decision. The model is asked the same question several times, and the most consistent answer is kept.
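As a rough illustration of the Chain of Thought idea above, here is a minimal Python sketch that wraps a question with a step-by-step cue. The function name `build_cot_prompt`, the `Q:`/`A:` layout, and the exact cue wording are assumptions made for this example; they are not taken from the video.

```python
def build_cot_prompt(question: str) -> str:
    """Wrap a question with a step-by-step cue (hypothetical template)."""
    # The cue nudges the model to write out its reasoning before the answer.
    return f"Q: {question.strip()}\nA: Let's think step by step."


if __name__ == "__main__":
    # Example question invented for this sketch.
    print(build_cot_prompt("Pens cost $2 for 3. How much do 12 pens cost?"))
```

The resulting string is what you would send to the LLM of your choice; the sketch deliberately leaves the model call out.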

More prompting videos, please. Love your explanation.

Very well explained!! Thank you!

Glad you liked it 👍

Thank you for sharing. What types of prompting techniques would you recommend to incorporate empathy and increase an LLM's understanding of nuance?

Adding memory (via RAG) is a great way to make LLM responses nuanced.

lol.. up the prompting game.

Arithmetic, logical reasoning: chain of thought, step by step.

The reasoning appears in the answers, not in the prompt. Where is this information added to the model at run time?

Can you please mention the time stamp which you are referring to for this query?

Self-Consistency Prompting is really just a poor man's version of Stacked Ensemble with Diverse Reasoning... why not call it Ensemble Prompting, or extend the concept to Sparse Stacked Ensemble Prompting?

Dear Sir, thank you for your generous explanation. I understand that I can use "step-by-step" to apply CoT. But is there any keyword I can use to apply the "self-consistency" method in combination with CoT?

Self-consistency prompting is a technique used to improve the performance of CoT prompting on tasks involving arithmetic and common sense reasoning [18:22]. It works by calling the language model multiple times on the same prompt and taking the answer that appears most often across the runs as the final answer. This essentially simulates how humans explore different reasoning paths before arriving at a well-informed decision.

So yes, you can use self-consistency prompting in combination with CoT prompting by calling the language model multiple times on the same prompt and taking the most consistent answer as the final output.
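The procedure described in this reply can be sketched in Python as a simple majority vote. This is only an illustration under stated assumptions: `ask_model` stands for whatever function calls your LLM, the `Answer: <value>` line format is a convention invented for this example, and `make_fake_model` is a stand-in that replays canned completions so the sketch runs without any API.

```python
import re
from collections import Counter
from typing import Callable, List, Optional


def extract_answer(completion: str) -> Optional[str]:
    """Return the text after 'Answer:' (assumed output format), or None."""
    match = re.search(r"Answer:\s*(.+)", completion)
    return match.group(1).strip() if match else None


def self_consistent_answer(
    ask_model: Callable[[str], str], prompt: str, n_samples: int = 5
) -> Optional[str]:
    """Ask the same prompt n_samples times and keep the most frequent answer."""
    answers: List[str] = []
    for _ in range(n_samples):
        answer = extract_answer(ask_model(prompt))
        if answer is not None:
            answers.append(answer)
    if not answers:
        return None
    # On a tie, Counter keeps the answer that was seen first.
    return Counter(answers).most_common(1)[0][0]


def make_fake_model(completions: List[str]) -> Callable[[str], str]:
    """Hypothetical stand-in for an LLM: replays canned completions in order."""
    replies = iter(completions)
    return lambda prompt: next(replies)


if __name__ == "__main__":
    fake = make_fake_model([
        "12 / 3 = 4 groups, 4 * 2 = 8. Answer: 8",
        "12 * 2 = 24. Answer: 24",
        "Four groups of 3 pens at $2 each. Answer: 8",
        "12 / 2 = 6. Answer: 6",
        "3 pens cost $2, so 12 pens cost 4 * 2. Answer: 8",
    ])
    cot_prompt = "Q: Pens cost $2 for 3. How much do 12 pens cost?\nA: Let's think step by step."
    print(self_consistent_answer(fake, cot_prompt))
```

With the canned completions above, the vote is three to one to one in favour of 8. With a real model, the repeated calls only differ if sampling is enabled (for example, a non-zero temperature), so there is no single keyword for self-consistency: it is a loop around the CoT prompt rather than part of the prompt itself.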

@Analyticsvidhya Thank you, teacher. I'm clear now.