[ML News] Chips, Robots, and Models

- Published 14 May 2024

- OUTLINE:

0:00 - Intro

0:19 - Our next-generation Meta Training and Inference Accelerator

01:39 - ALOHA Unleashed

03:10 - Apple Inks $50M Deal with Shutterstock for AI Training Data

04:28 - OpenAI Researchers, Including Ally of Sutskever, Fired for Alleged Leaking

05:01 - Adobe's Ethical Firefly AI was Trained on Midjourney Images

05:52 - Trudeau announces $2.4 billion for AI-related investments

06:48 - RecurrentGemma: Moving Past Transformers for Efficient Open Language Models

07:15 - CodeGemma - an official Google release for code LLMs

07:24 - Mistral AI: Cheaper, Better, Faster, Stronger

08:08 - Vezora/Mistral-22B-v0.1

09:00 - WizardLM-2, next generation state-of-the-art LLM

09:31 - Idefics2, the strongest Vision-Language-Model (VLM) below 10B!

10:14 - BlinkDL/rwkv-6-world

10:50 - Pile-T5: Trained T5 on the Pile

11:35 - Model Card for Zephyr 141B-A39B

12:42 - Parler TTS

13:11 - RHO-1: Not all tokens are what you need

14:59 - Ferret-UI: Grounded Mobile UI Understanding with Multimodal LLMs

If you want to support me, the best thing to do is to share out the content :)

wow you are really productive on YouTube lately, good for us

Couldn't agree more. I find that my appetite for machine learning research is a strictly monotonically increasing function of Kilcher's productivity on YouTube. As a postdoc, that's quite significant lol

@EdFormer I find Kilcher's videos to be the path of least resistance for me to keep up with ML research. As a working person trying to get into a PhD programme, that's extremely valuable :D

@lone0017 you're getting the right skeptical and evidence-based perspective for your goals while being brought up to date too, which most other channels in this day and age really don't provide. Best of luck with your applications!

Don't jinx it

1:58 No, Yannic, the difference between high cost robots and low cost robots is their price.

Here are the missing chapter timings!

15:42 Samba

17:06 Vasa-1

17:55 Pegasus-1

18:17 Reka

19:58 AI safety introduction v0.5

20:20 Longer context benchmark x post

21:17 New Audio Dialogue Dataset

22:33 OSWorld: Agents Environment

24:39 OPENEQA AR QA Dataset

26:41 Best Practices and Lessons Learned on Synthetic Data for Language Models

27:07 Ruler: Real Context size paper

29:57 Aman's post: SWE-bench contaminated supposition

31:28 RealWorldQA Dataset

32:34 Repositories

36:13 X Posts

38:09 torchtune: fine tuning library from Pytorch

Thx

❤

A scientific channel, looking at papers. Yessss sir

"YouTube, Audio, No"

The content I'm here for.

Holy cow, you're turning out the videos. Thank you so much for sharing your expertise

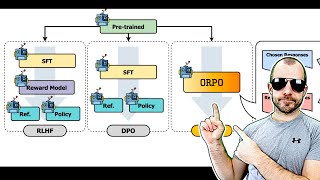

I would be really interested in a video about the different cutting-edge fine tuning methods and how they differ.

Thank you Mr Yannic for giving us the latest updates in this edition of Machine Learning news.

Keep it up! Forgot about this series but like it!

Best AI YouTube channel!

Another certified Tuesday banger 😎

Thx yan!

I would love to have your point of view on what Extropic is building.

Very informative video

An excellent video. I wonder if the RHO concept is more profound than you give it credit for. It seems inherently better if you could filter out bad tokens and identify the most meaningful ones, without necessarily needing synthetic data. Token quality is more important than token quantity for humans, so why shouldn't it be the same for LLMs?

You are fun Yannic 😎

I am looking for a paper review video about Rho-1!

25:20 😂😂said so casually!

39:00 Well you see... the difference between high cost robots and low cost robots is the cost.

@0:33 Int operations are ops, not flops.

very good video

gpt2-chatbot could be OpenAI's GPT-5. I guess it automatically pre-processes input text as a low-color-depth image (containing the text), so any text prompt is fed into the model as text + image. The ASCII-art performance is a tell of something like that.

Yes!

8:10 the 22b model is nonsense, it performs worse than a 3b model. makes sense since averaging the experts just gets you a randomly initialized FFN with some extreme outlier weights

Since I began following this channel, my AI comprehension has skyrocketed.

Any chance you can release these as podcasts again? I have to rip the YouTube videos and convert them to mp3 every time to get them on my music player.

Who owns a music player in 2024? Not trying to be a dick. But can’t you use your phone?

The robot arm used by Aloha is the TrossenRobotics ViperX 300. Costs $6129.95

I just wish there were better options for DIY local/edge inference. Basically nothing since the Google Coral Edge TPU 5+ years ago.

OpenAI signed a deal with Microsoft, I think in 2018; it took them really long to create their own chip

So, the typical UI is optimized for non-technical human users for accessibility, since they are the expected end-users. We have UIs for technical people with a mix of performance and usability.

What would a UI designed for models/machines and optimized for performance look like? How much more performant could a model trained on it become? It's a bit of a loop, thinking about a UI optimized for a model and a model optimized for a UI. In the end, the model could become a more powerful UI, getting closer both to the user, through natural language and conversation, and to the machine doing the actual work

Basically another layer of high-low language

16:04

gpt2-chatbot is a top-performing new model that is not (yet) public. A recent (30.04) X post from Sam Altman hints at exactly this. It is currently being tested in the Hugging Face Chatbot Arena.

It appears to be a GPT-2 model trained using OpenAI's industry-leading training methods.

RHO-1 seems like a perfect application for mobile ai's

good show today.

The last fabric manipulation demo I saw maybe last year looked pretty similar... but the video was sped up 64x. The Google demo was actual speed, tremendously better.

In most cases we don't know what the training data for LLMs is. How can we be sure those benchmarks were not part of their training data?

You can’t, that’s why you need to come up with novel evals of your own creation, which you never publish anywhere.

Teaching to the test we used to call it in K12 education.

Mmmm, chips. Oh, those kind of chips. Can't eat those.😞

TLC will find someone to challenge that notion.

Did you hear that George Hotz hacked the RTX 4090 driver to enable P2P? I'm pretty interested in the implications this has for running local LLMs, really fascinating stuff. It was on Hacker News' front page a few weeks ago!

GPT-2 solves the Aunt Agatha riddle correctly. Even Claude Opus fails to do that.

Using well-known riddles and puzzles is a really terrible test of intelligence. The model could easily just be recalling the solution from its training data.

vroom vroom vroom gpu vroom vroom vroom

1. Low cost robots: Wake me up when they become good enough at sorting garbage to make plastic recycling profitable.

Could someone with money to burn on datacenter waste heat please test whether it would work to have a model that is trained to rewrite a certain fraction of its own weights at inference time (half, a third, 10 percent, etc.)? Sort of like if, instead of predicting tokens directly, it first predicts some of its own weights based on the inputs. It could also be worth comparing whether it's better to keep just certain layers unfrozen, keep just certain neurons unfrozen, let it decide what to freeze at training time, or not freeze anything specific and just let it "predict" not only the weight values but also which neurons to change. Or some variation of this idea that makes a little more sense, if what I described has details that don't really match how the pieces fit together.

just tried idefics, kinda sucks on zero-shot tasks to be honest...

Oh, and talking about ideas I don't have the money to test (see my other comment here for another crazy idea): could someone please try to train a model with training-time random ablation of both parameter count and quantization level, all in the same training run, so that the final model is optimized from the get-go to work at whatever level of parameter count and quantization its derived versions end up having? Essentially, training not only for the outputs but also for sorting its own parameters and bits by how important they are.

"Has anyone heard of Tensorflow lately?" Google engineers who are still using TF: crying behind the scenes.

5:59 $2.4 billion for some AI program, $100 million for housing problems. Guess what the priorities of the government are

Can you please make a video on AI Report 2024

and so on and what not

Canada investing in EhI

Ilya used to make cool tutorials, hope he hasn't been Jack Ma-ed :D

just casually calling out tensorflow lol

I like therefore I am…

I don't get Shutterstock. Aren't they digging their own grave? Selling a photo for 1€ so that nobody ever has to buy it from them again? That doesn't make sense to me.

I think their logic is like: if someone is gonna dig my grave, I might as well be paid for it

@AvastarBin with $875 million in revenue, one would assume they would focus on building that thing themselves. Their financial target for 2027 is $1.2 billion according to the Q4/2023 earnings call. The first thing I would do is find my biggest competitor, suggest a merger, and bet everything on being the ones with the largest high-quality corpus, suing anyone out there who uses their pictures for training.

How many Google robots does it take to hang a shirt...

The aloha project is pretty neat. If I had an extra $20k laying around I'd build my own and tinker too. It's all open source

Dear Yannic, with all due respect: This green screen causes eye cancer. Please just put _ANYTHING_ there :) Thanks!

Some of the news seems old

I think the whole "Ally of Sutskever" is thrown in the title 'cause our brains autocorrect it to "Ilya Sutskever". Stupid clickbaiters

I think bro misunderstands the concept of green screen 😂😂😂😎🟩