- 455

- 126 434

Brunei Math Club

Japan

Приєднався 25 гру 2020

I'm Dr. Akira. In this channel, I mainly explain introductory university-level maths, including univariate and multivariate calculus, (some) linear algebra, probability, statistics, stochastic processes, and some abstract algebra.

I am NOT a mathematician, but a mathematical/computational biologist. I have an M.Sc. in Chemistry and a Ph.D. in Genetics. Although I'm not a mathematician, I enjoy studying and teaching math. (I no longer teach in Brunei.)

このチャンネルは合同会社アニマ・マキナ(所在地:大阪市)によって運営されています。

This channel is managed by Anima Machina G.K. (Osaka, Japan).

I am NOT a mathematician, but a mathematical/computational biologist. I have an M.Sc. in Chemistry and a Ph.D. in Genetics. Although I'm not a mathematician, I enjoy studying and teaching math. (I no longer teach in Brunei.)

このチャンネルは合同会社アニマ・マキナ(所在地:大阪市)によって運営されています。

This channel is managed by Anima Machina G.K. (Osaka, Japan).

The sum of independent Gaussian random vectors is Gaussian.

We show that the sum of independent Gaussian random vectors is again a Gaussian vector. The proof is similar to the univariate Gaussian case but a bit more tedious due to the handling of vectors and matrices.

Subscribe:

www.youtube.com/@BruneiMathClub?sub_confirmation=1

Twitter:

BruneiMath

Subscribe:

www.youtube.com/@BruneiMathClub?sub_confirmation=1

Twitter:

BruneiMath

Переглядів: 125

Відео

Linear transformation of a Gaussian vector is Gaussian

Переглядів 1728 місяців тому

We show that the linear transformation of a Gaussian random vector is again a Gaussian random vector. Subscribe: www.youtube.com/@BruneiMathClub?sub_confirmation=1 Twitter: BruneiMath

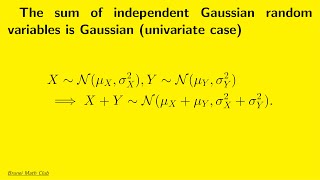

The sum of Gaussian random variables is Gaussian (univariate case)

Переглядів 1528 місяців тому

We prove that the sum of Gaussian random variables is Gaussian for the univariate case. (The multivariate case will be presented in another video.) Subscribe: www.youtube.com/@BruneiMathClub?sub_confirmation=1 Twitter: BruneiMath

Kernel method and classification problem

Переглядів 1029 місяців тому

We study how the kernel method can be applied to nonlinear classification problems. Subscribe: www.youtube.com/@BruneiMathClub?sub_confirmation=1 Twitter: BruneiMath

Proving a Representer Theorem

Переглядів 2869 місяців тому

Herein we prove a Representer Theorem, which gives a justification for applying the kernel method to regression problems in general. Subscribe: www.youtube.com/@BruneiMathClub?sub_confirmation=1 Twitter: BruneiMath

Applying the kernel method to polynomial regression

Переглядів 1099 місяців тому

By appropriately choosing a kernel function, we can readily solve the polynomial regression problem. Subscribe: www.youtube.com/@BruneiMathClub?sub_confirmation=1 Twitter: BruneiMath

Kernel method for regression: The basic idea

Переглядів 1569 місяців тому

By choosing some appropriate kernel function and the usual linear regression technique, we can solve nonlinear regression problems. The resulting approximating function is represented as a linear combination of reproducing kernels. Subscribe: www.youtube.com/@BruneiMathClub?sub_confirmation=1 Twitter: BruneiMath

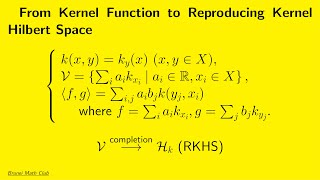

From kernel function to reproducing kernel Hilbert space

Переглядів 2159 місяців тому

Given a kernel function k(x,y), we can construct a unique reproducing kernel Hilbert space (the Moore-Aronszajn theorem). To do this, we first regard the bivariate kernel function as a parameterized univariate function k_{x}(y) = k(y,x), then consider a vector space of function spanned by those parametrized univariate (kernel) functions. We can introduce an inner product in this space, which in...

Examples of kernel functions

Переглядів 2649 місяців тому

The function k: X×X → R(real numbers) is called a kernel function (on X) if it is symmetric and positive semi-definite. In this video, we show some examples of kernel functions and how to construct a kernel function from other functions. Subscribe: www.youtube.com/@BruneiMathClub?sub_confirmation=1 Twitter: BruneiMath

Riesz representation theorem

Переглядів 1869 місяців тому

Given a Hilbert space H and an element x0 in H, we can define a map φ_{x0}(x) on H in terms of the inner product between x and x0. This map is linear and bounded (in some sense). The Riesz Representation Theorem claims that the converse is true. That is, any linear map (functional) in H can be uniquely represented as an inner product. As a consequence, function application (such as f(x)) can be...

Cauchy's integral formula and L^2 inner product

Переглядів 11110 місяців тому

Cauchy's integral formula and L^2 inner product

Orthonormal basis in a function space.

Переглядів 17110 місяців тому

Orthonormal basis in a function space.

A continuous function on a closed interval is Riemann-integrable

Переглядів 189Рік тому

A continuous function on a closed interval is Riemann-integrable

A continuous function on a closed interval is uniformly continuous

Переглядів 384Рік тому

A continuous function on a closed interval is uniformly continuous

Marginal distributions of the multivariate Normal distribution are normal.

Переглядів 637Рік тому

Marginal distributions of the multivariate Normal distribution are normal.

Deriving the multivariate normal distribution from the maximum entropy principle

Переглядів 610Рік тому

Deriving the multivariate normal distribution from the maximum entropy principle

Maximum entropy and the normal distribution

Переглядів 911Рік тому

Maximum entropy and the normal distribution

Why is the gamma matrix invertible?

Good question! Short answer: That's an assumption. Specifically, we are (implicitly) assuming that there are n degrees of freedom for the n-variate normal distribution. If that's not the case (i.e., there are only m (< n) degrees of freedom), the gamma matrix is NOT invertible. However, we can change the variables by some linear transformation to do the same with m-variate normal distribution.

@@BruneiMathClub Thanks for your reply. I understand the assumption that there are n degrees of freedom for the n-variate normal distribution. However, it seems that the assertion of the gamma matrix being invertible is made before establishing any connection between the gamma matrix and the degrees of freedom. In other words, the gamma matrix appears to have no direct relationship with the degrees of freedom of the n-variate normal distribution when you complete the square using the inverse of the gamma matrix. I think perhaps we can prove the invertibility of the gamma matrix by showing that it has only positive eigenvalues. If any eigenvalue were zero or negative, wouldn't the integral of p(x) along the corresponding eigenvector become infinite?

I see. You are saying that although I said the assumption was that there were n degrees of freedom, that doesn't immediately imply the Gamma matrix is invertible. You are right! How about this: First, we assume gamma's invertibility without connecting it to the degrees of freedom. Then, the gamma matrix turns out to be the inverse of the covariance matrix. Thus, the gamma has an inverse if and only if the covariance matrix is full rank (i.e., has n degrees of freedom) and, equivalently, all its eigenvalues are positive. By the way, the eigenvalues of a covariance matrix cannot be negative. When some eigenvalues are 0, we can eliminate redundant random variables to make the covariance matrix full rank (with fewer degrees of freedom) and repeat the same argument. I don't think we can prove the invertibility of the gamma matrix as their elements are just Lagrange multipliers (no further assumptions). But I may be wrong. If you find out, please post a video and let me know!

14:11 Correction: ||f|| and ||g|| should be ||f||^2 and ||g||^2. Thanks to @BJ_HACK-mv9yg for pointing this out.

13:59 , you used norm form and not the norm squared form cause the norm of f and g in L2 has a square-root

You are right! The last norms should be squared. Thanks for the correction.

thanks a bunch

You are welcome!

Thanks 4 the explanation

You're welcome!

Voice??

Voice what??

@BruneiMathClub its low..

I see. Sorry, but I can't fix it in that video. I'll be careful in the coming ones. Thanks for letting me know.

First

X is a vector, right? You're using X to refer to the vector and Xindex to refer to its components?

That's correct.

Very good video!!! I have been looking for some literature that has this result. Do you know of any?

I don't recall any specific literature (I learned this long ago). But it's shown in this video, and you supposedly learned it, so shouldn't it be "obvious" now?

masterpiece,but I still failed my exam

Sorry, but that's life. Keep trying.

It should've been pointed out that the Jacobian of transformation y -> z is 1, since U is orthonormal.

You are right! Thanks for pointing that out.

❤❤❤

32:16 sir, how do you choose y =x+ sqrt2 /M cleverly? or is there a reason y is chosen as such ?

Here, the goal is to find a number (i.e., y) between x and b. For that, it suffices to define y as such. By the way, there's a typo: the last "=" at 33:40 should be "<" as √2 < (b - x)M. Sorry...

Thanks bro

Welcome

Teacher, you can do Video Analysis year 3 in University ?

Sorry, but there is no plan for Year-3 Analysis soon. But some parts of "Mathematical Methods I" ua-cam.com/play/PLyuCphY_oem8iWozOQgCkXfiqv4Ck_fa-.html&si=WDwnxYPVdaKP6PY8 and "Mathematical Methods II" ua-cam.com/play/PLyuCphY_oem9ot_dukEFxWxbpKC4_ZdIL.html&si=AN89tjL3LwS9kFvm may help. Thanks for the request anyway.

In which module will we learn about the nth root of numbers? ie finding all the 5 roots to the fifth roots of 1?

I don't know your university's curriculum, but we usually teach it when the polar form of complex numbers is introduced. That can be Calculus I ("Mathematical Methods I" in this channel) and/or Complex Analysis.

@BruneiMathClub ahh i see I've check the complex analysis's course description, seems to be in there. Oh and may i ask is this play list ( mathematical methods 1) follows exact syllabus of sm1201? And if so, where can i find ( or which textbook) to do more practices?

@@Wingwing-by5me , I suppose you are a UBD student. Actually, I designed the current SM-1201 module. You can find my lecture notes at bruneimathclub.blogspot.com/p/mathematical-methods-i.html

Very helpful video! I was trying to understand the proof in my textbook, but there was a step that I didn't really understand

Glad it helped!

great! thanks a lot

You are welcome!

King real King!

Thank you.

You're welcome!

Perplexity forwarded me here :) Thank you for the proof.

Welcome!

Geometric process have discrete time and continuous space 01:35

Right! Thanks for pointing it out. c.f., Wikipedia: en.wikipedia.org/wiki/Geometric_process

Best video i could find for this topic!

Thanks!

How can I study the Uniform Convergence for the series of function ∑(x/(x^2+1))^k Where x is from R

I'm not sure what you mean exactly. Why don't you make a video and let me know when you figure it out?

The intent of my statement is that I want a way to study the Uniform Convergence of the above series of functions

Thank's a lot for your excellent explanation Dr. Akira, maybe you lost a factor of 2 in the denominator in exp to get the following result: ρ_z(z) = 1⧸√(2π(σ_x^2+σ_y^2)) exp[-(z-(μ_x+μ_y))^2/2(σ_x^2+σ_y^2)] TJ

King

Very good thanks

Most welcome!

The P(X) should be P(Xi)*P(Xj) in the variance term, still using P(X) could be a mistake?

It is P(X) = P(X1, X2, ..., Xn) (joint probability density), not P(Xi)*P(Xj). Note Xi and Xj may not be independent.

@@BruneiMathClub Thank you so much. I finally get it.

Why is there a 1/2 timed to the covariance constraint? Should the degree of freedom of the covariance matrix be D(D+1)/2?

That 1/2 in the covariance constraint is not essential. It's there mostly for an aesthetic reason (it looks nicer after differentiation). You get the same result without the 1/2 factor (try it!), as it can be absorbed in the Lagrange multipliers (γ's).

@@BruneiMathClub Yes indeed. Thank you for your reply and fantastic videos! I’ve been working on the exercise of the Pattern Recognition and Machine Learning book and your videos helped a lot!

@@BruneiMathClub BTW you can also evaluate the stationary point in full matrix form using the trace operator for the quadratic term, which I find is pretty neat.

And what is it used for?

For example, the regression problem can be cast as finding a projection onto a subspace "generated" by a dataset. Future videos will explain such applications.

Can you please tell me what is the referencee to this demonstration?

It's in quite a few textbooks. For example, in "Elements of Information Theory" by Cover and Thomas, See also the Wikipedia page: en.wikipedia.org/wiki/Jensen%27s_inequality

@@BruneiMathClub thank's a lot.

You are grate mannn, thanks, god bless you

You're welcome! God bless you, too.

Great video! I was looking for a video about uniform convergence of the Fourier Series and your video really helped. Thanks.

Glad it was helpful!

thank you for accessible explanation!

Glad it was helpful!

It's nice to see someone doing proper math in a short. By proper math, I just mean something beyond basic calc/multivariable calc.

Thanks. Shorts can be helpful sometimes.

nice, thanks alot for sharing

You are welcome.

very clear ♥ new fan😍

Thanks and welcome!

I Love it

Thanks!

❤

The eigen basis is dual to the standard basis -- conjugacy is dual, spectral decomposition. The integers are self dual as they are their own conjugates. "Always two there are" -- Yoda. Real is dual to imaginary -- complex numbers are dual. Antipodal points identify for the rotation group SO(3) -- stereographic projection.

You really love duality!

@@BruneiMathClub Yes, duality means that there is a 4th law of thermodynamics. Anything which is dual to entropy is by definition the 4th law of thermodynamics:- Syntropy (prediction) is dual to increasing entropy -- the 4th law of thermodynamics! Teleological physics (syntropy) is dual to non teleological physics (entropy). Syntax is dual to semantics -- languages, communication. If mathematics is a language then it is dual. All observers make predictions to track targets, goals and objectives and this is a syntropic process -- teleological. The Einstein reality criterion:- "If, without in any way disturbing a system, we can predict with certainty (i.e., with probability equal to unity) the value of a physical quantity, then there exists an element of reality corresponding to that quantity." (Einstein, Podolsky, Rosen 1935, p. 777) Internet Encyclopedia of Philosophy:- www.iep.utm.edu/epr/ According to Einstein reality is predicted into existence -- a syntropic process, teleological. Your brain/mind creates models or predictions of reality hence your mind is syntropic (convergent). Here is a video of some well known physicists talking about duality, watch at 1 hour 4 minutes:- ua-cam.com/video/UjDxk9ZnYJQ/v-deo.html Mathematics is full of dualities (see next comment). Once you accept the 4th law this means that that there is a 5th law of thermodynamics:- Symmetry is dual to conservation -- the duality of Noether's theorem. Duality is a symmetry and it is being conserved according to Noether's theorem. Energy is duality, duality is energy -- the 5th law of thermodynamics! Potential energy is dual to kinetic energy -- gravitational energy is dual.

@@BruneiMathClub Here are some examples of duality in mathematics:- Points are dual to lines -- the principle of duality in geometry. The point duality theorem is dual to the line duality theorem. Homology is dual to co homology -- the word co means mutual and implies duality. Sine is dual to cosine or dual sine -- perpendicularity. Sinh is dual to cosh -- hyperbolic functions. Addition is dual to subtraction (additive inverses) -- abstract algebra. Multiplication is dual to division (multiplicative inverses) -- abstract algebra. Integration (summations, syntropy) is dual to differentiation (differences, entropy). Convergence (syntropy) is dual to divergence (entropy). Injective is dual to surjective synthesizes bijective or isomorphism. The word isomorphism actually means duality. Subgroups are dual to subfields -- the Galois correspondence. Positive is dual to negative -- electric charge or numbers. Positive curvature is dual to negative curvature -- Gauss, Riemann geometry. Curvature or gravitation is dual. "Perpendicularity in hyperbolic geometry is measured in terms of duality" -- Professor Norman J. Wildberger, universal hyperbolic geometry:- ua-cam.com/video/EvP8VtyhzXs/v-deo.html All observers have a syntropic or hyperbolic perspective of reality. The tetrahedron is self dual. The cube is dual to the octahedron. The icosahedron is dual to the dodecahedron. Waves are dual to particles -- quantum duality or pure energy is dual. Symmetric wave functions (Bosons, waves) are dual to anti-symmetric wave functions (Fermions, particles) -- the spin statistics theorem. Bosons are dual to Fermions -- atomic duality. Pure energy is dual and it is being conserved -- the 5th law of thermodynamics!

@@BruneiMathClub Concepts are dual to percepts -- the mind duality of Immanuel Kant. Mathematicians create new ideas or concepts all the time from their perceptions, measurements, observations or intuitions -- they are using duality! The bad news is that Immanuel Kant has been completely ignored for over 200 years and this is why you need new laws of physics! Antinomy (duality) is two truths that contradict each other -- Immanuel Kant. Enantiodromia is the unconscious opposite or opposame (duality) -- Carl Jung.

Projections imply two dual perspectives. Increasing or creating new dimensions or states is an entropic process -- Gram-Schmidt procedure. Decreasing or destroying dimensions or states is a syntropic process. Divergence (entropy) is dual to convergence (syntropy) -- increasing is dual to decreasing. "Always two there are" -- Yoda.

Perpendicularity, orthogonality = Duality! "Perpendicularity in hyperbolic geometry is measured in terms of duality" -- universal hyperbolic geometry. Orthonormality is dual. "Always two there are" -- Yoda. Vectors are dual to co-vectors (forms) -- vectors are dual. Space is dual to time -- Einstein.

Sine is dual to cosine or dual sine -- the word co means mutual and implies duality. "Always two there are" -- Yoda.

For this and the coming videos, I use typeset notes instead of handwritten notes for presentation. I'd appreciate it if you let me know which one you prefer.

Thank you

You're welcome.

Is it's f'(x) is signum function right?

Almost, but not exactly. They differ at x = 0. For f(x) = |x|, f'(0) = 1, whereas signum(0) = 0.

Great thanks

You are welcome.

Hello sir.. I'm sorry Bangladesh.

I swear I was so god damn confused about the definition of the ball (N_epsilon) set, and how it is used to determine whether a set is open or closed before your video. For some reason our material lacks any visualisation, so you video really really helped me out :)

Wow, I'm thrilled to hear that! Thanks, and enjoy your study.