Deriving the multivariate normal distribution from the maximum entropy principle

Вставка

- Опубліковано 9 лют 2025

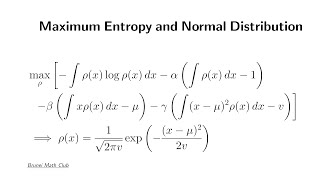

- Just like the univariate normal distribution, we can derive the multivariate normal distribution from the maximum entropy principle. But in this case, we need to specify the whole covariance matrix (not just variances).

For the univariate version, see • Maximum entropy and th...

For the basic properties of multivariate Gaussian integrals, see • Multivariate Gaussian ...

Why is there a 1/2 timed to the covariance constraint? Should the degree of freedom of the covariance matrix be D(D+1)/2?

That 1/2 in the covariance constraint is not essential. It's there mostly for an aesthetic reason (it looks nicer after differentiation). You get the same result without the 1/2 factor (try it!), as it can be absorbed in the Lagrange multipliers (γ's).

@@BruneiMathClub Yes indeed. Thank you for your reply and fantastic videos! I’ve been working on the exercise of the Pattern Recognition and Machine Learning book and your videos helped a lot!

@@BruneiMathClub BTW you can also evaluate the stationary point in full matrix form using the trace operator for the quadratic term, which I find is pretty neat.

Why is the gamma matrix invertible?

Good question! Short answer: That's an assumption. Specifically, we are (implicitly) assuming that there are n degrees of freedom for the n-variate normal distribution. If that's not the case (i.e., there are only m (< n) degrees of freedom), the gamma matrix is NOT invertible. However, we can change the variables by some linear transformation to do the same with m-variate normal distribution.

@@BruneiMathClub Thanks for your reply. I understand the assumption that there are n degrees of freedom for the n-variate normal distribution. However, it seems that the assertion of the gamma matrix being invertible is made before establishing any connection between the gamma matrix and the degrees of freedom. In other words, the gamma matrix appears to have no direct relationship with the degrees of freedom of the n-variate normal distribution when you complete the square using the inverse of the gamma matrix.

I think perhaps we can prove the invertibility of the gamma matrix by showing that it has only positive eigenvalues. If any eigenvalue were zero or negative, wouldn't the integral of p(x) along the corresponding eigenvector become infinite?

I see. You are saying that although I said the assumption was that there were n degrees of freedom, that doesn't immediately imply the Gamma matrix is invertible. You are right! How about this: First, we assume gamma's invertibility without connecting it to the degrees of freedom. Then, the gamma matrix turns out to be the inverse of the covariance matrix. Thus, the gamma has an inverse if and only if the covariance matrix is full rank (i.e., has n degrees of freedom) and, equivalently, all its eigenvalues are positive. By the way, the eigenvalues of a covariance matrix cannot be negative. When some eigenvalues are 0, we can eliminate redundant random variables to make the covariance matrix full rank (with fewer degrees of freedom) and repeat the same argument.

I don't think we can prove the invertibility of the gamma matrix as their elements are just Lagrange multipliers (no further assumptions). But I may be wrong. If you find out, please post a video and let me know!

The P(X) should be P(Xi)*P(Xj) in the variance term, still using P(X) could be a mistake?

It is P(X) = P(X1, X2, ..., Xn) (joint probability density), not P(Xi)*P(Xj). Note Xi and Xj may not be independent.

@@BruneiMathClub Thank you so much. I finally get it.

X is a vector, right? You're using X to refer to the vector and Xindex to refer to its components?

That's correct.

HUGE!

Is it?