Simple reverse-mode Autodiff in Python


- Published 13 Jun 2024

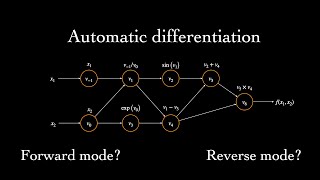

- Ever wanted to know how automatic differentiation (the general case of backpropagation for training neural networks in deep learning) works? Let's have an easy tutorial in Python. Here is the code: github.com/Ceyron/machine-lea...

-----

👉 This educational series is supported by the world-leaders in integrating machine learning and artificial intelligence with simulation and scientific computing, Pasteur Labs and Institute for Simulation Intelligence. Check out simulation.science/ for more on their pursuit of 'Nobel-Turing' technologies (arxiv.org/abs/2112.03235 ), and for partnership or career opportunities.

-------

📝 : Check out the GitHub Repository of the channel, where I upload all the handwritten notes and source-code files (contributions are very welcome): github.com/Ceyron/machine-lea...

📢 : Follow me on LinkedIn or Twitter for updates on the channel and other cool Machine Learning & Simulation stuff: / felix-koehler and / felix_m_koehler

💸 : If you want to support my work on the channel, you can become a patron on Patreon here: / mlsim

🪙: Or you can make a one-time donation via PayPal: www.paypal.com/paypalme/Felix...

-------

⚙️ My Gear:

(Below are affiliate links to Amazon. If you decide to purchase the product or something else on Amazon through this link, I earn a small commission.)

- 🎙️ Microphone: Blue Yeti: amzn.to/3NU7OAs

- ⌨️ Logitech TKL Mechanical Keyboard: amzn.to/3JhEtwp

- 🎨 Gaomon Drawing Tablet (similar to a WACOM Tablet, but cheaper, works flawlessly under Linux): amzn.to/37katmf

- 🔌 Laptop Charger: amzn.to/3ja0imP

- 💻 My Laptop (generally I like the Dell XPS series): amzn.to/38xrABL

- 📱 My Phone: Fairphone 4 (I love the sustainability and repairability aspect of it): amzn.to/3Jr4ZmV

If I had to purchase these items again, I would probably change the following:

- 🎙️ Rode NT: amzn.to/3NUIGtw

- 💻 Framework Laptop (I do not get a commission here, but I love the vision of Framework. It will definitely be my next Ultrabook): frame.work

As an Amazon Associate I earn from qualifying purchases.

-------

Timestamps:

00:00 Intro

00:18 Our simple (unary) function

00:35 Closed-Form symbolic derivative

01:16 Validate derivative by finite differences

01:55 What is automatic differentiation?

02:53 Backprop rule for sine function

04:27 Backprop rule for exponential function

06:06 Rule library as a dictionary

07:14 The heart: forward and backward pass

11:17 Trying the rough autodiff interface

13:15 Syntactic sugar to get a high-level interface

14:10 Compare autodiff with symbolic differentiation

14:59 Outro
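The steps listed in the timestamps (backprop rules for sine and exponential, a rule library as a dictionary, a forward and backward pass, and a thin high-level wrapper) can be sketched roughly as follows. This is a minimal illustration and not the code from the video or the linked repository: the names `sin_rule`, `exp_rule`, `RULES`, `vjp`, and `val_and_grad` are hypothetical, and the example function exp(sin(sin(x))) is an assumed stand-in for the video's unary function.

```python
import math

# Hypothetical rule library: each rule returns the primal output together
# with a "pullback" closure that maps the output cotangent to the input
# cotangent.
def sin_rule(x):
    y = math.sin(x)
    return y, lambda y_cotangent: y_cotangent * math.cos(x)

def exp_rule(x):
    y = math.exp(x)
    return y, lambda y_cotangent: y_cotangent * y

RULES = {math.sin: sin_rule, math.exp: exp_rule}

def vjp(chain, x):
    """Forward pass through a chain of unary primitives, recording pullbacks."""
    pullbacks = []
    value = x
    for primitive in chain:
        value, pullback = RULES[primitive](value)
        pullbacks.append(pullback)

    def backward(cotangent):
        # Backward pass: apply the recorded pullbacks in reverse order.
        for pullback in reversed(pullbacks):
            cotangent = pullback(cotangent)
        return cotangent

    return value, backward

def val_and_grad(chain):
    """Syntactic sugar: return a function computing (value, derivative)."""
    def wrapped(x):
        value, backward = vjp(chain, x)
        return value, backward(1.0)
    return wrapped

# Assumed example function: f(x) = exp(sin(sin(x)))
chain = [math.sin, math.sin, math.exp]
value, grad = val_and_grad(chain)(2.0)
```

The result can be validated the same way the video validates the symbolic derivative, by comparing `grad` against a central finite difference of the chain evaluated at 2.0.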

Very clear explanation! Thanks and hope you'll get more views

Thanks for the kind feedback 😊

Feel free to share it with your network :)

Thanks for the great content as always. One question and one comment. How would you handle it if it is a DAG instead of a chain? Any reference (book/paper) that you can share? I noted that for symbolic differentiation, you pay the price of redundant calculation (quadratic in the length of chains) but with constant memory. On the other hand, the auto-diff caches the intermediate values and has linear calculation but also linear memory.

Hi,

Thanks for the kind comment :), and big apologies for the delayed reply. I just started working my way through a longer backlog of comments; it's been a bit busy in my private life the past months.

Regarding your question: For DAGs the approach is to either record a separate Wengert list or overload operations. It's a bit harder to truly find a taxonomy for this because different autodiff engines all have their own style (source transformation at various stages in the compiler/interpreter chain vs. pure operator overloading in the high-level language, restrictions to certain high-level linear algebra operations, etc.). I hope I can finish the video series with some examples of it in the coming months.

These are some links that directly come to my mind: The "Autodidact" repo is one of the earlier tutorials of simple (NumPy-based) autodiff engines in Python, written by Matthew Johnson (co-author of the famous HIPS autograd package): github.com/mattjj/autodidact . The HIPS autograd authors are also involved in the modern JAX package (that is featured quite often on the channel). There is a similar tutorial called "autodidaX": jax.readthedocs.io/en/latest/autodidax.html

The micrograd package by Andrej Karpathy is also very insightful: github.com/karpathy/micrograd . It is based on a "PyTorch-like" perspective. His video on "Becoming a Backprop Ninja" can also be helpful.

In the Julia world: you might find the documentation of the "Yota.jl" package helpful: dfdx.github.io/Yota.jl/dev/design/

Hope that gave some first resources. :)
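To make the operator-overloading route for DAGs mentioned in this reply a bit more concrete, here is a minimal scalar sketch in the spirit of micrograd. It is not taken from any of the linked packages: the `Var` class, the `sin` helper, and the `backward` function are hypothetical names chosen for illustration. The key difference from a chain is that a node can feed several consumers, so cotangents are accumulated with `+=` and nodes are processed in reverse topological order.

```python
import math

class Var:
    """Scalar node in a computational graph (hypothetical minimal design)."""

    def __init__(self, value, parents=()):
        self.value = value
        # Tuple of (parent_node, local_derivative of this node wrt the parent)
        self.parents = parents
        self.cotangent = 0.0

    def __add__(self, other):
        return Var(self.value + other.value, ((self, 1.0), (other, 1.0)))

    def __mul__(self, other):
        return Var(
            self.value * other.value,
            ((self, other.value), (other, self.value)),
        )

def sin(x):
    return Var(math.sin(x.value), ((x, math.cos(x.value)),))

def backward(output):
    """Propagate cotangents from the output back to every node in the DAG."""
    order, visited = [], set()

    def visit(node):
        # Depth-first post-order: parents are appended before their consumers.
        if id(node) in visited:
            return
        visited.add(id(node))
        for parent, _ in node.parents:
            visit(parent)
        order.append(node)

    visit(output)
    output.cotangent = 1.0
    # Reversed post-order: every consumer is handled before its parents.
    for node in reversed(order):
        for parent, local_derivative in node.parents:
            parent.cotangent += node.cotangent * local_derivative

x = Var(2.0)
y = sin(x) * x + x  # x is used three times, so the graph is a DAG
backward(y)
# x.cotangent now holds dy/dx = cos(x) * x + sin(x) + 1, evaluated at x = 2
```

The intermediate values stored on each node are the linear memory cost raised in the question above; in exchange, each edge of the graph is visited only once in the backward pass.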

Thank you for the clear explanation! I wonder where the term "cotangent" comes from? A Google search shows it comes from differential geometry. Do I need to learn differential geometry to understand it?

You're welcome 🤗

Glad you liked it. The term cotangent is indeed borrowed from differential geometry. If you are using reverse-mode autodiff to compute the derivative of a scalar-valued loss, you can think of the cotangent associated with a node in the computational graph as the derivative of the loss with respect to that node. More abstractly, it is just the auxiliary quantity associated with each node.
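As a small numerical illustration of this reading of the cotangent, the sketch below uses an assumed toy graph x -> a = sin(x) -> loss = exp(a) (not the function from the video) and checks that the cotangent of each node matches a finite-difference estimate of the derivative of the loss with respect to that node. All variable names are hypothetical.

```python
import math

x = 0.5
a = math.sin(x)     # intermediate node
loss = math.exp(a)  # scalar output

# Backward pass: start with cotangent 1 at the loss and pull it back.
loss_cotangent = 1.0
a_cotangent = loss_cotangent * math.exp(a)  # derivative of loss wrt a
x_cotangent = a_cotangent * math.cos(x)     # derivative of loss wrt x

# Central finite differences, perturbing one node at a time.
h = 1e-6
dloss_da = (math.exp(a + h) - math.exp(a - h)) / (2 * h)
dloss_dx = (math.exp(math.sin(x + h)) - math.exp(math.sin(x - h))) / (2 * h)
```

Up to finite-difference error, `a_cotangent` agrees with `dloss_da` and `x_cotangent` agrees with `dloss_dx`.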

Hi, thanks for the video. At 4:20 you say you link to some videos in the top right, but I do not see them.

You're welcome 😊

Thanks for catching that, I will add the link later today. This should have been linked to this video: ua-cam.com/video/Agr-ozXtsOU/v-deo.html

More generally, there is also a playlist with a larger collection of rules (also for tensor-level autodiff): ua-cam.com/play/PLISXH-iEM4Jn3SEi07q8MJmDD6BaMWlJE.html

And I started collecting them on a simple, accessible website (let me know if you spot an error there; it's still an early version): fkoehler.site/autodiff-table/

Can you please tell me which resources you recommend for learning maths? Or how did you learn math?

Hi,

it's a great question, but very hard to answer. I can't pin it down to one approach, one textbook, etc.

I have an engineering math background (I studied mechanical engineering for my bachelor's degree). Generally speaking, I prefer the approach taken in engineering math classes, which is more algorithmically focused than theorem-proof focused. Over the course of my undergrad, I used various YouTube resources (which also motivated me to start this channel). The majority were in German; the English-language ones include the vector calculus videos by Khan Academy (which were done by Grant Sanderson) and, of course, 3b1b.

For my graduate education, I figured out that I really liked reading documentation and studying the APIs of various numerical computer programs. JAX and TensorFlow have amazing docs. This is also helpful for PDE simulations. Usually, I guide myself by Google, forum posts, and a general sense of curiosity. 😊

@MachineLearningSimulation thanks for the reply

You're welcome 😊

Good luck with your learning journey