JEPA - A Path Towards Autonomous Machine Intelligence (Paper Explained)


- Published 17 May 2024

- #jepa #ai #machinelearning

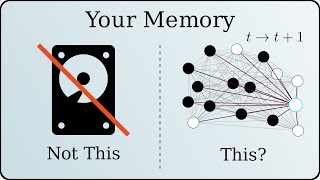

Yann LeCun's position paper on a path towards machine intelligence combines Self-Supervised Learning, Energy-Based Models, and hierarchical predictive embedding models to arrive at a system that can teach itself to learn useful abstractions at multiple levels and use that as a world model to plan ahead in time.

OUTLINE:

0:00 - Introduction

2:00 - Main Contributions

5:45 - Mode 1 and Mode 2 actors

15:40 - Self-Supervised Learning and Energy-Based Models

20:15 - Introducing latent variables

25:00 - The problem of collapse

29:50 - Contrastive vs regularized methods

36:00 - The JEPA architecture

47:00 - Hierarchical JEPA (H-JEPA)

53:00 - Broader relevance

56:00 - Summary & Comments

Paper: openreview.net/forum?id=BZ5a1...

Abstract: How could machines learn as efficiently as humans and animals? How could machines learn to reason and plan? How could machines learn representations of percepts and action plans at multiple levels of abstraction, enabling them to reason, predict, and plan at multiple time horizons? This position paper proposes an architecture and training paradigms with which to construct autonomous intelligent agents. It combines concepts such as configurable predictive world model, behavior driven through intrinsic motivation, and hierarchical joint embedding architectures trained with self-supervised learning.

Author: Yann LeCun

Links:

Homepage: ykilcher.com

Merch: ykilcher.com/merch

YouTube: / yannickilcher

Twitter: / ykilcher

Discord: ykilcher.com/discord

LinkedIn: / ykilcher

If you want to support me, the best thing to do is to share out the content :)

If you want to support me financially (completely optional and voluntary, but a lot of people have asked for this):

SubscribeStar: www.subscribestar.com/yannick...

Patreon: / yannickilcher

Bitcoin (BTC): bc1q49lsw3q325tr58ygf8sudx2dqfguclvngvy2cq

Ethereum (ETH): 0x7ad3513E3B8f66799f507Aa7874b1B0eBC7F85e2

Litecoin (LTC): LQW2TRyKYetVC8WjFkhpPhtpbDM4Vw7r9m

Monero (XMR): 4ACL8AGrEo5hAir8A9CeVrW8pEauWvnp1WnSDZxW7tziCDLhZAGsgzhRQABDnFy8yuM9fWJDviJPHKRjV4FWt19CJZN9D4n


Hoping for a paper review with the author, Yann LeCun 🤞🤞🤞

It's not every day you see such big-idea papers authored by one person. LeCun knows how to aim high.

Well... Schmidhuber begs to differ!

Well, you can do that if your name is LeCun (or Hinton).

That's exactly what I thought for a long time. People are not learning from experience all the time; they are modeling a lot, so they don't need large datasets. You just need to learn how things work; then you can imagine how they work in any particular case, even if you haven't seen it before.

But how can you know whether your imagination of an unseen scenario (a car crash on the Moon) is correct?

@@danielscrooge3800 There are many known parts in the equation: the weight of the different parts of the car, gravity, and how dust rises and settles. It helps if you have already seen something fall on the Moon.

@@danielscrooge3800 You don't until you test it. Then, you can correct your assumptions to the situation; this is how science works fundamentally. But really, would you need to know if that scenario is true in any meaningful sense? The exact mechanics of a car crash on the moon are of no import to a self-driving car A.I. They are on Earth, after all.

@@danielscrooge3800 You don't need the model to be 100% accurate; you only need an approximation and then you can correct it as the events develop.

@@danielscrooge3800 If you know that gravity is lower on the Moon, you have a rough understanding of the physics on the Moon.

If you know how the materials of the car behave under stress, then you can imagine how a car is destroyed on the Moon.

You probably imagined two cars crashing in slow motion.

That’s wrong.

The cars crash like normal, but the trajectory and motion of the parts that fly off the cars are different.

How can you know? You can’t; you just imagine based on what you already know.

Thanks for explaining. This is way over my head, but I am learning. Thanks

Love these in-depth reviews complete with humor and thoroughly explained ideas!

The basic idea seems really similar to the concept of the Kalman filter in control engineering! At many points it seemed to me that the paper lacked vocabulary for describing parts of JEPA, vocabulary that could be taken directly from the Kalman filter.

There you also have an internal approximation of the 'real' world (as a state x), but you don't trust your inputs (sensors) too much. Instead, you also run a simulation, and your actions are based on the 'best guess' of this simulation. You then need to perform feedback: apply the action in the simulation, gather new measurements, observe the difference from your internal simulation, and then slightly correct the simulation to better agree with the observation.

It's crazy how quickly this model converges on a good state estimate, even if your inputs are noisy as hell. Since its invention and application in the Apollo program, it has been *the* workhorse of modern avionics, so I'm excited to see the principles applied in the field of AI!
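The predict/correct loop described above can be sketched as a scalar Kalman filter. This is a minimal sketch under stated assumptions, not anything taken from the JEPA paper: a one-dimensional hidden state that the internal model assumes stays constant, no control input, and hypothetical noise settings `q` and `r`.

```python
import random


def kalman_1d(measurements, q=1e-4, r=0.25, x0=0.0, p0=1.0):
    """Scalar Kalman filter for a hidden state assumed to stay (nearly) constant.

    q: process-noise variance, i.e. how far the internal simulation may drift
    r: measurement-noise variance, i.e. how little the sensors are trusted
    Returns the posterior estimate after each measurement.
    """
    x, p = x0, p0
    estimates = []
    for z in measurements:
        # Predict: the internal model keeps x unchanged, but its uncertainty grows.
        p = p + q
        # Correct: move toward the measurement in proportion to the Kalman gain.
        k = p / (p + r)
        x = x + k * (z - x)
        p = (1.0 - k) * p
        estimates.append(x)
    return estimates


if __name__ == "__main__":
    random.seed(0)
    noisy = [1.5 + random.gauss(0.0, 0.5) for _ in range(200)]
    est = kalman_1d(noisy)
    print(f"last raw reading: {noisy[-1]:.3f}, filtered estimate: {est[-1]:.3f}")
```

Raising `r` (trusting the sensors less) makes the estimate smoother but slower to react; the gain `k` balances that trade-off on every step.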

While it certainly has some similarities, it has fundamental differences. First, the Kalman filter and state-space models expect one to have a good physical/mathematical definition of the model (e.g., the world), which we know is extremely hard, if not impossible, to obtain. Therefore, we need to build a model for each particular task; that might be why the Kalman filter's best applications are in navigation systems (since good physics around velocity, acceleration, etc. exists). Second, the noise is usually assumed to be white and zero-mean. That is why most of the recent developments in time series and signal processing have moved towards deep learning and nature-inspired learning similar to this paper: rather than coming up with a good model, we try to extract the right information from observations, which is done by applying smart regularization when learning such abstract information, as Yannic described here...

@@hoomansedghamiz2288 You've hit the nail on the head with this comment. Models like JEPA are NOT glorified Kalman filters. However, I can see why OP used the analogy of a Kalman filter to help them understand hidden states and conceptualise what was being discussed. Thanks for the clear breakdown.

Solid review of an accessibly written paper. At this point there are few reasons for anyone not to hop into this stuff.

It would be cool if you reviewed the I-JEPA paper 🥺.

I discovered your channel just a few weeks ago, and I must say I’m impressed. You're really smart, and you are definitely able to go through these papers from arXiv and explain things that I struggle with. So thank you.

This was a great and focused summary, thanks!

This content you make here is the best

Thank you in advance, I'm starting to watch it right now!

I like the model and the approach. Especially the hierarchy levels of JEPA, with different levels of abstraction, are really lifelike. Having the right configuration may be more of an issue.

I'm wondering what the world model should look like. How do you even define that?

At some point people will need Google Glass-like glasses filming everything they do, just so machines can learn from it.

Great video and greatly explained. Thanks a lot!

Thanks for making great content. You are doing a lot to democratize AI, not only through YouTube but also with your open-source AI project!

Yann LeCun is at it again

Hi Yannic! Love your content. I watch almost all of it. Where is the best place to put video ideas? I would love a video on YOLOv6 and v7, which recently came out. Seems like a mess. And also a video on Meta's new translation model. I had a look at the paper and saw that it was over 100 pages, so I didn't bother reading it myself ;)

Thanks for pre-digesting this for us. It's a big paper to tackle. I was especially glad to hear your "that escalated quickly" comment regarding the emotions. Every time he mentions training emotional AI and gets no reaction from the audience, I think to myself: "I can't be the only person who thinks emotional AI may be problematic."

Artificial emotions certainly have ethical implications, if it's given that they would actually arise from such a model.

thanks for the video!

Is there any discussion about diffusion/noise models? I guess it's a form of data augmentation and suffers from the curse of dimensionality. However, they can take images from noise to high detail, which is quite surprising and suggests the curse is not that strong.

Transformer attention & graph data structures 🤘🏼

I feel like LeCun took great inspiration from what Karl Friston (Free Energy Principle, World Model, Active Inference) promotes.

Good job🙏

At 11:58, I am not sure you can *easily* backprop through the actions after you sample; *maybe* you can use a trick to estimate the gradient. Am I missing something?
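Two standard tricks address this question. With the reparameterization (pathwise) trick, a Gaussian action is written as `a = mu + sigma * eps`, so gradients flow through `mu`; the score-function (REINFORCE) estimator needs no derivative of the cost at all. The sketch below, assuming a hypothetical quadratic `cost` and a Gaussian action distribution, compares both against the exact gradient; it does not claim that the paper's planner samples actions this way.

```python
import random


def cost(a):
    # Hypothetical toy cost of a scalar action; the best action is a = 3.
    return (a - 3.0) ** 2


def dcost(a):
    # Derivative of the toy cost with respect to the action.
    return 2.0 * (a - 3.0)


def pathwise_grad(mu, sigma, rng, n=20000):
    """Reparameterization: a = mu + sigma * eps, so d cost(a) / d mu = cost'(a)."""
    total = 0.0
    for _ in range(n):
        eps = rng.gauss(0.0, 1.0)
        total += dcost(mu + sigma * eps)
    return total / n


def score_function_grad(mu, sigma, rng, n=20000):
    """REINFORCE: E[cost(a) * d log N(a; mu, sigma^2) / d mu]; cost may be a black box."""
    total = 0.0
    for _ in range(n):
        a = rng.gauss(mu, sigma)
        total += cost(a) * (a - mu) / sigma ** 2
    return total / n


if __name__ == "__main__":
    rng = random.Random(0)
    # The exact gradient of E[cost] w.r.t. mu is 2 * (mu - 3), i.e. -4 at mu = 1.
    print(pathwise_grad(1.0, 0.5, rng), score_function_grad(1.0, 0.5, rng))
```

Both estimates should land near -4. The pathwise one typically has much lower variance but requires a differentiable cost, while the score-function version works even when the cost cannot be differentiated.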

Great explanation, but I have two questions:

1. How do we obtain the latent variable "Z" used to compute the energy function E(x,y,z) during training?

2. Regarding Figure 13 ("Non-contrastive training of JEPA"): our input X goes through the encoder, which produces the latent representation of X, called S_x. This latent representation is then run through the predictor function to obtain S̃_y (I think of this prediction as a reconstruction of some parts of the input). We also take the encoded representation of the original Y, S_y, and then we calculate the loss between S̃_y and S_y. How can we measure the loss between them? In my head, S̃_y is the reconstructed input and S_y is a vector that encodes Y, so how can we compare them? Or what have I misunderstood?

1. There are multiple ways. For example, a VQ-VAE would just take z as the closest vector in the codebook. You could also think of training an encoder, or even just sampling z from some distribution.

2. The loss is computed between the encoding of y and the predicted encoding of y. The idea is that we want to predict the encoding of y from the encoding of x (that's what the pred function is doing); there is no explicit part about reconstructing any of the inputs.
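The two answers can be put together in a few lines. The sketch below assumes hypothetical fixed linear encoders, an additive predictor, and a three-entry set of candidate latents (`ENC_X`, `ENC_Y`, `predictor`, and `CODEBOOK` are all illustrative; nothing is trained). The energy compares the predicted encoding of y with the actual encoding of y, and z is picked by minimizing that energy over the discrete candidates, in the spirit of the codebook option mentioned above.

```python
def matvec(w, v):
    # Matrix-vector product for small lists of lists.
    return [sum(wi * vi for wi, vi in zip(row, v)) for row in w]


def sq_dist(a, b):
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


# Hypothetical toy components: fixed weights, no training shown.
ENC_X = [[1.0, 0.0], [0.0, 1.0]]                 # encoder for x -> s_x
ENC_Y = [[0.5, 0.5], [0.5, -0.5]]                # encoder for y -> s_y
CODEBOOK = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]  # candidate latents z


def predictor(s_x, z):
    # Predict s_y from s_x; z carries what s_x alone cannot determine.
    return [a + b for a, b in zip(s_x, z)]


def energy(x, y, z):
    s_x = matvec(ENC_X, x)
    s_y = matvec(ENC_Y, y)
    # The distance lives in representation space, not input space.
    return sq_dist(predictor(s_x, z), s_y)


def free_energy(x, y):
    # E(x, y) = min over candidate z of E(x, y, z); also return the minimizer.
    best = min(CODEBOOK, key=lambda z: energy(x, y, z))
    return energy(x, y, best), best


if __name__ == "__main__":
    print(free_energy([0.0, 0.0], [1.0, 1.0]))  # (0.0, [1.0, 0.0])
```

Nothing here reconstructs x or y; the loss is measured entirely between representations.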

@@YannicKilcher How do you not get bored or distracted while working through this, since it's so long? Hope you can share. Thanks for sharing.

@@YannicKilcher Fantastic, now I understand this architecture, thank you so much ! Especially the second point, now the idea of JEPA seems much more interesting

Every Russian be like: lmao jepa

true man

The thing that got me here was the "final super Saiyan form of the model" 🤣🤣

Thanks for the explanation @Yannic

51:56 Do robots dream of electric sheep, as a flow diagram?

Took me two days to digest this one. Seems important. Lots of good ideas.

Stats:

The video lasts 3577 seconds, of which 663.005 seconds are silence.

10331 total words spoken in 00:48:33 (HMS), out of a total time of 00:59:37, excluding 00:11:03 of silence.

212 de-silenced wpm
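The figures in this comment are internally consistent. A quick check, using only the numbers given (how the silence was detected is not stated):

```python
total_s = 3577          # full video length in seconds
silence_s = 663.00485   # silence reported in the comment
words = 10331


def hms(seconds):
    # Truncate to whole seconds and format as HH:MM:SS.
    s = int(seconds)
    return f"{s // 3600:02d}:{s % 3600 // 60:02d}:{s % 60:02d}"


spoken_s = total_s - silence_s
wpm = words / (spoken_s / 60)
print(hms(spoken_s), hms(total_s), hms(silence_s), round(wpm, 1))
# prints: 00:48:33 00:59:37 00:11:03 212.7
```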

Amazing, thx

51:50 🤯 … different continuations of your world model: yes! 🙌🏼 (figure 17)

What do you think about Schmidhuber's comment on this paper? It looks like he is really angry this time.

Inference-time optimization, like MAML?

I don't work in AI, but in mental health. It's interesting how people also suffer from "function collapse" at some point in their lives (e.g., "I always believed my husband was faithful, but yesterday I found him with my best friend"). In those cases, people have a hard time fitting their model of the world to a new reality, and it takes a lot of time for them to accept it (or retrain their models). At least from an intuitive point of view, the contents of this paper make sense to me.

A quick question, Yannic:

Is there any NN model that, after the training phase, in deployment (at inference time), is able to learn new things? For example, learning my name and all my personal details... In other words, knowing new data that it was not shown during training? So an NN model that, beyond its training parameters, can retain things it did not know once training has finished, and then integrate this new information into its permanent knowledge?

Very cool at 41:42! So we need to make sure Z does not go crazy and become Y directly, and we also want the latent representations of x and y to be robust. I guess it is to capture the notions of variation in them (w.r.t. contrastive learning representations).
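The point about z "becoming Y directly" can be made concrete with a toy model. Assuming a hypothetical additive predictor `s_x + z` and an L2 penalty on z (one simple regularizer, chosen here only for illustration), inferring z by gradient descent shows that without the penalty z absorbs the whole difference and the energy drops to zero for any y, while with the penalty some energy remains.

```python
def infer_z(s_x, s_y, lam, steps=500, lr=0.05):
    """Minimize ||s_x + z - s_y||^2 + lam * ||z||^2 over z by gradient descent."""
    z = [0.0] * len(s_x)
    for _ in range(steps):
        grad = [2.0 * (a + b - c) + 2.0 * lam * b for a, b, c in zip(s_x, z, s_y)]
        z = [b - lr * g for b, g in zip(z, grad)]
    return z


def reg_energy(s_x, s_y, z, lam):
    # Prediction error in representation space plus the penalty on z.
    pred_err = sum((a + b - c) ** 2 for a, b, c in zip(s_x, z, s_y))
    return pred_err + lam * sum(b * b for b in z)


if __name__ == "__main__":
    s_x, s_y = [0.0, 0.0], [2.0, -2.0]
    for lam in (0.0, 1.0):
        z = infer_z(s_x, s_y, lam)
        print(lam, [round(v, 3) for v in z], round(reg_energy(s_x, s_y, z, lam), 3))
```

For s_x = (0, 0) and s_y = (2, -2), `lam = 0` gives z = (2, -2) and zero energy, whereas `lam = 1` gives z = (1, -1) and an energy of 4, so the energy can still tell compatible pairs from incompatible ones.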

Very cool

This seems super simple. We just train a recurrent predictor on observations from the real world.

The best thing about this paper is that we know it's 100% correct because Yann LeCun is never wrong! You can check his social media and you will see!

This might be a stupid and naive question, but could we use it for text-based applications, without video/images? Would it be better than autoregressive LLMs?

I am really surprised there is no mention of Karl Friston and his Free Energy Principle. LeCun's ideas sound so similar, yet there is not even a hint of the FEP.

I was just thinking the same thing about Friston. This probably was "recommended" to me because I've spent a lot of time on Friston videos?

Friston's FEP is not a substantive model of anything; it's word salad.

@@kvazau8444 To be fair he at least has the idea of predictive coding, even though the formalization seems both limited and intentionally obfuscated.

I think the regularization of the z and the training approach could be further expanded, right?

This would take the notion of the world going back to a resting state after an action: the world state goes X1 -> action -> X2 -> X3, but X3 is often ≈ X1. E.g., I launch a ball, it flies, then it falls, and almost everything is back the way it was before I performed the action. In that case, the model can perform another action in X1 and see the outcome X2' -> X3'. In that sense, the model can really see the multiple possible futures after X1 and be better at predicting the energy function. I don't know if that makes sense to anyone.

It seems that this z could be more connected to the action of the actor.

So the (only?) difference between the loss function and the energy function is that the loss is calculated during training, while the energy is calculated during inference?

I get that it's a different formulation, but isn't the "maximisation of information in the encoded latent" essentially the exact same thing as contrastive learning? When you described taking a minibatch and ensuring that the latents are different, that's virtually the exact same thing?
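One way to see the difference is to write the regularizer down. The sketch below implements VICReg-style variance and covariance terms in plain Python (the hinge target `gamma = 1` and `eps = 1e-4` are assumptions). Both terms act on statistics of each embedding dimension across the minibatch; no pair of samples is explicitly pushed apart, which is the formal difference from a contrastive loss, even though, as the question notes, both need a batch.

```python
import math


def variance_loss(batch, gamma=1.0, eps=1e-4):
    """Hinge penalty when a dimension's std across the batch falls below gamma."""
    n, d = len(batch), len(batch[0])
    loss = 0.0
    for j in range(d):
        col = [row[j] for row in batch]
        mean = sum(col) / n
        var = sum((v - mean) ** 2 for v in col) / (n - 1)
        loss += max(0.0, gamma - math.sqrt(var + eps))
    return loss / d


def covariance_loss(batch):
    """Sum of squared off-diagonal covariances, divided by the dimension."""
    n, d = len(batch), len(batch[0])
    means = [sum(row[j] for row in batch) / n for j in range(d)]
    centered = [[row[j] - means[j] for j in range(d)] for row in batch]
    loss = 0.0
    for i in range(d):
        for j in range(d):
            if i != j:
                c = sum(r[i] * r[j] for r in centered) / (n - 1)
                loss += c * c
    return loss / d


if __name__ == "__main__":
    collapsed = [[1.0, 1.0]] * 4
    redundant = [[1.0, 1.0], [-1.0, -1.0], [2.0, 2.0], [-2.0, -2.0]]
    print(variance_loss(collapsed), covariance_loss(redundant))
```

A fully collapsed batch is penalized by the variance term, and a batch whose dimensions are redundant copies of each other is penalized by the covariance term.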

I think I need a one-level-simpler explanation of what Z is. :D

Is Z like an embedding matrix, but outside of the model, where each training example would have a randomly initialized entry that backpropagation modifies? Kind of like a pressure-release valve for loss backpropagation?

Is there a small jepa implementation that we can play with?

3:47: This is reminiscent of the model of the philosopher Thomas Metzinger, whose 'Naturalistic Theory of Mind' from 1997 draws exactly this graph in a more abstract, sketchy way.

Does he have new developments on the theory of mind?

@@LKRaider It doesn't look to me like there are new developments in this area of scientific research. This may be a good time to say that we are now on safe ground with the understanding of our mind and the Theory of Mind.

CLIP = Contrastive Language-Image Pre-training sounds pretty contrastive to me :)

Nice 😁

Doesn’t the C in CLIP stand for Contrastive haha?

The part at around 14:30 reminds me of iterated amplification and distillation

It's a bit like Dreamer v2 actor training

Allow me to ask one dumb question that is not related to this topic. What is the iPad app he uses to mark notes while reading?

Looks like a Surface PC

This seems reasonable enough, but it seems like it ought to be feasible for someone to implement a toy version of this (or at least the hierarchical JEPA part, not the configurator).

Has anyone done so?

So, for the toy model to work, the environment should have some hierarchical structure to its tasks. How about this:

the play field has some large-ish regions with different colors (or labels of some kind), with some very basic “just go around it” obstacles scattered in each region, and the agent’s goal is to be in regions of given colors in a given order (being in regions with other colors inserted in-between isn’t penalized, so if the goal is “red then green”, and the agent enters red, then goes from red to blue, and then from blue to green, that is succeeding at the goal just as much as going directly from red to green. But the goal is to succeed quickly, in addition to just succeeding.)

So then like, the large scale planning could be like “go from red to blue then from there to green” (e.g. because there’s no green region directly next to the red region, and passing through blue seems the shortest route), and the small scale planning could be along the lines of “move in this direction, then this direction, then this direction, because that will bring me to where I’ll enter the next region in the overall plan”.

Maybe make the position floating point (instead of a grid world) in order to keep things differentiable and make coming up with representations easier?

And like, make movement consist of choosing an angle (as a continuous variable), and then moving a distance of 1 in that direction (or until reaching a wall, whichever is shorter), but then maybe adding a little bit of noise from the environment for where it ends up. Also maybe add a little noise from the environment in its perception of where it is?
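The movement rules proposed above can be sketched as an environment step. Assumptions, all hypothetical: a square arena `[0, size]^2` whose boundary is the only wall (the small obstacles are omitted), Gaussian noise on both the resulting position and the perceived position, and a placeholder color map of three vertical stripes.

```python
import math
import random


def _clip(v, lo, hi):
    return max(lo, min(hi, v))


def step(pos, angle, size=10.0, noise=0.05, rng=random):
    """Move up to distance 1 along `angle`, stopping early at the arena boundary."""
    x, y = pos
    dx, dy = math.cos(angle), math.sin(angle)
    # Largest t >= 0 that keeps (x + t*dx, y + t*dy) inside [0, size]^2.
    t_wall = math.inf
    if dx > 0:
        t_wall = min(t_wall, (size - x) / dx)
    elif dx < 0:
        t_wall = min(t_wall, -x / dx)
    if dy > 0:
        t_wall = min(t_wall, (size - y) / dy)
    elif dy < 0:
        t_wall = min(t_wall, -y / dy)
    t = min(1.0, t_wall)
    # Environment noise on where the agent ends up, clipped so it cannot leave the arena.
    nx = _clip(x + t * dx + rng.gauss(0.0, noise), 0.0, size)
    ny = _clip(y + t * dy + rng.gauss(0.0, noise), 0.0, size)
    return (nx, ny)


def observe(pos, noise=0.05, rng=random):
    # Noisy perception of the agent's own position.
    return (pos[0] + rng.gauss(0.0, noise), pos[1] + rng.gauss(0.0, noise))


def region(pos, size=10.0, colors=("red", "blue", "green")):
    """Hypothetical color map: equal-width vertical stripes."""
    idx = min(int(pos[0] / size * len(colors)), len(colors) - 1)
    return colors[idx]
```

A planner would call `step` with a continuous angle and read `region(observe(pos))` to track progress through the color sequence.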

It feels like implementing enough of what he describes for the model to be able to succeed at this toy task, ought to be doable.

Like, I imagine a model with under 1000 parameters doing this?

Maybe that’s too low of a guess, as I don’t have any practical experience with this kind of thing.

But, what does it have to do?

It needs a representation of the world's state, which (since the main part is a 2D position) could likely be just a handful of dimensions, but let's say 20.

It needs to ... ok yeah 1000 was probably way too low an estimate unless you are hand-wiring every weight, and weights of zero don’t count towards parameter count.

(Because just like, one fully connected layer between two layers of 20 nodes, already costs 400 connections, more than a third of the 1000 estimate. Feels silly to have suggested 1000 in retrospect.)

But let's say under 2000*4*2*2*3 (= 96,000), so probably under 100,000 parameters, I think.

(Attempting to overestimate)

Idk.
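The parameter arithmetic in this comment can be checked quickly. The layer widths passed to `mlp_params` are hypothetical, only to show how fast the count grows:

```python
def dense_params(n_in, n_out, bias=True):
    """Parameters of one fully connected layer: weights plus optional biases."""
    return n_in * n_out + (n_out if bias else 0)


def mlp_params(widths):
    # Sum over consecutive layer pairs, e.g. [20, 64, 64, 20].
    return sum(dense_params(a, b) for a, b in zip(widths, widths[1:]))


print(dense_params(20, 20, bias=False))  # 400, the figure in the comment
print(dense_params(20, 20))              # 420 once biases are counted
print(mlp_params([20, 64, 64, 20]))      # 6804 for a small hypothetical MLP
print(2000 * 4 * 2 * 2 * 3)              # 96000, just under 100,000
```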

This was amazing!

Impact Maximization via Hebbian Learning is an approach for AGI and ASI. The approach posits 3 main points:

1) Making an impact is the objective function of all life forms, and so the objective function of AGI is to maximise impact. Living things have more impact potential than non-living things, and highly intelligent beings have even more potential for impact. Anything we do is a kind of impact, whether it is self-preservation, procreation, meme propagation, DDAO, or other derived objectives, and so maximising impact is what an AGI system should do.

2) Impact maximization can happen this way: if an agent relaxes (suspends output action) while perceiving something impactful, and acts when it perceives a lack of impact/novelty/interesting things so as to bring about a change in the environment, it will be more efficient at maximising impact than a dumb agent acting randomly. Also, impact is a very subjective concept: what is impact for one may not be for someone else, and what is impactful for you today may not be so tomorrow. Hence we can't have a solid mathematical framework for impact based on a measure of activity, entropy, or anything else. The Hebbian rule automatically results in impact-maximising behaviour: when presented with the same input again and again (usually associated with a lack of activity or impact), the same pathways get excited, leading to a quicker flow of activation to the output region, whereas when something new is perceived, activation travels through a newer pathway and hence takes longer to reach the output, temporarily suspending output action. So, conversely, it is not an impactful input that makes the system relax; rather, what makes the system relax is what is considered impactful for the system at that moment, and vice versa.

3) Emergence of various modules and of useful, complex, intelligent information processing and behavior in a large neural network, using just a variation of the Hebbian rule for synaptic weight updates, in addition to formulas/rules for updating the learning rate, threshold, activation loss, neurogenesis, and death. People talk about evolution and the formation of regions taking millions of years, and so we would need the same timeframe for this approach, but that's wrong on two counts. a) We can start with 30 billion neurons and 150 trillion synapses on day 1 (which didn't happen in nature), and on day 1 it's an impact-maximising system with just the Hebbian rule. b) The formation of different regions via evolution is not an accidental change that became permanent; the regions were shaped by biological rules such as Hebbian and other homeostatic factors. So no random/accidental component is required here to form the different regions in the brain: just based on what it's exposed to, the brain region can form many different associations, representations, and other desired qualities such as remembering, abstraction, etc., as demonstrated here.
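The core mechanism in point 2, pathways strengthening when the same input is presented repeatedly, is the plain Hebbian rule Δw = η · post · pre. The sketch below shows only that rule on a hypothetical one-neuron, two-input network; it does not implement the impact-maximization proposal itself.

```python
def forward(w, x):
    # Linear response of each output neuron to input vector x.
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def hebbian_update(w, pre, post, lr=0.1):
    """Plain Hebbian rule: w[i][j] += lr * post[i] * pre[j]."""
    return [[w[i][j] + lr * post[i] * pre[j] for j in range(len(pre))]
            for i in range(len(post))]


if __name__ == "__main__":
    w = [[0.1, 0.1]]          # one output neuron, two input pathways
    familiar = [1.0, 0.0]     # the same input, presented over and over
    for _ in range(10):
        w = hebbian_update(w, familiar, forward(w, familiar))
    # The repeatedly used pathway has grown; the unused one is unchanged.
    print(w, forward(w, familiar), forward(w, [0.0, 1.0]))
```

Plain Hebbian growth is unbounded (here the used weight grows by 10% per presentation), which is why practical variants add normalization, such as Oja's rule, or homeostatic factors like those the comment mentions.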

The approach involves building prototypes and incrementally adding input and output devices while increasing the size of the neural network to get to high levels of intelligence with the same underlying framework and learning rules.

superintelligencetoken.wordpress.com/2021/11/13/our-approach/

If you aim to minimize the information content of some random process, doesn’t this always lead to the trivial normal distribution, which has the maximal entropy among continuous distributions with a given variance?
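The maximum-entropy property of the normal distribution holds only at fixed variance; without that constraint, differential entropy grows without bound as the distribution widens. A quick comparison of three distributions with the same variance:

```python
import math


def gaussian_entropy(sigma):
    # Differential entropy (nats) of N(0, sigma^2).
    return 0.5 * math.log(2 * math.pi * math.e * sigma ** 2)


def uniform_entropy(sigma):
    # A uniform distribution of width sigma * sqrt(12) has variance sigma^2.
    return math.log(sigma * math.sqrt(12))


def laplace_entropy(sigma):
    # A Laplace distribution with scale b = sigma / sqrt(2) has variance sigma^2.
    return 1 + math.log(2 * sigma / math.sqrt(2))


if __name__ == "__main__":
    for name, f in [("gaussian", gaussian_entropy), ("laplace", laplace_entropy),
                    ("uniform", uniform_entropy)]:
        print(name, round(f(1.0), 4))
```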

Also the latent variable doesn’t seem to be causally distilling the relation between x and y.

Is it a lifelong training concept? The assessment of such a system would be quite time consuming. I’m just speculating.

Oh this seems like a really cool architecture.

Looking forward to actually seeing it in action. Somebody is certainly gonna try.

Imo contrastive losses still make sense because they seem to be the only way to train a concept of "not". Like, they will give clear examples of what isn't the case, rather than just telling the model what is. Kinda difficult to do this without sampling negative examples in this way I think...

Combining both approaches is perhaps the way to go. Perhaps it would be possible to regulate how negative samples are picked with those same methods:

Make sure the examples mutually have low information (so they are very diverse, with a broad, flat distribution), although biasing towards edge cases (i.e., samples that the model tends to claim are similar when they are not) is perhaps also a good idea.
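Contrastive training with negatives, plus the suggested bias towards edge cases, can be sketched as an InfoNCE-style loss with hard-negative selection. The dot-product similarity, the temperature of 0.1, and the helper names are assumptions; the "mutually diverse" criterion from the comment is not implemented.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def info_nce(anchor, positive, negatives, temperature=0.1):
    """Negative log-probability of the positive among positive + negatives."""
    logits = [dot(anchor, positive) / temperature]
    logits += [dot(anchor, n) / temperature for n in negatives]
    m = max(logits)  # subtract the max for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(v - m) for v in logits))
    return log_sum_exp - logits[0]


def hardest_negatives(anchor, pool, k):
    """Pick the k candidates the model currently scores as most similar to the anchor."""
    return sorted(pool, key=lambda n: dot(anchor, n), reverse=True)[:k]


if __name__ == "__main__":
    anchor, positive = [1.0, 0.0], [1.0, 0.0]
    pool = [[0.0, 1.0], [-1.0, 0.0], [0.9, 0.1], [0.0, -1.0]]
    hard = hardest_negatives(anchor, pool, 2)
    print(hard, info_nce(anchor, positive, hard))
```

Negatives the model already scores as similar to the anchor produce a much larger loss (and gradient) than easy ones, which is the usual argument for hard-negative mining.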

Either way, contrastive loss or no, I'm looking forward to this in the wild

Agreed.

It kind of seems to me that the way you describe what the samples should be is similar to how good jokes are made.

Good jokes are close to reality, yet there is a punchline that sets them in juxtaposition with the mundane, or in other words with the world model we have. Good jokes are often just slightly outside of what we see as a correct representation of the world.

Did Yann try to train this architecture, or was it a purely theoretical exercise?

Purely theoretical, a thought experiment on how a self motivated AGI might be constructed.

AGI might be dangerous, and lab safety should be held at high standards.

🙏

TBH, the idea of the world model is not new, but how to train this model is definitely an issue. Well, got to say, it is Yann LeCun, a man who always tries to do something to catch people's eyes.

Odd tangent: "Maximizing the information of the encoded sy" isn't the only option for a regularizer on the encoders :0 For worlds that follow rules and compositionality - instead of maximizing *information* , one might seek representations 'sy' with dynamics which can be collapsed into a symbolic formula, such that timesteps in the world-model can be unrolled according to those discovered rules operating on the *latent* space vectors. Find a "physics" for the latent space dynamics, and you have a shorthand to use for rapidly searching the best actions. (if xt is the world-model at time t, we want to reward a latent space that makes x(t+1) follow from a simple set of operators on xt in that /latent/ space.)
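The "physics for the latent space" idea can be sketched as a penalty on how poorly a simple rule predicts the next latent state. Here the rule is assumed to be diagonal and linear, s[t+1][k] ≈ a_k · s[t][k], fitted by least squares; a real version would backpropagate this residual into the encoder, which is not shown.

```python
def fit_diagonal_dynamics(traj):
    """Least-squares a_k per dimension so that s[t+1][k] ~ a_k * s[t][k]."""
    d = len(traj[0])
    coeffs = []
    for k in range(d):
        num = sum(traj[t][k] * traj[t + 1][k] for t in range(len(traj) - 1))
        den = sum(traj[t][k] ** 2 for t in range(len(traj) - 1))
        coeffs.append(num / den if den > 0 else 0.0)
    return coeffs


def dynamics_residual(traj):
    """Mean squared one-step error of the fitted rule: a candidate regularizer."""
    a = fit_diagonal_dynamics(traj)
    errs = [sum((traj[t + 1][k] - a[k] * traj[t][k]) ** 2 for k in range(len(a)))
            for t in range(len(traj) - 1)]
    return sum(errs) / len(errs)


if __name__ == "__main__":
    lawful = [[0.9 ** t, 2.0 * 0.5 ** t] for t in range(10)]
    erratic = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0], [0.0, 1.0]]
    print(dynamics_residual(lawful), dynamics_residual(erratic))
```

A latent trajectory that follows a simple rule scores near zero, while one that no rule in the family explains scores high.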

Aren't such symbolic representations simply ones with very high information?

Like, without friction, relativistic, or quantum effects, it takes like a handful of symbols to perfectly describe the motion of any possible configuration of particles. It's incredibly high information content. Arguably infinite information as no uncertainty at all remains.

Relativity adds more symmetries which also means extra information

And quantum mechanics adds more symmetries as well but introduces a fundamental level of uncertainty.

And if you add gravity to quantum mechanics, we don't yet have the right symbolic representation but either way, all of that is the very peak of information density that we managed to date.

And we aim to increase it even further

lol @ energy inference for the trolley problem

Very good idea! Use human-like models (GPT-3) and some hard-coded stuff for the critic or configurator, and the robot will be human-like.

This is not related to LeCun's model and probably won't be a very reliable or flexible layout for a general actor, but I found this approach from a DIY-minded individual quite interesting: ua-cam.com/video/F83aTGuNyrM/v-deo.html

wow

I like to imagine, that I'm really good at prompt design, but that's probably just my natural survival instinct. 😂

Lol indeed it escalated quickly

Mesa-optimization and AI alignment?

54:30 oh yes you can. At some scale, GPT can tell you how ;)

43:00 covariance loss is sorta contrastive loss over batch. Without it, JEPA must collapse.

YO DAWG I HERD YOU LIKE MODE COLLAPSE SO WE PUT A MODE COLLAPSE IN YOUR MODE COLLAPSE SO U CAN MODE COLLAPSE WHILE U MODE COLLAPSE

This paper is spot-on. Correct solutions to the greatest philosophical questions. Self-regulated training will embody empathy, create social bonds, and allow value systems to propagate throughout a language model's latent space. Making the training environment more humanlike will make it easier to teach AI but also easier to misuse AI.

00:04 JEPA is a new architecture proposed by Yann LeCun for autonomous machine intelligence.

02:27 JEPA proposes predictive world models for hierarchical planning under uncertainty.

07:10 The actor module in autonomous machine intelligence acts on perception without considering the cost.

09:36 Using gradient descent, we can optimize the actions in real-time to improve the reward.

14:13 Using a planning and optimization process, actions can be improved to reach optimal performance

16:37 Create an energy function to measure how well two things fit together

21:06 The variable z is introduced to capture information about the continuation of the trolley car problem.

23:13 Latent variable energy-based models capture unobserved structure in the world.

27:40 Three architectures discussed: Predictive, Autoencoder, and Joint Embedding

29:46 Designing loss to prevent collapse and formulating as an energy-based model

34:05 Contrastive training and regularized methods are two approaches for training autonomous machine intelligence.

36:18 Using deterministic encoders and a predictor to map latent representations of x to y

40:33 Regularizing the latent representation helps prevent collapse

42:49 The framework aims to minimize the information content of the latent variable and maximize the information content of the encoded signals.

47:16 Hierarchical planning using a multi-layer predictive model in latent space.

49:20 Planning and optimizing high and low-level actions to achieve goals.

53:41 Machine emotions can emerge from intrinsic cost or anticipation of outcomes from a trainable critic, leading to machine common sense.

55:52 The paper proposes using energy-based models for planning and inference in autonomous machine intelligence.

Crafted by Merlin AI.
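The planning step summarized at 09:36 and 14:13 (Mode 2 in the paper) amounts to gradient descent on the action sequence through a differentiable world model. A minimal sketch, assuming a hypothetical one-dimensional world model s[t+1] = s[t] + tanh(a[t]), a cost of squared distance to a goal plus a small action penalty, and hand-written backpropagation:

```python
import math


def rollout(s0, actions):
    """Hypothetical differentiable world model: s[t+1] = s[t] + tanh(a[t])."""
    states = [s0]
    for a in actions:
        states.append(states[-1] + math.tanh(a))
    return states


def plan(s0, goal, horizon=4, lam=0.01, lr=0.1, steps=500):
    """Mode-2-style planning: gradient descent on the action sequence itself."""
    actions = [0.0] * horizon
    for _ in range(steps):
        s_final = rollout(s0, actions)[-1]
        # Cost = (s_T - goal)^2 + lam * sum(a^2). Because ds[t+1]/ds[t] = 1,
        # the gradient reaching every step equals dCost/ds_T.
        d_final = 2.0 * (s_final - goal)
        grads = [d_final * (1.0 - math.tanh(a) ** 2) + 2.0 * lam * a
                 for a in actions]
        actions = [a - lr * g for a, g in zip(actions, grads)]
    return actions


if __name__ == "__main__":
    acts = plan(0.0, 2.0)
    print([round(a, 3) for a in acts], round(rollout(0.0, acts)[-1], 3))
```

The tanh caps each step at one unit, so a goal farther than `horizon` units away is approached but never reached; the optimizer settles where the cost gradient balances the action penalty.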

This is getting closer and closer to real AI.

I'm guessing you mean like AGI?

@@codiepetersen8198 One doesn't need to create a brain to have a machine that is similarly effective and efficient

LeCun is stuck in the 90s.

This paper comes just after the LaMDA debacle 😂😂😂

Go see Joscha Bach for a much more precise theory.

It's a Teletubby! First thing that came to my mind as well lolol

A remain question is whether the type of reasoning proposed here can encompass all forms of reasoning that humans and animals are capable of... "and that certainly is the case" - Good joke, it's not even a form of reasoning! :)

These papers never show proof that their method works. This is like creepypasta.

Bitch now you can read I-JEPA

Are humans really all that efficient when we've got billions of years of evolution "data" stored in our genes? Also, humans aren't really unsupervised for the first however many years of our lives. Those are just some thoughts and perspectives; take what you will.

Well, so far yeah. Most efficient. So far.

Babies do a ton of unsupervised learning, playing with blocks you can watch them investigate the causal laws of physics and building a world model. Evolution doesn't instill actual useful data in our genes except for some animalistic essentials, and priors that guide the learning process throughout life afaik. The vast, vast majority of knowledge that you have was not stored in your genes.

@@joshuasmith2450 YES! But there's "wisdom" in time. The 1% are very important.

2-meter steel ants who read the internet and have high-level intelligence, what could possibly go wrong?

Better stick to Hafner's DayDreamer. JEPA just seems to copy a lot of ideas from it.

It's pronounced, "Yan Lickoon".

JEPA is ASS in Russian. Can you imagine the faces of Russians who opened the video and read the title?

Unfortunately, AFAIK this paper states the obvious and doesn't contribute anything meaningful. Any follower of the subject matter knows this, and knows the problem is hardware, and we need hardware to compute it dynamically and quickly. I mean, Hawkins and Numenta made this obvious a few years ago. I've been teaching it for three years to my team.

I don't do anything.

How should I put this to be inflammatory...

If you're going to publish a paper about a route to general intelligence, you should exhibit much more interest in where the hell the cost function is supposed to come from. Just saying "first we're going to build a generally intelligent AI, and figuring out how to get it to do good things rather than bad things is future me's problem" is irresponsible.

It is neither responsible nor irresponsible, just simply the constant forward march of science.

@@nootgourd3452 In the style of OP, I'll make this as inflammatory as possible:

Something tells me you wouldn't apply this level of laissez-faire dismissiveness if we were talking about gain-of-function research.

The wellbeing of humanity matters more than bowing down to a vague sense that "we can pursue this research, so we must".

It will do what it's trained to do, nothing more.

@@rowannadon7668 Search up "List of specification gaming examples".

Time and time again we find that if you incentivise an agent to maximise a reward it discovers degenerate strategies.

Look up the paper: "The Surprising Creativity of Digital Evolution: A Collection of Anecdotes"

Again we see strong pressure to maximise an objective leading to undesired solutions, many so bizarre that you can only patch the issue in hindsight. We're talking rowhammer levels of crazy.

The lesson is clear. No, you will not be able to stop a system under strong optimisation pressure from exploiting clever loopholes and doing things you don't intend. Yet somehow more intelligent autonomous agents with stronger predictive abilities will be even easier to constrain?

@@danielalorbi 'degenerate strategies' reveal creativity of the ai and a lack of foresight of its creators.

The way you say Yan LeCun is annoying

It looks like a confused stab at my salience theory of dynamic cognition and consciousness.

Thankfully I presented what I think is the correct approach over 11 years ago.

The major flaw with JEPA is that, like many of these models, for whatever reason it lacks the critical ability to kick the current convergence of a prediction (action) into a new domain, because the prediction does not interact with an emotional/autonomic subunit.

What he calls the actor, I called "comparison": this is where you take an internal understanding of what the event being experienced is and make a suggestion as to how to optimize for a prediction given that limited intermediate state. It's very much like what they describe here, but critically, the actor lacks the necessary depth in the module, since it has no emotional factor.

If you haven't heard of the salience theory read here:

sent2null.blogspot.com/2013/11/salience-theory-of-dynamic-cognition.html

Is your model differentiable?

@@codiepetersen8198 I didn’t say it “has to be” differentiable. But this paper has, as an explicit goal, differentiability. Therefore, if someone claims to have achieved the same thing 10 years ago, but what they did isn’t differentiable, then they did not accomplish a particular thing that this paper set out to (and did) accomplish, which would (hypothetically, if their model isn’t differentiable) refute the claim that what was done 10 years ago achieved everything that this paper did.

(Also, the differentiable methods have made much possible that people don’t know how to accomplish otherwise, so I don’t see how you justify pooh-pooh-ing it.)

@@codiepetersen8198 math is just what you get when you start being sufficiently precise with your abstractions. Complaining that a model is too mathematical is just complaining that it is too carefully/precisely defined.

@@codiepetersen8198 if we want to run this artificial intelligence on a computer it better be able to be described as a mathematical model lol

@@codiepetersen8198 I’m aware of clustering methods, the general concept of graphs (though I imagine you mean something more specific than “the entire topic of graph theory”. Maybe something about spectral graph theory? Ah, but that seems like it would be used for things like graph NNs, which would be too differentiable for your tastes.. unless you were using it to do clustering on sets of graphs?), the idea of spiking NNs, and other things other than the common differentiable NN stuff.

I was responding generally to the complaint about “thinking that everything has to be a math function” (quote is from memory and may be misremembered).

Those things you mentioned are all also math.