Vision Transformer and its Applications

- Published 27 May 2024

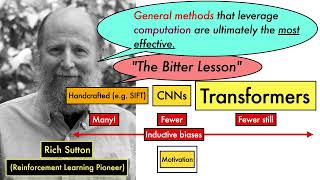

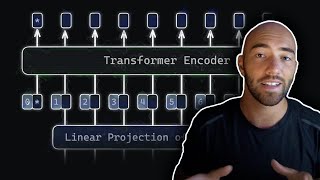

The vision transformer is a recent breakthrough in computer vision. While transformer-based models have dominated natural language processing since 2017, CNN-based models are still demonstrating state-of-the-art performance in vision problems. Last year, a group of researchers from Google figured out how to make a transformer work on recognition. They called it the "vision transformer". Follow-up work by the community demonstrated superior performance of vision transformers not only in recognition but also in other downstream tasks such as detection, segmentation, multi-modal learning and scene text recognition, to mention a few.

In this talk, Rowel Atienza will go into a deeper understanding of the model architecture of vision transformers. Most importantly, Rowel will focus on the concept of self-attention and its role in vision. Then, he will present different model implementations utilizing the vision transformer as the main backbone.

Since self-attention can be applied beyond transformers, Rowel Atienza will also discuss a promising direction in building general-purpose model architectures, in particular networks that can process a variety of data formats such as text, audio, image and video.

→ To watch more videos like this, visit aiplus.training ←

Do You Like This Video? Share Your Thoughts in Comments Below

You can also visit our website and choose the nearest ODSC event to attend and experience all our trainings and workshops:

odsc.com/california/

odsc.com/apac/

Sign up for the newsletter to stay up to date with the latest trends in data science: opendatascience.com/newsletter/

Follow Us Online!

• Facebook: / opendatasci

• Instagram: / odsc

• Blog: opendatascience.com/

• LinkedIn: / open-data-science

• Twitter: / odsc - Science and Technology

Very very very nice explanation!!! I like learning the foundation/origin of the concepts where models are derived..

This video provides a clear step by step explanation how to get from images to input features for Transformer encoders, which has proven hard to find anywhere else.

Thank you.

10:46 - this is a mistake; the convolution is not equivariant to scaling - if the bird is scaled, the output of the convolution will not be simply a scaling of the original output. That would only be true if you also rescale the filters.

Super helpful. Was very lost on the process from image patch to embedded vector until I watched this.
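For readers following the same step, here is a minimal NumPy sketch of how an image could be turned into the input sequence of a transformer encoder. It is an illustration under stated assumptions, not the code from the talk: the 224×224×3 input, the 16×16 patch size, the embedding width of 768, and the names `patchify`, `projection`, `cls_token` and `pos_embed` are all chosen here for illustration, and the weights are random placeholders where a real model would use learned parameters.

```python
import numpy as np


def patchify(image, patch_size):
    """Split an (H, W, C) image into flattened, non-overlapping patches.

    Returns an array of shape (num_patches, patch_size * patch_size * C),
    with patches ordered row by row across the image.
    """
    h, w, c = image.shape
    if h % patch_size or w % patch_size:
        raise ValueError("image size must be divisible by patch size")
    gh, gw = h // patch_size, w // patch_size
    # (gh, p, gw, p, c) -> (gh, gw, p, p, c) -> (gh * gw, p * p * c)
    patches = image.reshape(gh, patch_size, gw, patch_size, c)
    patches = patches.transpose(0, 2, 1, 3, 4)
    return patches.reshape(gh * gw, patch_size * patch_size * c)


rng = np.random.default_rng(0)
image = rng.random((224, 224, 3))      # assumed input size
embed_dim = 768                        # assumed embedding width

tokens = patchify(image, 16)           # shape (196, 768)

# Linear projection of each flattened patch (random stand-in for learned weights).
projection = rng.normal(scale=0.02, size=(tokens.shape[1], embed_dim))
embeddings = tokens @ projection       # shape (196, 768)

# Prepend a class token and add position embeddings (placeholders here).
cls_token = np.zeros((1, embed_dim))
pos_embed = rng.normal(scale=0.02, size=(tokens.shape[0] + 1, embed_dim))
encoder_input = np.concatenate([cls_token, embeddings], axis=0) + pos_embed

print(encoder_input.shape)             # (197, 768)
```

Each row of `encoder_input` is one token: the class token first, then one embedded vector per image patch.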

amazing lecture, thank you sir!

20:17 I think the encoder blocks are stacked in parallel fashion rather than sequential?

Do you have a video about BEiT or DINO?

You said 196 patches for ImageNet data. The number of patches depends on the input image size and the patch size. For example, if the input image is 400×400 and the patch size is 8×8, then the number of patches is (400×400)/(8×8) = 50×50 = 2500.
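The arithmetic in this comment can be checked with a short sketch. The helper name `num_patches` is hypothetical, and the 224×224 input with 16×16 patches is an assumption about the setup that yields 196 patches; the 400×400 case with 8×8 patches is the commenter's own example.

```python
def num_patches(image_height, image_width, patch_size):
    """Count the non-overlapping square patches that tile an image."""
    if image_height % patch_size or image_width % patch_size:
        raise ValueError("image size must be divisible by patch size")
    return (image_height // patch_size) * (image_width // patch_size)


print(num_patches(224, 224, 16))  # 14 * 14 = 196
print(num_patches(400, 400, 8))   # 50 * 50 = 2500
```

So the count is a property of the chosen input resolution and patch size, not of the dataset itself.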

thanks for sharing , it was extremely helpful 💯

Thank you!

What exactly is happening in the self-attention and MLP blocks of the encoder module? Could you describe it in a simplistic way?

Thanks for sharing.

thanks for sharing

Great resource!

Thank you, sir

Are you the channel owner??

Thank you for making such a great video

Thank you for this genuine knowledge.

Fantastic Video! Really loved the detailed explanation step-by-step.

awesome

thankyou much clearer

Tax evader 🤮

🤮