Lenka Zdeborová - Statistical Physics of Machine Learning (May 1, 2024)


- Published May 6, 2024

- Machine learning provides an invaluable toolbox for the natural sciences, but it also comes with many open questions that the theoretical branches of the natural sciences can investigate.

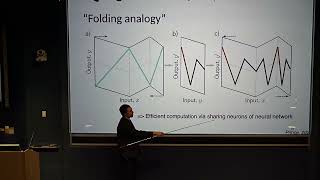

In this Presidential Lecture, Lenka Zdeborová will describe recent trends and progress in exploring questions surrounding machine learning. She will discuss how diffusion or flow-based generative models sample (or fail to sample) challenging probability distributions. She will present a toy model of dot-product attention that exhibits a phase transition between positional and semantic learning. She will also revisit some classical methods for estimating uncertainty and their status in the context of modern overparameterized neural networks. More details: www.simonsfoundation.org/even... - Science and Technology

wow, if all teachers explain things like her, complexities are simplified.

Excellent talk. Love the connections and insights.

Fantastic lecture! Very clear and well structured! Thank you, diky🇨🇿!

As a physicist, but non-expert in AI, viewer: very interesting insights. Over-parameterization (size) "compensates" for a sub-optimal algorithm. It is also non-trivial that it doesn't lead to getting stuck fitting the noise. Organic neural brains (human or animal) obviously don't need so much data, and are also actually not that large in number of parameters (if I am not mistaken). So for sure there is room for improvement in the algorithm and structure, which is exactly her direction of research. A success there would be very impactful.

FWIW, if you consider a brain's neurons as analogs to neurons in an ANN, then the human brain, at least, has more complexity by far. Geoffrey Hinton points out that the mechanism of backprop (chain rule) to adjust parameters is more efficient by far than biological organisms in its ability to store patterns.

That efficiency is what worries him and also points to a need for a definition of sentience arising under different learning mechanisms than our own.

You will be a good mother please make many babies 😊😊😊

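To understand the black box inside machine learning, we will have to rely on more advanced AI: a more advanced AI would in turn analyze the black box and decipher its mechanisms. Understanding the black box's inner workings through human effort alone, as is attempted now, is impossible.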

huh?