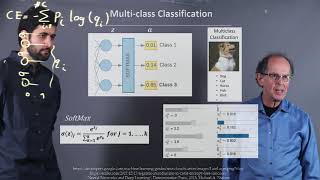

Intuitively Understanding the Cross Entropy Loss

- Published 3 Jul 2021

- This video discusses the Cross Entropy Loss and provides an intuitive interpretation of the loss function through a simple classification setup. The video draws the connections between the KL divergence and the cross entropy loss, and touches on some practical considerations.

Twitter: / adianliusie

This is the only video that's made the connection between KL Divergence and Cross Entropy Loss intuitive for me. Thank you so much!

This subject has confused me greatly for nearly a year now, your video and the kl-divergence video made it clear as day. You taught it so well I feel like a toddler could understand this subject.

Great video. It is always good to dive into the math to understand why we use what we use. Loved it!

Super useful and insightful video which easily connects KL-divergence and Cross Entropy Loss. Brilliant! Thank you!

Fantastic video and explanation. I just learned about the KL divergence and the cross entropy loss finally makes sense to me.

The best explanation I have ever seen about Cross Entropy Loss. Thank you so much 💖

Excellent expositions on KL divergence and Cross Entropy loss within 15 mins! Really intuitive. Thanks for sharing.

Great explanation! I’m enjoying all of your “intuitively understanding” videos.

This one I would say is a very nice explanation of Cross Entropy Loss.

This is an amazing explanatory video on Cross-Entropy loss. Thank you

I have no background in ML, and this plus your other video completely explained everything I needed to know. Thanks!

This video was amazing. Very clear! Please post more on ML / Probability topics. :D Cheers from Argentina.

Thanks a lot! Really helps me understand Cross Entropy, Softmax and the relation between them.

The clearest explanation. Thank you.

I love this video!! So clear and informative!

What a great and simple explanation of the topic! Great work 👏

It is an excellent explanation that makes good use of the previous video on KL divergence.

Great and clear explanation!

Such a clear explanation! Thanks!

And no one told me that minimizing the KL divergence is almost equivalent to minimizing the cross-entropy loss in 2 years of studying at a university... Oh man... thank you so much...

KL = Cross Entropy - Entropy.
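The identity in this comment can be checked numerically. The snippet below is a minimal sketch, not something taken from the video: the two distributions are made-up illustrative values, and the helper names are my own.

```python
import math

def entropy(p):
    # H(p) = -sum_i p_i log p_i, skipping zero-probability terms
    return -sum(pi * math.log(pi) for pi in p if pi > 0)

def cross_entropy(p, q):
    # H(p, q) = -sum_i p_i log q_i
    return -sum(pi * math.log(qi) for pi, qi in zip(p, q) if pi > 0)

def kl_divergence(p, q):
    # KL(p || q) = sum_i p_i log(p_i / q_i)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Illustrative values only (assumed, not from the video)
p_true = [0.7, 0.2, 0.1]
q_pred = [0.5, 0.3, 0.2]

lhs = kl_divergence(p_true, q_pred)
rhs = cross_entropy(p_true, q_pred) - entropy(p_true)
print(lhs, rhs)  # the two numbers agree up to floating-point error
```

Because the entropy term depends only on the true distribution, it is a constant with respect to the prediction, which is why minimizing one quantity minimizes the other.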

really superb video, you should record more !

Simple and helpful!

Nice explanation, thank you.

This is so good, thx so much

Great video!

Thank you. You should have more subscribers.

Thanks for this video

great video, thank you!

Might be a stupid question but where do we get the "true" class distribution?

Real world data bro, from annotated samples.

Humans are the criterion for everything in so-called AI.

In a classification task, the true distribution has the value 1 for the correct class and 0 for the other classes. So that's it, that's the true distribution. And we know it, if the data is labelled correctly. The distribution in a classification task is called a probability mass function, btw.
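To make the answer above concrete, here is a small sketch of a one-hot "true" distribution built from a label. The class count, the label and the predicted probabilities are assumed for illustration, and `one_hot` is a hypothetical helper name.

```python
import math

def one_hot(label, num_classes):
    # 1 for the annotated class, 0 for every other class
    return [1.0 if k == label else 0.0 for k in range(num_classes)]

def cross_entropy(p, q):
    # H(p, q) = -sum_i p_i log q_i, skipping zero-probability targets
    return -sum(pi * math.log(qi) for pi, qi in zip(p, q) if pi > 0)

# Assumed example: 3 classes, the annotated class is index 1
p_true = one_hot(1, 3)
q_pred = [0.1, 0.7, 0.2]   # hypothetical model output

loss = cross_entropy(p_true, q_pred)
print(p_true)                      # [0.0, 1.0, 0.0]
print(loss, -math.log(q_pred[1]))  # both values are the same
```

With a one-hot target, only the term for the correct class survives, so the loss is just the negative log of the probability the model assigns to that class.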

Great! keep it up.

This is so neat.

Brilliant and simple! Could you make a video about soft/smooth labels instead of hard ones and how that makes it better (math behind it)?

Intuitively, information is lost whenever a continuous variable is discretized. Said another way, a class probability of 0.51 is very different from one of 0.99. Downstream, soft targets allow for more precise gradient updates.

superb!

Thank you

essence, short, great.

You the best!

Thanks for the great video. 1 question though. What happens if we swap the true and predicted probabilities in the formula?
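One way to explore this question is to compute both orderings directly. The sketch below is not the video author's answer; it assumes a one-hot true distribution and a made-up prediction, and treats a log of zero as an infinite loss.

```python
import math

def cross_entropy(target, pred):
    # H(target, pred) = -sum_i target_i log pred_i
    total = 0.0
    for t, q in zip(target, pred):
        if t == 0:
            continue            # 0 * log(q) is taken to be 0
        if q == 0:
            return math.inf     # t * log(0) diverges
        total -= t * math.log(q)
    return total

p_true = [0.0, 1.0, 0.0]   # one-hot label (assumed)
q_pred = [0.1, 0.7, 0.2]   # hypothetical model output

usual = cross_entropy(p_true, q_pred)     # finite: -log(0.7)
swapped = cross_entropy(q_pred, p_true)   # infinite: takes log of the zeros in p_true
print(usual, swapped)
```

Under these assumptions, the swapped form blows up whenever the model puts any probability on a class the one-hot label marks as 0, so it is not usable as a training loss in this setup. The two arguments are not interchangeable.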

Make more videos please, you are awesome.

How does the use of soft label distributions, instead of one-hot encoding hard labels, impact the choice of loss function in training models? Specifically, can cross-entropy loss still be effectively utilized, or should Kullback-Leibler (KL) divergence be preferred?
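A quick numerical experiment may help with this question. The sketch below assumes the model outputs a softmax over logits and that the soft label is fixed (not learned). It compares finite-difference gradients of the two losses. All values and helper names are illustrative.

```python
import math

def softmax(z):
    m = max(z)                              # subtract max for numerical stability
    exps = [math.exp(v - m) for v in z]
    s = sum(exps)
    return [e / s for e in exps]

def cross_entropy(p, q):
    return -sum(pi * math.log(qi) for pi, qi in zip(p, q) if pi > 0)

def kl_divergence(p, q):
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def numeric_grad(loss_fn, p, z, eps=1e-6):
    # Central finite differences of loss_fn(p, softmax(z)) with respect to each logit
    grad = []
    for i in range(len(z)):
        up = z[:i] + [z[i] + eps] + z[i + 1:]
        down = z[:i] + [z[i] - eps] + z[i + 1:]
        grad.append((loss_fn(p, softmax(up)) - loss_fn(p, softmax(down))) / (2 * eps))
    return grad

p_soft = [0.8, 0.15, 0.05]   # assumed soft label
logits = [1.0, 0.5, -0.5]    # assumed model logits

grad_ce = numeric_grad(cross_entropy, p_soft, logits)
grad_kl = numeric_grad(kl_divergence, p_soft, logits)
analytic = [q - p for q, p in zip(softmax(logits), p_soft)]   # softmax(z) - p
print(grad_ce, grad_kl, analytic)
```

Under these assumptions the two losses differ only by the entropy of the soft label, which does not depend on the logits, so their gradients match. Cross entropy therefore remains usable with soft labels. The reported loss value just no longer reaches zero at a perfect fit.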

At 3:25, why don't we model it as argmax Σ P* log P (without the minus sign)?

Great video, but only really clear if you know what the KL divergence is. I'd hammer that point to the viewer.

will the thing in 4:12 be negative if you use information entropy or KL divergence? are they both > 0?

As explained in the video, the KL divergence is a measure of "distance", so it has to be ≥ 0. There are other prerequisites for a function to be a true distance metric, like symmetry (which the KL divergence does not satisfy), and a couple of other things I forget about.
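The non-negativity discussed here is easy to probe numerically. The sketch below uses two made-up distributions and also checks whether the value changes when the arguments are swapped.

```python
import math

def kl_divergence(p, q):
    # KL(p || q) = sum_i p_i log(p_i / q_i), skipping zero-probability terms of p
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Illustrative distributions (assumed values)
p = [0.9, 0.1]
q = [0.5, 0.5]

kl_pq = kl_divergence(p, q)
kl_qp = kl_divergence(q, p)
kl_pp = kl_divergence(p, p)
print(kl_pq, kl_qp, kl_pp)
```

In this example both directions are positive but not equal, and the divergence of a distribution from itself is zero. So the KL divergence is non-negative, yet it is not symmetric, which is one reason it is usually called a divergence rather than a distance.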

Why don't we just use the mean of (p-q)^2 instead of p*log(p/q) to understand the dissimilarity of pdfs?
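One commonly cited difference, which may or may not be the answer the video's author would give, is how the two measures treat confident mistakes. The sketch below assumes a two-class one-hot target and a few made-up predictions.

```python
import math

def mean_squared_error(p, q):
    return sum((pi - qi) ** 2 for pi, qi in zip(p, q)) / len(p)

def kl_divergence(p, q):
    # KL(p || q), skipping zero-probability terms of p
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

p_true = [1.0, 0.0]   # assumed one-hot target: class 0 is correct

# Hypothetical predictions that are increasingly confident in the wrong class
predictions = [[0.5, 0.5], [0.1, 0.9], [0.001, 0.999]]

mse_values = [mean_squared_error(p_true, q) for q in predictions]
kl_values = [kl_divergence(p_true, q) for q in predictions]

for q, mse, kl in zip(predictions, mse_values, kl_values):
    print(q, round(mse, 4), round(kl, 4))
```

In this setup the squared error can never exceed 1, while the KL divergence keeps growing as the probability of the correct class approaches 0, so confidently wrong predictions are penalized much more heavily.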

distribution

What is the true class distribution?

the frequency of occurrence of a particular class depends on the characteristics of the objects

0:08

3:30

P* is true prob

3:24. The aha moment when you realize what the purpose of the negative sign in cross entropy is.

4:24. do you know how golden the statement is

hello, handsome could you share the clear slides?

Volume is low

Not really a great explanation; so many terms were thrown in. That's not a good way to explain something.

Speak slower please

Your name is commonsense and you still don't use your common sense, lol. In every YouTube application, there is the option to slow a video down to 75%, 50% or even 25% speed. If you have trouble understanding his language, you should just select the 0.75 speed option.

@Oliver-2103 Visually impaired people have problems seeing some of the adjustments one can make on a phone even when they know that they exist.