Adian Liusie

Joined 10 Jul 2020

Intuitively Understanding the Shannon Entropy

Views: 110,362

Videos

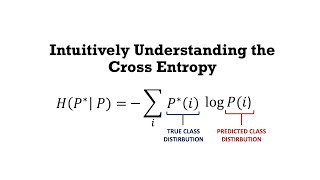

Intuitively Understanding the Cross Entropy Loss

96K views, 3 years ago

This video discusses the cross-entropy loss and provides an intuitive interpretation of the loss function through a simple classification setup. The video draws the connections between the KL divergence and the cross-entropy loss, and touches on some practical considerations. Twitter: AdianLiusie
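The connection between the cross entropy and the KL divergence mentioned in this description can be checked numerically. The sketch below is not taken from the video; the function names and the example distributions are assumptions chosen for illustration, and it assumes every model probability is strictly positive. It verifies the standard identity H(p, q) = H(p) + KL(p || q), which also means that for one-hot labels (where H(p) = 0) the cross entropy and the KL divergence coincide.

```python
import math

def entropy(p):
    """Shannon entropy H(p) in bits; zero-probability terms contribute 0."""
    return sum(pi * math.log2(1.0 / pi) for pi in p if pi > 0)

def cross_entropy(p, q):
    """Cross entropy H(p, q) in bits. Assumes q_i > 0 wherever p_i > 0."""
    return sum(pi * math.log2(1.0 / qi) for pi, qi in zip(p, q) if pi > 0)

def kl_divergence(p, q):
    """KL(p || q) in bits. Assumes q_i > 0 wherever p_i > 0."""
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Hypothetical three-class example: a one-hot label, a soft label, a model output.
p_true = [1.0, 0.0, 0.0]
p_soft = [0.8, 0.15, 0.05]
q_model = [0.7, 0.2, 0.1]

for name, p in [("one-hot", p_true), ("soft", p_soft)]:
    h = entropy(p)
    ce = cross_entropy(p, q_model)
    kl = kl_divergence(p, q_model)
    # The two columns on the right should agree up to floating-point error.
    print(f"{name}: H(p)={h:.4f}  H(p,q)={ce:.4f}  H(p)+KL={h + kl:.4f}")
```

Because H(p) does not depend on the model, minimising the cross entropy over q and minimising the KL divergence over q lead to the same optimum.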

Beginners Overview of Machine Learning and Artificial Intelligence

922 views, 3 years ago

This is a recorded talk which I created for my old school, Dubai College. This video is an introduction to artificial intelligence and machine learning for complete beginners; it gives the general idea of the field and an overview of the machine learning approach.

Understanding Neural Networks Training

863 views, 3 years ago

This video gives an overview of the general optimisation of neural networks and explains how training is done through loss minimisation. It provides a foundation for a deeper look at more complex practical algorithms, like stochastic gradient descent and backpropagation, which will be covered in future videos.

Intuitively Understanding the KL Divergence

96K views, 3 years ago

This video discusses the Kullback Leibler divergence and explains how it's a natural measure of distance between distributions. The video goes through a simple proof, which shows how with some basic maths, we can get under the KL divergence and intuitively understand what it's about.
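As a small companion to this description, the sketch below computes the KL divergence for two made-up binary distributions (the values of `p` and `q` and the function name are assumptions, not taken from the video). It illustrates three standard properties: the divergence is zero for identical distributions, positive otherwise, and not symmetric, which is why it is a "distance" only in a loose sense.

```python
import math

def kl_divergence(p, q):
    """KL(p || q) in bits. Assumes q_i > 0 wherever p_i > 0."""
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Hypothetical distributions over two outcomes.
p = [0.75, 0.25]
q = [0.5, 0.5]

same = kl_divergence(p, p)      # identical distributions
forward = kl_divergence(p, q)   # KL(p || q)
reverse = kl_divergence(q, p)   # KL(q || p)

print(f"KL(p||p) = {same:.4f}")
print(f"KL(p||q) = {forward:.4f}")
print(f"KL(q||p) = {reverse:.4f}")
```

The `||` in KL(p || q) is just notation separating the two arguments; the order matters because swapping them generally changes the value.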

Understanding deep neural networks

891 views, 3 years ago

This video gives a basic introduction to neural networks and discusses what they are, how they work, and ways to see the system in a matrix framework. This is the first video in a series which will ultimately build up to programming neural networks and running the backpropagation algorithm from scratch in Python.

THE GOAT

I would give my life for you, fam. Thanks.

Thank you sir! After watching so many videos, reading articles and talking with chatgpt, this explanation made sense to me and will remain with me forever. Grateful 🙏

Kitty was here

Thanks

Thanks!

Here is my thought process on why the Shannon Entropy formula makes sense. Hope it helps some of you. Also, if someone wants to use this explanation anywhere, like a blog post etc., please go ahead. No credit necessary.

1) Let’s say that X = “person x has access to site” is a random variable (RV), where P(X = yes) = 0.75 and P(X = no) = 0.25. Then why does it make sense that Entropy(X) = - 0.75 * log2(0.75) - 0.25 * log2(0.25) = 0.75 * log2(1 / 0.75) + 0.25 * log2(1 / 0.25)?

2) Well, entropy(X) = average_surprise(X), right? Think about it: entropy is something uncontrollable, something NOT known in advance. Put (maybe even too) simply: entropy IS surprise, and to quantify entropy, we must quantify how surprised we are by the news of whether someone, let's say Amy, has been granted access to a site.

3) Average_surprise(X) = average_information_contained_in_distribution(X). This should be intuitive. The information that we need to transmit the result of an event with probability 1 is 0, as we already know it will happen. Yes, there is information in the fact that we know, but there is no information to be extracted FROM THE RESULT of a deterministic probabilistic process. The same logic works continuously: the more average information there is to be gained from the results of a probabilistic process, the more surprise it has embedded in it.

4) By combining the results (2) and (3), we get that Entropy(“person x has access to site”) = average_information_contained_in_distribution(X).

5) But wait, what is this information you speak of? Surely it isn't a mathematical object. It isn't a number. So, how can we even calculate it? Well, P(X = yes) = 0.75 means that with probability = 0.75, we can know the result of both X = yes (happened or didn’t) and X = no (happened or didn’t). We can quantify this GAIN IN INFORMATION from a piece of news in the following way.

6) When we get the news that Amy indeed got access (p = 0.75), we get information worth 1.0 units. What are the units? Well, they are information. We don’t have a unit like Hz or Amps for it. So it’s just units of information (gained).

7) But why is the information worth exactly 1.0 units? Well, we need to have some measure for information. You can’t measure 1 cm without first deciding that “this distance is 1 cm”. So, because we need a measure, it makes sense that the information of the event that actually occurred would be the measuring stick of 1.0 units, because we are going to be using it in trying to solve whether this event was probable or not. The other events have relative magnitudes w.r.t. the event that happened. By using these relative magnitudes, we can start to reason about whether we should weigh this outcome as having high entropy = high surprise, when it happens.

8) Okay, now we know the information gained on the event X = yes. It is 1.0 units, as agreed. But we also now know that the event X = no has NOT happened. This is also information. It must be "worth" something. But how do we quantify it?

9) Quantifying the information gained from events that have NOT happened is simple now that we have a measuring stick for what 1 unit of information is. We can use this measuring stick to calculate how much an event that has NOT happened is “worth” based on ITS own probability.

10) We now know that p = 0.75 is worth 1 unit of information. Then, p = 0.25, corresponding to X = no, is only worth 0.25 / 0.75 = 0.33 units. In total, the news has given us 1.33 units of information.

11) Notice a pattern that explains WHY this approach of comparing event probabilities with the event that happened makes sense: if the event that happens has high probability, the unit of information has “higher standards”. It doesn’t accept any lower probability events as having high information.

12) More generally, the probability of the event that happens “controls” how large the information gained from all its counter events is. Similarly, if a low-probability event happens, it will make the information gained from this event larger by lowering the bar for a unit of information gained. This corresponds to the intuitive idea we humans have while reasoning about this: "a low probability event has happened, so it must mean that the information gained from this event is larger”, and vice versa. The ratios of the probabilities make the magic work, so it is no coincidence that the Shannon formula has them. Namely, 1 / p. (Please only consider the formula without the minus sign, which has log(1 / p) and not just log(p). The minus sign is just there to make the formula more concise. In reality, everything we are talking about makes much more sense without the minus sign.)

13) If you’ve made it this far, congrats. We are almost there. But just to make sure we are on the same page, let’s reiterate what the number 1.33 is telling us. It is telling us the “number” of different "event units" we have gained information on after hearing the news, where the unit of one full event/outcome corresponds to the probability of the outcome that actually happened. (Side quest: For simplicity, you can also pick probabilities that give integer event units. For example, let’s say our probability_distribution = [0.25, 0.25, 0.5]. If p = 0.25 happens, we gain info on 1 + 1 + 2 = 4 event units, where having p = 0.25 is the measure of 1 event unit. As discussed in (6), it can also be understood as the information unit, i.e. surprise unit, i.e. the reciprocal of the event’s probability, i.e. 1 / p.)

14) As we discussed earlier, Entropy(X) = average_information_contained_in(X) = expected_information_gained_from_knowing(X). This we have already calculated for X = yes (1.33). Do the same process for X = no and we get 4. (Left as an exercise to the reader. Note: if you can't do it, you haven't understood the most important point.)

15) Now we are ready to tie it all together. Notice the log(1 / p) in the entropy formula? That’s just our 1.33 and 4 with log wrappers (1 / 0.75 = 1.33 and 1 / 0.25 = 4). (Side quest: The Log. The reason we use log, originally log2, is because The Great Shannon wanted everything to be measured in bits. That makes sense because he was a computer scientist working on quantifying the information of (binary-encoded) messages. And to be fair, it is quite neat to have a binary interpretation for the total information in a system (the expected number of bits required to represent the result of its corresponding RV). The log also makes entropy additive (this is super useful in ML, even if we use nats or some other base, which obviously doesn’t have a bit interpretation). The bit interpretation doesn't really mean anything in the larger context of information anyway. It’s just one way to ENCODE information, but it itself is not information and we can only use the number of bits as a measuring stick for information. Any other measuring stick works just as well, at least in theory. For humans, bits are a friend.)

16) So, 1 / 0.75 = 1.33 and 1 / 0.25 = 4. That is exactly what we calculated with our intuitive method. That's because we used the exact same method as the formula. The numerator is the measuring stick. It is the 1.0 units. To drive the point home, note that 1 / p = 1 + (1 - p) / p. The (1 - p) / p part is what calculates the information units gained from leftover events (0.33 when the event that happened is X = yes), and 1 is the information gained from the event that actually happened.

17) Now we are at the finish line. We just need to quantify the EXPECTED information required to encode something in bits. This is easy, if you understand expected values, which I expect you do, because you’ve made it this far.

18) Entropy(X) = 0.75 * log2(1 / 0.75) + 0.25 * log2(1 / 0.25) = - 0.75 * log2(0.75) - 0.25 * log2(0.25)
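The arithmetic in the comment above can be reproduced in a few lines. This is only a sketch for checking the numbers; the helper names `surprise_units` and `entropy_bits` are made up for illustration and do not come from the comment or the video. It confirms the split 1 / p = 1 + (1 - p) / p for both outcomes and then evaluates the entropy formula from step 18.

```python
import math

def surprise_units(p):
    """The 'event units' from the comment: the reciprocal 1 / p."""
    return 1.0 / p

def entropy_bits(dist):
    """Shannon entropy in bits: sum of p * log2(1 / p) over outcomes."""
    return sum(p * math.log2(1.0 / p) for p in dist if p > 0)

# The access example from the comment: P(yes) = 0.75, P(no) = 0.25.
access = {"yes": 0.75, "no": 0.25}

for outcome, p in access.items():
    own = 1.0                    # the measuring stick: the event that happened
    leftover = (1.0 - p) / p     # units gained about the events that did not happen
    assert math.isclose(surprise_units(p), own + leftover)
    print(f"X = {outcome}: 1/p = {surprise_units(p):.2f} "
          f"(1 + {leftover:.2f}), log2(1/p) = {math.log2(surprise_units(p)):.3f} bits")

h = entropy_bits(access.values())
print(f"Entropy(X) = {h:.4f} bits")
```

The entropy comes out at roughly 0.81 bits, below the 1 bit of a fair coin, which matches the intuition that a 75/25 outcome is less surprising on average than a 50/50 one.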

Oh wow, this was simple and amazing, thanks!

IMO this video gives a pretty poor explanation of the essence, hiding it behind algebraic transformations. I found a much better video that actually explains why we use log() without "believe me or not...": ua-cam.com/video/q0AkK8aYbLY/v-deo.html

The most intelligent people are the ones who are able to explain the hardest concepts in the most intuitive way possible. Thanks.

Best explanation of the KL divergence on UA-cam for sure. Thanks.

I skipped through the video, but I don't think you managed to explain how the formula itself deals with the infinities that are created when inputting log(0). That's what I don't understand.

Great Video

Thanks for creating this video, it is awesome.

Thanks bro, that is such an elegant and concise explanation of the concept! Really Helpful

Very good!

This is so well explained. thank you so much!!! Now I know how to understand KL divergence, cross entropy, logits, normalization, and softmax.

I wish more professors could hit all the insights that you mentioned in the video.

Well done, Adian. I just found out-though I'm not surprised at all, in the Shannon sense 🤓 -that you're doing a PhD at Cambridge. Congratulations! Best wishes for everything 🙂

How does the use of soft label distributions, instead of one-hot encoding hard labels, impact the choice of loss function in training models? Specifically, can cross-entropy loss still be effectively utilized, or should Kullback-Leibler (KL) divergence be preferred?

Useful video.

great video, thank you!

Nice video

What a great and simple explanation of the topic! Great work 👏

Excellent. Short and sweet.

distirbution

thank you codexchan

Thank you for the best explanation

This is a great explanation, thank you!

It does not explain the most important part: how the formula for a non-uniform distribution came about.

I agree. The transition from a uniform distribution to a non-uniform distribution should be the most important and confusing part.

Great explanation. Thank you so much!

Adian. I know you're probably super busy doing PhD things, but come back & make some more videos! You're a gifted orator.

Best explanation on the interwebs!

what is true class distribution?

the frequency of occurrence of a particular class depends on the characteristics of the objects

So a Kale Divergence of zero means identical distributions? What do the || lines mean?

<3 this. 👌

wowowowo

Perfectly explained in 5 minutes. Wow.

Such a clear explanation! Thanks!

Three bits to tell the guy on the other side of the wall what happened, and it suddenly made sense. Thanks.

This is an incomplete hypothesis, because having p(x) and ln(p(x)) come without proof is grossly insulting, and it ignores the basic principle of conservation of energy, meaning if pressure goes up then volume also goes up to release the pressure and go back to normal, and that is the frustrating truth of life. The beast never dies, it only changes its phases or faces.

Excellent video. Can someone help me understand why is it called Divergence in the first place? Why are we taking 1/N power to normalise it to sample space, I did not understand the logic behind this.

Not really a great explanation, so many terms were thrown in. That's not a good way to explain something.

Thankkkk youuuuu.

Thank you so much for this video and clear explanation!

Make more videos please, you are awesome.

Excellent expositions on KL divergence and Cross Entropy loss within 15 mins! Really intuitive. Thanks for sharing.

Great video! Can you make a video about soft actor critic?

Thank you!

Bro really explained it in less than 10 mins when my professors don't bother even if it could be done in 5 secs. A true masterpiece, this video. Keep it up, man 🔥🔥🔥