Graph Node Embedding Algorithms (Stanford - Fall 2019)


- Published Oct 31, 2019

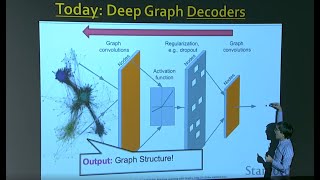

- In this video, Jure Leskovec explains a group of recent node embedding algorithms, including Word2vec, DeepWalk, NBNE, Random Walk, and GraphSAGE. Amazing class!

- Science & Technology

Watched it for the third time and now everything makes sense.

15:30 Basics of deep learning for graphs

51:00 Graph Convolutional Networks

1:02:07 Graph Attention Networks (GAT)

1:13:57 Practical tips and demos

Great, Sir. Congratulations on your outstanding teaching capabilities. It really changed my life and my view of graph networks. Thank you very much, Professor.

Prof. Leskovec covered a lot of material in 1.5 hours!

It was very engaging because of his energy and teaching style.


Thank you for this lecture. Really changed my view about GCNs

What's the major point that stuck in your head? Let others know if it's convenient for you. Thanks.

Awesome! Thanks for sharing. Will the hands-on session be posted?

Thank you so much for making this lecture publicly available. I have a question, is it possible to apply node embedding to dynamic graphs (temporal)? Are there any specific methods/algorithms to follow?

Thanks in advance for your answer.

Awesome video. Please share more on this topic!

Amazing lecture on GNNs.

Great work..

Hello. These lectures are very interesting. Would it be possible to share the GitHub repositories so that I can get a better understanding of the code involved in the implementation of these concepts?

Classes so fun. The death here is different than the death in Computer Vision due to NSA death.

@Machine Learning TV Yes, and please share the link where you shared all the graph representation learning lectures. I would be thankful.

I think it is this one: ua-cam.com/video/YrhBZUtgG4E/v-deo.html

Where do I find the slides for this lecture?

My question at 31:00: what if the previous-layer embedding of the same node is not multiplied by B_k, i.e. the term B_k h_v^(k-1) is dropped? What will be the impact on the embedding?
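
One way to see the effect is a small experiment. The sketch below assumes the update rule at that timestamp is the usual mean-aggregation form, h_v^k = ReLU(W_k · mean of neighbor embeddings + B_k · h_v^(k-1)); the graph, features, and function names are hypothetical and chosen only for illustration. Without the B_k term, two nodes with the same neighbors get the same embedding even when their own features differ.

```python
def matvec(M, x):
    """Multiply matrix M (list of rows) by vector x."""
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]


def mean_neighbors(h, neighbors, dim):
    """Element-wise mean of the neighbor embeddings (zeros if there are none)."""
    if not neighbors:
        return [0.0] * dim
    return [sum(h[u][i] for u in neighbors) / len(neighbors) for i in range(dim)]


def layer(h, adj, W, B=None):
    """One aggregation layer. If B is None, the self term B_k h_v is dropped."""
    dim = len(next(iter(h.values())))
    out = {}
    for v, nbrs in adj.items():
        z = matvec(W, mean_neighbors(h, nbrs, dim))
        if B is not None:
            z = [a + s for a, s in zip(z, matvec(B, h[v]))]
        out[v] = [max(0.0, zi) for zi in z]  # ReLU
    return out


# Hypothetical toy graph: "a" and "b" share the single neighbor "c"
# but have different input features.
adj = {"a": ["c"], "b": ["c"], "c": ["a", "b"]}
h0 = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 1.0]}
identity = [[1.0, 0.0], [0.0, 1.0]]  # stand-in for trained weights

without_self = layer(h0, adj, W=identity)
with_self = layer(h0, adj, W=identity, B=identity)

print(without_self["a"], without_self["b"])  # identical embeddings
print(with_self["a"], with_self["b"])        # distinguishable embeddings
```

In this toy case, dropping the self term makes a node's embedding depend only on its neighbors, so its own features only come back in through longer paths in later layers.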

43:40 I have a question about the slide here. How can you generalize to a new node when the model learns by aggregating neighborhoods and the new node doesn't have a neighborhood yet?
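
A possible way to picture this: the learned weights are shared across nodes, so they can be applied to any node that arrives with features and (optionally) edges. The sketch below is only an illustration under that assumption; the weights, features, and the `embed` helper are hypothetical. A new node that already has an edge uses its neighbors, while a fully isolated new node falls back to its own features through the self term.

```python
def matvec(M, x):
    """Multiply matrix M (list of rows) by vector x."""
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]


def embed(v, feats, adj, W, B):
    """One-layer embedding of node v using shared weights W (neighbors) and B (self)."""
    nbrs = adj.get(v, [])
    dim = len(feats[v])
    if nbrs:
        mean = [sum(feats[u][i] for u in nbrs) / len(nbrs) for i in range(dim)]
    else:
        mean = [0.0] * dim  # no neighborhood yet: the aggregate contributes nothing
    z = [a + b for a, b in zip(matvec(W, mean), matvec(B, feats[v]))]
    return [max(0.0, zi) for zi in z]  # ReLU


# Pretend these weights were learned on the original graph (hypothetical values).
W = [[1.0, 0.0], [0.0, 1.0]]
B = [[0.5, 0.0], [0.0, 0.5]]

feats = {"a": [1.0, 0.0], "b": [0.0, 1.0]}
adj = {"a": ["b"], "b": ["a"]}

# New node "d" arrives with features and one edge to "a".
feats["d"] = [2.0, 2.0]
adj["d"] = ["a"]
adj["a"].append("d")
emb_d = embed("d", feats, adj, W, B)

# New node "e" arrives with features but no edges at all.
feats["e"] = [4.0, 0.0]
emb_e = embed("e", feats, adj, W, B)

print(emb_d, emb_e)
```

No retraining happens here; the same W and B are reused, which is the sense in which the approach is inductive.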

Wanna learn the whole series...

ua-cam.com/play/PL-Y8zK4dwCrQyASidb2mjj_itW2-YYx6-.html

Any plans to publish lectures 17, 18 and 19?

Yep! Soon we will upload new lectures!

In GCN, we get a single output. In GraphSAGE, you concatenate to keep the info separate. So at each step, the output H^k will have 2 outputs, won't it? If not, then how are they aggregated and still kept separate?

Kanishk Mair hi, I didn’t understand either. Did you find anything about it?

I tried working with it in PyTorch Geometric (SAGEConv). Not sure how it works, but looking at its source code might help.

I think the concatenated output is the embedding of the target node. And it depends on the downstream task to further process it, by passing it through more layers, before having the final output.
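
To make the thread above concrete, here is a minimal sketch, assuming the GraphSAGE update is W · CONCAT(h_v, AGG(neighbors)) followed by a nonlinearity (the nonlinearity is omitted here, and all numbers and function names are hypothetical). It shows that the layer still returns one vector per node, and that multiplying the concatenation by W is the same as applying separate weight blocks to the self and neighbor parts and adding the results. That is the sense in which the two sources are "kept separate".

```python
def matvec(M, x):
    """Multiply matrix M (list of rows) by vector x."""
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]


def sage_concat(h_self, h_neigh, W):
    """Concatenate self and aggregated-neighbor vectors, then apply one weight matrix."""
    return matvec(W, h_self + h_neigh)  # list + list is concatenation


def sage_split(h_self, h_neigh, W_self, W_neigh):
    """Apply separate weights to each part and add the results."""
    return [a + b for a, b in zip(matvec(W_self, h_self), matvec(W_neigh, h_neigh))]


h_self = [1.0, 2.0]    # embedding of the target node from the previous layer
h_neigh = [3.0, 4.0]   # already-aggregated (e.g. mean) neighbor embedding

# One 3x4 weight matrix acting on the 4-dimensional concatenation.
W = [
    [1.0, 0.0, 0.5, 0.0],
    [0.0, 1.0, 0.0, 0.5],
    [1.0, 1.0, 1.0, 1.0],
]
W_self = [row[:2] for row in W]   # columns that multiply h_self
W_neigh = [row[2:] for row in W]  # columns that multiply h_neigh

out_concat = sage_concat(h_self, h_neigh, W)
out_split = sage_split(h_self, h_neigh, W_self, W_neigh)

print(out_concat)  # a single 3-dimensional output vector
print(out_split)   # the same values
```

So there is a single output per node; the self and neighbor information simply get their own weights before being combined.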

Hi, where can I find his next lectures?

We will upload them soon

ua-cam.com/play/PL-Y8zK4dwCrQyASidb2mjj_itW2-YYx6-.html

Can you share the hands-on link?

Where can I get slides?

You can find them here: web.stanford.edu/class/cs224w/

On behalf of people from a remote eastern country: niubi!!!!

Sir, can you please share Tuesday's lecture?

The past Tuesday?

@MachineLearningTV Yes, and please share the link where you shared all the graph representation learning lectures. I would be thankful.

It is available now. Check the new video

Deeper networks will not always be more powerful, as you may lose vector features in translation. And due to the additional weight matrices, the neural network will be desensitized to the feature input. The number of hidden layers should not be greater than the input dimension.

How do you aggregate dissimilar features? For example, sex, temperature, and education level for each node?
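
One common preprocessing approach (an assumption here, not something stated in the lecture) is to encode each attribute numerically first, so every node has a feature vector of the same length, and only then aggregate. The sketch below uses hypothetical category lists and a hypothetical temperature scaling: one-hot for sex, a centered value for temperature, and a scaled rank for education level.

```python
SEXES = ["female", "male"]
EDU_LEVELS = ["primary", "secondary", "bachelor", "master", "phd"]


def encode(node):
    """Turn mixed attributes into one numeric feature vector."""
    sex_onehot = [1.0 if node["sex"] == s else 0.0 for s in SEXES]
    # Hypothetical scaling: center body temperature at 37 degrees C.
    temp = node["temperature_c"] - 37.0
    # Ordinal attribute mapped to the range [0, 1].
    edu = EDU_LEVELS.index(node["education"]) / (len(EDU_LEVELS) - 1)
    return sex_onehot + [temp, edu]


def mean_aggregate(vectors):
    """Element-wise mean over a list of equal-length vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


neighbors = [
    {"sex": "female", "temperature_c": 38.0, "education": "phd"},
    {"sex": "male", "temperature_c": 36.0, "education": "primary"},
]

encoded = [encode(n) for n in neighbors]
aggregated = mean_aggregate(encoded)
print(aggregated)
```

After encoding, each position in the vector has a consistent meaning across nodes, so the mean is taken per attribute rather than across unrelated attributes.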

ua-cam.com/video/7JELX6DiUxQ/v-deo.html "what we would like to do is here input the graph and over here good predictions will come" Yes, that is exactly it! xD

They pay so that it looks like they have more followers. In fact, you pay 10 pesos for each video.