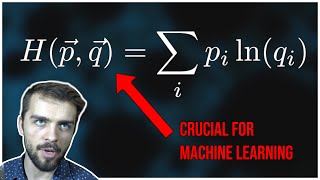

What is the difference between negative log likelihood and cross entropy? (in neural networks)

- Published 22 Aug 2024

- Full video list and slides: www.kamperh.co...

Introduction to neural networks playlist: • Introduction to neural...

Yet another introduction to backpropagation: www.kamperh.co...

This is one of the better explanations of how the heck we go from maximum likelihood to using NLL loss to log of softmax. Thanks!

Thanks Herman. I'm following a PyTorch tutorial and got lost when I saw that the cross entropy computation equals the NLL one. This definitely filled the gap.

Very happy this helped!! :D
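
For readers who want to check the equivalence mentioned in the comment above, here is a minimal sketch in plain Python (no PyTorch). It assumes a single training example with a one-hot target; the logits, the class index, and the helper names `log_softmax`, `nll` and `cross_entropy` are all made up for illustration and are not taken from the video.

```python
import math

def log_softmax(logits):
    """Log of the softmax probabilities, using the max-shift trick for stability."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - log_sum for z in logits]

def nll(log_probs, target):
    """Negative log likelihood of the correct class index."""
    return -log_probs[target]

def cross_entropy(log_probs, target_dist):
    """Cross entropy between a target distribution and the predicted distribution."""
    return -sum(t * lp for t, lp in zip(target_dist, log_probs))

# Hypothetical network outputs (logits) for a 3-class problem.
logits = [2.0, -1.0, 0.5]
target = 0  # assumed index of the correct class

# One-hot target distribution: all probability mass on the correct class.
one_hot = [1.0 if k == target else 0.0 for k in range(len(logits))]

log_probs = log_softmax(logits)
nll_value = nll(log_probs, target)
ce_value = cross_entropy(log_probs, one_hot)

print(nll_value, ce_value)  # the two losses agree for a one-hot target
```

With a one-hot target, every term of the cross-entropy sum except the one for the correct class is multiplied by zero, so only the negative log probability of the correct class remains, which is the NLL. In PyTorch, `torch.nn.functional.cross_entropy` takes raw logits and applies the log-softmax internally, while `torch.nn.functional.nll_loss` expects log-probabilities as input, which is why tutorials often show the two producing the same number.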

Good explanation. Thank you Herman

Thanks for the video

Why is it preferred to solve the problem by minimizing the cross entropy rather than the NLL? Are there efficiency benefits to doing that?