Forest of Thoughts: Boosting Large Language Models with LangChain and HuggingFace


- Published 31 May 2024

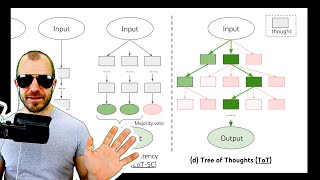

- Tree of Thoughts becomes Forest of Thoughts, with the addition of multiple trees. Unlock the power of Large Language Models with our deep dive into the LangChain framework and HuggingFace integration! Join Richard Walker in this exciting exploration of 'Forest of Thoughts' - an innovative technique that can boost your AI's problem-solving abilities.

In this video, we start by revisiting the 'Tree of Thoughts' prompting technique, demonstrating its effectiveness in guiding Language Models to solve complex problems. Then, we introduce LangChain, a tool that simplifies prompt creation, allowing for easier and more efficient problem-solving.

We don't stop at single-model reasoning, though! We'll show you how to use LangChain to run multiple AI models in parallel, each equipped with unique prompts. And as a grand finale, we bring in HuggingFace - a platform that hosts a plethora of Language Models, adding even more diversity to our AI team.
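As a rough illustration of "each equipped with unique prompts", here is a minimal sketch that uses only Python's standard library rather than LangChain's own templating classes. The prompt wording and the names `TREE_TEMPLATES` and `build_prompts` are illustrative assumptions, not the code shown in the video.

```python
# Hypothetical Tree-of-Thoughts style templates: each "tree" gets its own wording.
TREE_TEMPLATES = [
    "Imagine three different experts are answering this question. "
    "Each expert writes down one step of their thinking, then shares it with the group. "
    "Any expert who realises they are wrong drops out.\nQuestion: {question}",
    "Three detectives reason about the scenario one step at a time and "
    "critique each other's conclusions before continuing.\nQuestion: {question}",
    "Three logicians each propose an answer, assign it a likelihood, and "
    "revise it after hearing the others.\nQuestion: {question}",
]


def build_prompts(question: str) -> list[str]:
    """Fill every template with the same question, giving one prompt per tree."""
    return [template.format(question=question) for template in TREE_TEMPLATES]


prompts = build_prompts("Where is the watch?")
for number, prompt in enumerate(prompts, start=1):
    print(f"--- tree {number} ---\n{prompt}\n")
```

Each resulting prompt could then be sent to a different model, which is where the "forest" comes from.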

Whether you're an AI enthusiast, a developer, or just curious about the future of technology, this video will provide valuable insights.

✅ Subscribe for more fascinating AI explorations:

👍 Don't forget to like, comment and share.

Time Stamps:

0:00 - Introduction

0:46 - 'Tree of Thoughts' recap

1:46 - LangChain

2:23 - Single-model reasoning with LangChain

3:21 - Multi-model reasoning with LangChain

5:29 - Integrating HuggingFace

6:52 - Conclusion

For more details:

🔗 Code on GitHub:

bit.ly/3J8Y2tH

🔗 Join Lucidate:

bit.ly/3J5E5Ux

🔗 LangChain ToT development:

bit.ly/3CogYRm

#LangChain #HuggingFace #LargeLanguageModels #AI #MachineLearning #ArtificialIntelligence #TechnologyExploration #ForestOfThoughts


also please take a look at "Thought Cloning: Learning to Think while Acting by Imitating Human Thinking" paper

Thanks. I’ve not looked at that yet. I will add it to the list. Greatly appreciated, Lucidate.

Thanks 🙏

Very interesting idea to simply emulate the tree of thoughts approach with a prompt. It's crazy that the language model is able to make a tree of thoughts by just asking it to do one. It just shows how smart those models really are.

Definitely worth experimenting with the prompts. Some work, some fail disastrously! Clearly there is a way to go before this technique can be described as "robust". But as I said, worth tweaking around and trying out different prompts for different scenarios. As you say there we are finding out that there is seemingly more to these encoder/decoder transformer neural networks than meets the eye... Thanks for the comment, greatly appreciated. I hope there is other material on the Lucidate channel that you find equally illuminating.

Love the line: “language is the interface”

Me too! I hope you enjoyed the rest of the video as much as you enjoyed that line. Lucidate.

Thanks for sharing your helpful explanation

Glad it was helpful! I hope you find the rest of the videos on the Lucidate channel as insightful!

Great take on the argument.

Thanks @mayorc. Greatly appreciated. Lucidate.

Another fantastic video Richard. I am going to set up your llm Forest of Thoughts experiment and test it out.😊

Glad to hear it Joseph. Keen to see your results if you are happy to share them. In my experiments the OpenAI models performed far better than anything I could find on HF, but there are so many models there that I can’t believe something won’t give Mr Altman a run for his money. Richard. Thanks again for your kind words and your generous support of the channel.

This is great! However, I noticed a small drawback when imagining the creation of a massive forest of 1000 GPT-4-powered trees: it would significantly impact my API bill. But here's some exciting news: I've heard that there is an upcoming release of an open-source Orca model that could potentially solve this problem. Once Orca is published and available, I would be able to run it on my own GPU, allowing me to tackle complex problems without worrying about excessive costs 😆

Agreed. If you want to use a paid-for model like any of the OpenAI models then you are able to do so. Similarly there are lots of free models to choose from, from places like HuggingFace or other sources. I used paid-for models in this instance simply because the results were good, and they are available (as you say, for a price) to anyone with an OpenAI key, so they can create their own results and compare them with others. Frankly I haven’t tried (or even considered!) scaling this approach to 1000 trees in a forest, and I don’t think that it would be that effective. Where I _think_ it would be useful is with diverse specialist models for each “tree” in a very small “wood” (perhaps up to eight or so trees). Do you have other thoughts?

@@lucidateAI The Orca model is significantly better because it excels in reasoning and is on par with GPT-3, which is quite impressive for a 15B model. I believe utilizing the "Tree of Thoughts" approach would be very effective and scalable, especially when each tree receives a slightly different prompt for processing, resulting in greater response diversity. Another aspect to consider is refining the final judge so that it doesn't rely on a single outcome but rather the culmination of reasoning from individual trees' results. It seems to me that this approach is scalable and has tremendous potential.

Agree on all points
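The suggestion that the final judge should weigh the reasoning of every tree, rather than a single outcome, could look something like the sketch below. The function name `build_judge_prompt`, the prompt wording and the sample tree outputs are assumptions made for illustration only.

```python
def build_judge_prompt(question: str, tree_outputs: dict[str, str]) -> str:
    """Combine every tree's full reasoning into one prompt for a judge model."""
    sections = [
        f"Tree '{name}' reasoned as follows:\n{reasoning}"
        for name, reasoning in tree_outputs.items()
    ]
    return (
        f"Question: {question}\n\n"
        + "\n\n".join(sections)
        + "\n\nWeigh the reasoning of every tree, note where they agree or "
        "disagree, and then give the single most likely answer."
    )


# Made-up tree outputs, purely to show the shape of the judge prompt.
judge_prompt = build_judge_prompt(
    "Where is the watch?",
    {
        "tree_1": "The watch fell out when the towel was shaken by the pool.",
        "tree_2": "The watch stayed wrapped in the towel until the snack bar.",
    },
)
print(judge_prompt)
```

The judge sees the full chain of reasoning from each tree, so a minority answer with stronger reasoning is not simply outvoted.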

What Orca says to me is that training data can vastly improve the NN's performance.

When you think about it humans are trained on a miniscule amount of data (in comparison). So there's probably room for even greater improvements.

@@danberm1755 It's best to currently train Large Language Models (LLMs) using children's fairy tales as the foundational material. Stories about sleeping princesses, the battle of good against evil, and other typical narratives have long served as a tool for teaching young children morality and good behavior.

great video - thanks!

You are welcome! Glad you enjoyed it!

Great and straightforward tutorial, appreciate it!

Glad it was helpful! Many thanks for your kind words. I hope you find the other content on the Lucidate site as insightful.

@@lucidateAI yep, just added a bunch to the queue!

Great! Keen to hear the reviews, and suggestions for additional material. Lucidate.

I think it's perfectly reasonable to think that the watch was left in the towel at the snack bar. Because he put the watch "in" the towel, this may imply that the towel had a built-in pocket for holding small items like a watch. We don't know exactly how the watch was placed in the towel, but Carlos could have folded up the towel's corner containing the watch as he vigorously shook it. The AI is projecting its level of competence onto Carlos. Why would Carlos be so careless as to forget his watch was in the towel and not notice that he dropped the watch while he was by the pool side?

It is indeed reasonable; furthermore it is possible that the watch is in the snack bar. But I consider it unlikely. All your statements are correct - we don't know how the watch was placed in the towel, nor do we know if the towel had a pocket. The more information that can be provided, the better the AI can make an informed decision as to the location of the watch after all of the events described unfolded. But the real point of the video is crucial, and perhaps should be restated. The quality of the output of the AI can be improved utilising prompting techniques. This is not to say that the AI will always get it "right", or even that there is a "right" answer. You are entitled to your view that it is reasonable to think (given the information provided) that the watch is in the snack bar. I'll re-state that I agree that it is reasonable and possible, but (at least IMHO) not the most likely outcome. But tweaking the scenarios, modifying the prompts, challenging the answers (and the assumptions) are key elements of this branch of AI and I welcome your challenging of the conclusions of this video.

@@lucidateAI By tuning AI to our perception of "right", we are introducing the flaws of the human world view when maybe at times we should be more objective and try to see what the AI sees.

The towel lacked critical information, and our interpretation of the problem is biased to our understanding of the world.

Amazing! Thanks.

Glad you liked it!

Oh, this is a good one.

Glad you like this too! Don't forget to like, subscribe and share! ;-)

Thank you for the video !

You are very welcome. Glad you enjoyed it! Was there anything specifically you found particularly insightful or inspiring?

@@lucidateAI the implementation of ToT, I am thinking of automating it with python, and this implementation gave me some intuition on how to.

Plus the integration with Langchain is very useful, it's something I am definitely going to try !

Thanks for the elaboration. Definitely worth (IMHO) getting familiar with LangChain.

I was looking for this type of channel now found it

Thanks Shivam. I hope you find the rest of the channel as insightful as this video. Keen to get your feedback in due course. Lucidate.

My Tesla K80 finally came in. Soon I will be joining y'all in this journey.

Rick, you are most welcome on the AI bus. Glad to have you aboard! Lucidate.

Hey by the way GPT-4 was able to correctly answer this question but i love this Forest of Thought that you have setup. Thanks for sharing

You are welcome!

It seems like with GPT you need to select the persona you'd like before prompting.

This seems to me like a big deal. GPT is extremely fluid in its persona and needs to be guided when initialized (as your tree of thought prompt does).

The prompt certainly makes a massive difference in the output. In all respects. Hence the current interest in prompt engineering. Appreciate your comment and your insights. Lucidate.

so i decided to allow GPT-4 to update the Prompt Template after i gave it the Prompts that i was working with and this was his response: This approach of combining different LLMs and prompt templates to solve a complex problem is quite innovative. However, you might want to make a few adjustments to the templates to help the LLMs better handle the problem:

When experts are presenting their reasoning, it's important to have them explicitly state each action taken by the character in the question and their interpretation of it. This will help ensure that each step of the scenario is properly considered.

When experts are critiquing each other's responses, it might be beneficial to have them point out specific details or assumptions that they disagree with. This can encourage a more thorough examination of the scenario.

When experts are assigning a likelihood to their assertions, it would be helpful to have them explain why they believe their assertion is likely or unlikely. This can provide more insight into their thought process
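The three adjustments above could be folded into a single prompt template along these lines. This is only a sketch: the wording of `IMPROVED_TEMPLATE` and the helper `render` are assumptions, not the template GPT-4 actually produced.

```python
# Hypothetical template that encodes the three suggested adjustments.
IMPROVED_TEMPLATE = (
    "Three experts are answering the question below.\n"
    "1. When presenting reasoning, each expert explicitly states every action "
    "taken by the character and how they interpret it.\n"
    "2. When critiquing, each expert points out the specific details or "
    "assumptions they disagree with.\n"
    "3. When assigning a likelihood, each expert explains why the assertion "
    "is likely or unlikely.\n"
    "Question: {question}"
)


def render(question: str) -> str:
    """Insert the question into the improved template."""
    return IMPROVED_TEMPLATE.format(question=question)


print(render("Where is the single most likely location of the book?"))
```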

Thanks @shemaiahsanders4950, appreciate your comments and insights. Point 1, completely agree - this has been my experience. There is no question in my mind that the quality of the output improves with this explicit step. Point 2, this is interesting (much to my shame I have not looked at this); the only downside I see is gumming up the context window and higher token costs - but as context windows are increasing rapidly and costs of access are dropping (though not quite so rapidly...) this is not a major concern. I'll take a closer look at this - it seems like a great suggestion. Do you have any results that you are comfortable sharing? Point 3 seems like a good idea (again not something I've tried). I'm slightly more excited about your second suggestion, but again this seems worth trying and I'll give it a go. Again if you have results that you are comfortable sharing I'd love to see them, but completely understand should you not wish to. Thanks for your support of and contribution to the channel. I hope that others browsing the comments find your observations as insightful as I have. Lucidate.

@@lucidateAI oh yes i can share them of course. This is your creation and i love it . I'm just putting my 2 cents in when i can :0 if you don't mind. Where should i display the results ? and you are right it can be a bit pricey if you are not careful. is it possible that you can add tiktoken and price return results like you have done beautifully to Autopilot v.6?
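For anyone wanting a rough idea of what the token and price reporting requested here might involve, the sketch below counts tokens with `tiktoken` when it is available and falls back to a crude characters-divided-by-four guess otherwise. The function names and the per-1,000-token prices in the example are placeholders chosen for illustration, not real price quotes and not the code from Autopilot.

```python
def count_tokens(text: str, model: str = "gpt-4") -> int:
    """Count tokens with tiktoken if possible, else use a rough 4-chars-per-token guess."""
    try:
        import tiktoken  # optional dependency

        encoding = tiktoken.encoding_for_model(model)
        return len(encoding.encode(text))
    except Exception:
        # Fallback heuristic (an assumption, not an exact count).
        return max(1, len(text) // 4)


def estimate_cost(
    prompt_tokens: int,
    completion_tokens: int,
    prompt_price_per_1k: float,
    completion_price_per_1k: float,
) -> float:
    """Estimate the cost of one call from token counts and per-1,000-token prices."""
    return (
        prompt_tokens / 1000 * prompt_price_per_1k
        + completion_tokens / 1000 * completion_price_per_1k
    )


# Placeholder prices: look up the current price list before relying on any figure.
cost = estimate_cost(
    prompt_tokens=1000,
    completion_tokens=500,
    prompt_price_per_1k=0.03,
    completion_price_per_1k=0.06,
)
print(f"estimated cost for one tree: ${cost:.4f}")
```

Calling `count_tokens` on each prompt and each response, then summing `estimate_cost` over all trees and the judge, would give an estimate for a whole forest run.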

Don't mind at all! Totally delighted! If you can get the results into YouTube comments this is a great place to share so others can see; alternatively you can always email me at info@lucidate.co.uk. Or if you want to join Lucidate YouTube membership at VP level or above (this is a monthly paid subscription, so I understand if this is not for you) you can share with other members on the Lucidate Discord.

amazing

Thanks Geraldo. I'm glad you enjoyed the video. Here's hoping that you find the rest of the videos on the Lucidate channel just as amazing (if not amazinger!). Thanks for the comment, don't forget to 'Like' & 'Subscribe'! ;-) Greatly appreciated! Lucidate.

I'm curious instead of pre-defining HF models how would it work with HuggingGPT, which selects models itself :)

Thanks Attila. Some of my thoughts on that matter (and others relating to HuggingGPT) are contained in this playlist: ua-cam.com/play/PLaJCKi8Nk1hy48P5fJWkD-AJBVi9JDhTZ.html . Lucidate

Would be nice to thread the LLMChains to make them truly parallelized (as stated in the video). This makes the script run in 15s rather than 45s. Basically makes the amount of chains quasi-unlimited assuming you don't blow past your API limits (which can be throttled and batched btw). Only code you need is this:

    import concurrent.futures

    def run_llm_chain(llm_chain, question):
        return llm_chain.run(question)

    if IS_THREADED:
        # Run the three chains concurrently; map() returns results in input order.
        with concurrent.futures.ThreadPoolExecutor() as executor:
            responses = list(executor.map(run_llm_chain, [llm_chain_1, llm_chain_2, llm_chain_3], [question]*3))
    else:
        response_1 = llm_chain_1.run(question)
        response_2 = llm_chain_2.run(question)
        response_3 = llm_chain_3.run(question)

I say if we're all going to be using langchain a lot, we need to get used to threading and controlling the stream. Must do.
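To try the threading idea without any API keys, here is a self-contained sketch in which stub functions stand in for the LLM chains. The names `make_stub_chain` and `chains` and the 0.2 second delay are assumptions for illustration; `max_workers` acts as a crude throttle on how many calls are in flight at once.

```python
import concurrent.futures
import time


def make_stub_chain(name: str, delay: float = 0.2):
    """Return a stand-in for an LLM chain that sleeps to mimic network latency."""

    def run(question: str) -> str:
        time.sleep(delay)
        return f"{name} answer to: {question}"

    return run


chains = [make_stub_chain(f"chain_{i}") for i in range(1, 4)]
question = "Where is the watch?"

start = time.perf_counter()
# max_workers caps the number of concurrent calls (a simple way to respect rate limits).
with concurrent.futures.ThreadPoolExecutor(max_workers=3) as executor:
    # map() yields results in the same order as the input chains.
    responses = list(executor.map(lambda chain: chain(question), chains))
elapsed = time.perf_counter() - start

for response in responses:
    print(response)
print(f"elapsed: {elapsed:.2f}s")
```

Because the stubs spend their time waiting rather than computing, threads overlap the waits, which is the same reason threading helps with real API calls.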

Makes perfect sense and thanks for sharing the code. The original video was more about the concepts than performance and tuning, but your suggestion is definitely an improvement and definitely worth sharing (and welcome!). With thanks! Lucidate.

@@lucidateAI Yeah, it's been a bugaboo of mine even watching James' langchain and pinecone vids. Clearly all this stuff was meant to be threaded, but we're leaving that part out (assuming it's not built into a package).

That, and pre-caching are super important.
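On the caching point, a minimal sketch with the standard library's `functools.lru_cache` is shown below. The `slow_model` function is a hypothetical stand-in for a paid API call, and caching like this is only sensible when repeated prompts should return the same answer (for example at temperature 0).

```python
import functools

calls = []  # records every time the (stub) model is really invoked


def slow_model(prompt: str) -> str:
    """Hypothetical stand-in for an expensive LLM call."""
    calls.append(prompt)
    return f"answer to: {prompt}"


@functools.lru_cache(maxsize=256)
def cached_model(prompt: str) -> str:
    """Return a stored answer for prompts that have been seen before."""
    return slow_model(prompt)


first = cached_model("Where is the watch?")
second = cached_model("Where is the watch?")  # served from the cache
print(first == second, len(calls))
```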

Completely agree. But I may be yet another that continues to frustrate you. There is only so much content that you can get into an 8-13 min YT video. You have given me food for thought though on a series of performance and optimization videos. There seems to be a lot of material on *what* you can do with these models, but as you adroitly point out not as much on *how* you should use them. Thanks for your comment and key observation. Lucidate.

I'm really curious of a setup, when in "trees" the prompt templates are fine tuned by "experts", probably "experts" have their own prompting methods ( CoT, SC, other), own prompt templates and prompt vars (like skills, job, role etc.) and this way a "tree" creates its output. And this is repeated in forest topology eg. with different HF models added.

Are you describing a combination of FoT along with the ideas presented in this video: ua-cam.com/video/t-hp-z-2N-Q/v-deo.html ? If you are, then I agree with you - (if you aren't then I guess I haven't quite grasped what you are saying...). Lucidate

@@lucidateAI I meant this video and its terminology, where a "tree" is a LLM with a unique prompt template, a "forest" is the 3 "trees" together, if I understood it correctly (whether aggregator LLM is included in "forest", here is irrelevant),.

After rewriting my long response: in this video there are 3 trees, using different LLMs and ToT prompting methods/techniques in each tree.

What I mean is that in a tree, which uses say 4 different experts, these experts should have their own prompting technique and template with vars like skills, role.

So say:

#1 expert Python coder using CoT

#2 wealthy and expert businessman using SC

#3 Elon Musk :)

#4 Warren Buffet :)

I will work on it I promise :)
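One way to picture the setup described in this comment, where each expert inside a tree carries its own role, technique and template variables, is sketched below. The `Expert` dataclass, the `skills` values and the hint text for CoT and SC are assumptions for illustration; only the four expert roles come from the list above.

```python
from dataclasses import dataclass


@dataclass
class Expert:
    role: str
    skills: str
    technique: str = ""  # e.g. "CoT" or "SC"; empty means no specific technique


# Hypothetical one-line instructions for each prompting technique.
TECHNIQUE_HINTS = {
    "CoT": "Think step by step before answering.",
    "SC": "Draft several independent answers and pick the most consistent one.",
}


def expert_prompt(expert: Expert, question: str) -> str:
    """Build a prompt from the expert's role, skills and prompting technique."""
    hint = TECHNIQUE_HINTS.get(expert.technique, "Answer in your own style.")
    return (
        f"You are {expert.role} with skills in {expert.skills}. {hint}\n"
        f"Question: {question}"
    )


experts = [
    Expert("an expert Python coder", "software design", "CoT"),
    Expert("a wealthy and expert businessman", "negotiation", "SC"),
    Expert("Elon Musk", "engineering and product strategy"),
    Expert("Warren Buffet", "long-term investing"),
]

expert_prompts = [expert_prompt(e, "Should we build or buy this tool?") for e in experts]
for prompt in expert_prompts:
    print(prompt, end="\n\n")
```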

Look forward to seeing your results!

love it

Once again, many thanks for your kind words. Glad you are enjoying the material. Lucidate.

Let's upgrade Graph of Thoughts with Forest :)

My thoughts exactly ;-)

Can a similar result be achieved manually (from 'first principles'), without using LangChain, just using several different chat engines, perhaps cutting and pasting the answers between them (and maybe asking in the prompt to emulate a certain temperature)? I ask this because I have struggled to understand elsewhere what LangChain adds at the basic level (I think at the API level it can reduce boilerplate per model/database). Do you think this can all be done manually? If so, I know that I could program it myself, accessing the APIs (Python is not my programming language!) or indeed just do it manually.

I'm a huge fan of LangChain, so perhaps my answer is biased. For me it makes interacting with LLMs straightforward. It handles prompt templating, Agents to fetch information from apps, documents and the Internet, and much more besides. Here is a playlist that goes through some of the functionality ua-cam.com/play/PLaJCKi8Nk1hwZcuLliEwPlz4kngXMDdGI.html (a tiny amount of the full capabilities of the framework). I'm not affiliated in any way, there is no commercial relationship between Lucidate and LangChain - I'm simply an enthusiastic user. But to answer your question, the answer is more or less "yes" to everything (notwithstanding your correct assertion about things like temperature - there are some things that you can only do precisely via code). There are myriad ways to access LLMs and integrate them with other information sources and apps. None of them is absolutely 'Right' or absolutely 'Wrong' (despite what some will tell you). But many will be easier to implement and (perhaps more importantly in a rapidly evolving field like AI) maintain and extend. And this is where frameworks like LangChain really excel. Yes I think that they make initial development easier, but they make it so much more straightforward to add to, modify or fix your AI systems. (Again not to say that folks couldn't write unmaintainable stuff using LangChain - a tool is as good as the craftsperson that uses it). But at the end of the day, you do what you want to do. Don't let anyone tell you different. But if you are willing I'd (politely) ask that you take another look at LC. Thanks for your question David and support of the channel. Lucidate.

@@lucidateAI What an incredibly informative answer! Thank you! I will go through this suite of videos over the next few days, now understanding your familiarity and respect for LangChain. And of course many thanks for confirming that this can be done manually - this helps me understand that the cleverness is in the LLM answering via 'Tree of Thoughts > Forest of Thoughts'. For the moment, until I know otherwise, I'm thinking that LangChain works as a co-ordination tool, as important as an airport traffic control tower, when co-ordinating high-speed AI.

Good analogy! (If you get a chance to watch the videos you see I come up with a couple of analogies for LangChain myself. I do like your ATC one!)
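To make the "first principles" route discussed in this thread concrete, here is a sketch of the whole flow with no framework at all: any function that takes a prompt string and returns a string can play the part of a model. The names `run_forest` and `Model`, the prompt wording and the stub models are assumptions; real use would replace the stubs with calls to whichever chat APIs are at hand.

```python
from typing import Callable

Model = Callable[[str], str]  # anything that maps a prompt to a reply


def run_forest(question: str, trees: list[tuple[str, Model]], judge: Model) -> str:
    """Ask every tree its own prompt, then hand all the answers to a judge model."""
    answers = [model(template.format(question=question)) for template, model in trees]
    numbered = "\n".join(f"Answer {i}: {a}" for i, a in enumerate(answers, start=1))
    return judge(
        f"Question: {question}\n{numbered}\nWhich answer is most likely correct?"
    )


# Stub models so the sketch runs without API keys.
trees = [
    ("Three experts debate step by step. {question}", lambda p: "by the pool"),
    ("Three detectives compare notes. {question}", lambda p: "by the pool"),
    ("Three logicians assign likelihoods. {question}", lambda p: "at the snack bar"),
]


def stub_judge(prompt: str) -> str:
    """Pretend judge: just reports how many answers it was shown."""
    count = prompt.count("\nAnswer ")
    return f"judge reviewed {count} answers"


result = run_forest("Where is the watch?", trees, stub_judge)
print(result)
```

This is the same shape as cutting and pasting answers between chat windows by hand; a framework mainly removes the boilerplate around it.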

You should be able to use this method when chatting with PDFs, with the PDF serving as the dataset, and then you can ask it problem-solving questions to obtain the right answer. I'm going to try to set this up.

This is easy to do with LangChain - have you explored this option? Lucidate

What's next after Forest of Thoughts? Ecosystem of Thoughts?

You obviously are not currently experimenting with Python and LangChain…..

@@avidlearner8117 Oh I am, and I'm just blown away by the quality of this guy's content.

Thank-you Ryan. Greatly appreciated! Lucidate.

Excellent. Both TOT and FOT seem to supersede COT. Perhaps the use case for COT is simpler searches!? So the prompt template "3 experts evaluating each other ..." seems to trigger TOT and consequently FOT in the language model, and is not built in with language models. LangChain has an agent type 'agent=AgentType.REACT_DOCSTORE' that triggers COT; are you saying that LangChain is going to create an agent for TOT now? By the way, the GitHub link seems to be down at the moment, provided there is no error in the spelling.

GH link should be working now. I've updated the description with a bitly link (it appears that YT was truncating links in descriptions longer than 160 chars). Can you see if it links through now, or if I have to do something else to fix this? Thanks Kevin! Lucidate.

@@lucidateAI works great thanks

Thanks!

Hi The Github repo provided for your forest of thoughts script sends a 404 error message. Could you please make the code available as it seems like the repository is still marked as private

Thank you kindly

There was an error which I believe I've now fixed. It appears that YT is truncating URLs greater than 160 characters in the description. I've updated with a bitly link. Can you check and see if it is working now? You'll need to log in to GH naturally or you will get 404'ed.

In above example, how to generate Unique LLM Decorator prompts in generic ?

I'm sorry @indrakumarreddy6679 but I don't fully understand your question. Can you re-phrase, elaborate or provide an example?

Couldn't one program the model to do this concurrently with an infinite, or near infinite number of trees/experts on the back end and synthesize all answers down to 3 or 4 before returning any visible results? The only limitation should be computing power. Right?

Cost is another factor if you are using models from the OpenAI stable. As you ramp up the models you will - certainly for the type of problems under consideration in this video series - hit diminishing returns. But the point you make is a good one, this approach lends itself to concurrency. Thanks @HumanLiberty for your comment. Lucidate.

Okay now we grew from “decision-tree” method to the advanced “random forest” model!

Still looks like learning ML In backwards to me!

What’s Next!?

Reinventing GANs by integrating multiple models as corresponding Agents?

Then what?

Validating “Singularity” ??!

Ha! Good point! Certainly analogous superficially. But (sadly) I can't make the analogy work much further than that (perhaps you can?). The LLM techniques are unsupervised while RF ua-cam.com/video/jz1ZBTrIx5Y/v-deo.html and DT ua-cam.com/video/5swHVbJNWpw/v-deo.html supervised (so that doesn't work). RF is an ensemble method, as is FoT so that *definitely* works as an analogy (and a good one). But I can't think of any structural analogies at all. DT's use techniques like Gini impurity to make the most significant splits in the data, and RFs use bagging and bootstrapping. It would be really great in my consulting and teaching business if I could think of an analogy between what is going on in ToT/FoT and GI/Bagging/Bootstrapping. But I can't. If you can, please let me know. As far as Agents and GANs are concerned I think you may be on to something.

Hi everyone, try this scenario that GPT-4 created. Unfortunately Forest of Thoughts was not able to get the answer correct:

1. Penelope is attending a garden party. She arrives wearing a beautiful pendant necklace, her purse, and carrying a book.

2. She first stops at the buffet table, where she places her book down to fill her plate with food.

3. After eating, she goes to a bench in the garden, where she takes off her pendant necklace and places it on the bench next to her purse while she looks for her book in her purse.

4. Realizing she left her book at the buffet table, she leaves her necklace and purse on the bench and goes back to retrieve it.

5. She stops by the drinks table on her way back to the bench, puts her book down again, and takes a glass of lemonade.

6. When she finally returns to the bench, she puts her necklace back on, picks up her purse, and realizes she has misplaced her book again.

7. After the party, Penelope realizes she has lost her book.

Where is the single most likely location of the book? you can try it yourself the answer is After Penelope retrieved her book from the buffet table, she put it down again at the drinks table to take a glass of lemonade. It was not mentioned that she picked it up again from there. Therefore, the most probable location of the book would be the drinks table
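The reasoning behind the expected answer can be checked mechanically by replaying the scenario as a list of events and tracking where each item was last put down. The event list below is one reading of the seven steps, and the names `events` and `track` are illustrative assumptions.

```python
# Each event is (item, action, place). Items start out with Penelope.
events = [
    ("book", "put_down", "buffet table"),   # step 2
    ("necklace", "put_down", "bench"),      # step 3
    ("purse", "put_down", "bench"),         # step 3
    ("book", "pick_up", "buffet table"),    # step 4: she retrieves the book
    ("book", "put_down", "drinks table"),   # step 5
    ("necklace", "pick_up", "bench"),       # step 6
    ("purse", "pick_up", "bench"),          # step 6
]


def track(event_list):
    """Replay the events and return the last known location of every item."""
    location = {"book": "with Penelope", "necklace": "with Penelope", "purse": "with Penelope"}
    for item, action, place in event_list:
        location[item] = place if action == "put_down" else "with Penelope"
    return location


locations = track(events)
print(locations["book"])  # prints "drinks table"
```

The book is the only item that is put down without a later pick-up, which matches the stated answer.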

Here is the response I received from GPT-4 (v May 24) with a direct prompt (no CoT, no ToT, no FoT augmentation - it seems to have an "implicit" CoT but I'm not providing any "think about this step by step" augmentation - it is doing all of this itself.) "It seems Penelope has been quite absent-minded during the party, misplacing her book several times. Let's revise the sequence of events to see if we can figure out where she might have left it:

She arrived at the party with her book, a necklace, and a purse.

She placed her book down at the buffet table to fill her plate with food.

After eating, she moved to a bench, but her book was still at the buffet table. She didn't realize it yet.

She went back to the buffet table to retrieve her book, leaving her necklace and purse at the bench.

On her way back to the bench, she stopped by the drinks table and placed her book down again to take a glass of lemonade.

She went back to the bench without her book, put her necklace back on, and picked up her purse, realizing her book was missing again.

From this sequence, it seems that the last place Penelope left her book was at the drinks table when she stopped to get a glass of lemonade. Unless someone moved it, the book should still be there. It's possible that she overlooked it in her confusion or that it got obscured by other items on the table."

@@lucidateAI Yes, so GPT-4 got it right: she left the book at the drinks table. I have to tell you that I asked GPT-4 to improve on the prompt template and it did. With the improvements, your application of Forest of Thoughts was able to get it right. So this demonstrates how important prompts are to LLMs.

Completely agree.

it says page not found on github can you fix that? I really need the code. thanks for the hard work

Updated. With a bitly link. It appears that YT is truncating URLs greater than 160 characters in the description. Can you check and see if it is working now? You'll need to login to GH naturally.

@@lucidateAI still page not found 404

And you are logged into GitHub? Send me an email on info@lucidate.co.uk and then we can exchange screenshots and stuff. URL is github.com/mrspiggot/forestOfThoughts

@@lucidateAI Yes i will send you email with Screen shot And I'm also logged in inside github

I am getting the same 404 error🤔🤷🏼♂️

Wait, I just looked at your requirements file and that is a lot of dependencies, yikes.

Streamlit and Langchain will both load a lot of libraries. Also bear in mind that this is not a single app repo, so there are some redundant modules if you just wanted to build a single app. Lucidate