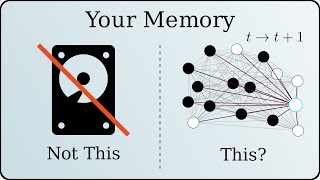

This neural network quickly forgets what it was previously trained on.

- Published Sep 28, 2024

- This is a visualization of a neural network periodically learning and forgetting information. The network is designed to learn 500 data points. Instead of training the network on all of these data points, or on a random sample of them for each network update, we train the network on a particular data point for some period of time and then stop training on that data point (and train on different data points) for long enough that the network forgets it before we resume training on it.

In this visualization, the loss levels for the data points that the network is currently training on are colored blue while the loss levels for all the other data points are colored red.

We observe that when the network is currently being trained on a particular data point, that particular data point has a low loss level, but the loss level for that data point quickly escalates as soon as we pause the training for that data point. We also observe that the norm of all the weight matrices and bias vectors for the network continually increases. We did not use any L1 or L2 regularization for this network, but such regularization would have decreased the norm of the network.
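As a rough illustration of the quantities mentioned above, here is a minimal sketch of a combined parameter norm and of what an L2 penalty does to it. It makes assumptions that are not in the description: the parameters are stored as a list of `(weight, bias)` pairs, the "norm" is the Euclidean norm of all entries taken together, and the names `total_norm`, `l2_shrink`, `learning_rate`, and `l2_strength` are hypothetical.

```python
import math


def total_norm(params):
    """Euclidean norm of every weight and bias entry, taken as one long vector."""
    total = 0.0
    for weight, bias in params:
        total += sum(w * w for row in weight for w in row)
        total += sum(b * b for b in bias)
    return math.sqrt(total)


def l2_shrink(params, learning_rate, l2_strength):
    """One gradient step on the penalty l2_strength * ||params||^2 alone.

    The gradient of that penalty is 2 * l2_strength * params, so the step
    multiplies every entry by (1 - 2 * learning_rate * l2_strength).
    """
    factor = 1.0 - 2.0 * learning_rate * l2_strength
    return [
        ([[factor * w for w in row] for row in weight], [factor * b for b in bias])
        for weight, bias in params
    ]
```

In a real training loop the penalty gradient would be added to the data-loss gradient, so the shrinkage competes with whatever growth the data loss induces.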

The neural network is of the form `Chain(SkipConnection(Dense(mn,mn,atan),+),SkipConnection(Dense(mn,mn,atan),+),SkipConnection(Dense(mn,mn,atan),+),SkipConnection(Dense(mn,mn,atan),+),SkipConnection(Dense(mn,mn,atan),+),Dense(mn,20))` where `mn=40`. The neural network N is trained to minimize the loss, where the loss for a training data point (x,y) is the squared norm of N(x)-y.
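The architecture and loss above can be sketched in plain Python. This is only an illustrative re-expression, not the code used for the video. It assumes the input x has dimension 40 (implied by the first `Dense(mn,mn,atan)` layer), that each skip connection adds the layer output to the layer input, and that the final layer has no activation. The helper names (`dense`, `init_zero_params`, `forward`, `squared_error`, `sample_point`) are hypothetical.

```python
import math
import random

MN = 40       # hidden width, "mn" in the description
OUT_DIM = 20  # output dimension of the final Dense layer
DEPTH = 5     # number of residual blocks


def dense(weight, bias, x, activation=None):
    """Compute activation(weight @ x + bias) for a single input vector x."""
    out = []
    for row, b in zip(weight, bias):
        z = sum(w * xi for w, xi in zip(row, x)) + b
        out.append(activation(z) if activation else z)
    return out


def init_zero_params():
    """Build the five residual blocks and the output layer with all entries zero."""
    blocks = [([[0.0] * MN for _ in range(MN)], [0.0] * MN) for _ in range(DEPTH)]
    head = ([[0.0] * MN for _ in range(OUT_DIM)], [0.0] * OUT_DIM)
    return blocks, head


def forward(params, x):
    """Apply the residual blocks in order, then the linear output layer."""
    blocks, head = params
    h = list(x)
    for weight, bias in blocks:
        # Skip connection with "+": add the atan layer's output to its input.
        update = dense(weight, bias, h, math.atan)
        h = [hi + ui for hi, ui in zip(h, update)]
    return dense(head[0], head[1], h)


def squared_error(params, x, y):
    """Squared Euclidean norm of N(x) - y for one data point."""
    prediction = forward(params, x)
    return sum((p - t) ** 2 for p, t in zip(prediction, y))


def sample_point(rng):
    """Draw x and y independently from a standard Gaussian distribution."""
    x = [rng.gauss(0.0, 1.0) for _ in range(MN)]
    y = [rng.gauss(0.0, 1.0) for _ in range(OUT_DIM)]
    return x, y
```

With every weight and bias equal to zero, the output is the zero vector for any input, so the loss of a data point at initialization is just the squared norm of its y.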

The training data consists of 500 data points. The training data points (x,y) are taken from a standard Gaussian distribution with x,y independent.

The neural network was initialized with all the weights set to zero. I initialized the weights to zero in part to demonstrate how the network increases in norm over time.

The blue interval, which consists of the points that the network is currently training on, shifts over time. I have programmed this interval to shift once the loss level of the blue interval goes below a specific value. This means that the rate at which the blue interval shifts is a measure of how quickly the network relearns the data points. We observe that as time goes on, the network needs more time to relearn the data points.
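The shifting rule described above might look roughly like the following sketch. The description only says that the interval shifts once its loss goes below a specific value, so everything else here is an assumption: the window width, the step size, the threshold value, the use of the mean loss over the window, and the wrap-around at the end of the data set. The function names are hypothetical.

```python
def window_indices(start, width, n_points):
    """Indices of the active ("blue") points, wrapping around past the last point."""
    return [(start + k) % n_points for k in range(width)]


def next_start(start, window_losses, threshold, step, n_points):
    """Move the window forward once the mean loss of the active points is below threshold."""
    mean_loss = sum(window_losses) / len(window_losses)
    if mean_loss < threshold:
        return (start + step) % n_points
    return start
```

For example, `window_indices(498, 4, 500)` gives `[498, 499, 0, 1]`, and the start index only advances when the active points have been fit well enough, so a slower-learning network produces a slower-moving window.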

The tendency for a neural network to forget what it was originally trained on when it is trained on a new task is known as catastrophic forgetting. While the term 'catastrophic forgetting' has negative connotations, the phenomenon of catastrophic forgetting is sometimes considered to be a positive characteristic of neural networks. Sometimes, we want neural networks to forget what they have been trained on.

I personally consider catastrophic forgetting to be an advantage for neural networks, but I also believe that we need to research networks that forget even more than our typical neural networks do. We need to design networks that completely forget everything after retraining in the sense that if we train a network twice with different initializations, then both times, the network would converge to the same trained model because it completely forgets its initialization (the networks that completely forget their initializations can be studied more mathematically). I have developed my own machine learning algorithms where no information about the initialization is left over once the model has been completely trained.

Unless otherwise stated, all algorithms featured on this channel are my own. You can go to github.com/spo... to support my research on machine learning algorithms. I am also available to consult on the use of safe and interpretable AI for your business. I am designing machine learning algorithms for AI safety such as LSRDRs. In particular, my algorithms are designed to be more predictable and understandable to humans than other machine learning algorithms, and my algorithms can be used to interpret more complex AI systems such as neural networks. With more understandable AI, we can ensure that AI systems will be used responsibly and that we will avoid catastrophic AI scenarios. There is currently nobody else who is working on LSRDRs, so your support will ensure a unique approach to AI safety.

I, too, am a neural network that easily forgets

As long as you don't forget your cryptocurrency private key or calculus. . . But in reality, humans can generally remember how to perform tasks after learning different things, so catastrophic forgetting does not happen with humans. I have not been playing the piano much recently (though I may have to play more piano to add music to my videos), but I have not forgotten how to play the piano at all nor have I forgotten specific pieces that I have gone over in the past.

I'm no neural network scientist but I wonder if it's possible to somehow create a neural network that specializes in long term memory. Like our long term memory has the advantage of being long term but it's difficult to change and slower to access compared to short term. Then somehow connect it to this neural network.

I am only vaguely acquainted with papers that deal with networks that try to ameliorate the problem of catastrophic forgetting in neural networks. But I barely consider catastrophic forgetting a problem since if one still has the training data, one may continually train the network on that data. The problem of catastrophic forgetting is an artificial problem since one has to remove the training data in order for the problem of catastrophic forgetting to be a legitimate problem. Neural networks need to forget a lot of information in their initializations. I actually believe that neural networks do not go far enough in forgetting; even though the network forgets the specific data points, the cosine similarity between the weight matrices of the network before and after retraining is very high which indicates that the network forgets how to perform tasks, but the network still retains its general structure.

I would be interested in constructing networks for which we can recover all the training data from the network without generating any examples that are not in the training data though, and such networks do not forget the training data.

It looks so tired

The network is getting tired because it is getting fat. As the network goes through the data over and over again, it increases the norm of its weight matrices and bias vectors to the point where it has more trouble learning. You can tell that this is the case because the network slows down over time and takes more time to cycle through the data. Regularization and zeroing out nodes would have helped with this.

This is cool though! Just wondering... How did you make this visualization? I'm just a normal person taking an interest in AI but don't know how to start... Any advice?

I make the visualization frame by frame and then I put all the frames together into an mp4 file. How to begin with AI depends on your math/CS background, amount of spare time you have, and level of interest in AI.

I would be curious to see how a network with dropout would compare :D

That is an interesting remark. Perhaps with dropout, the network is more robust against forgetfulness since it will have a lot of redundancy in order to memorize the data.

me too NN, me too

Fruits and vegetables would help though.

Is this Art?

This is not art. This is just a demonstration of the behavior of neural networks.

me during college

The solution is to take lots of math courses that build upon each other so that you don't forget. One needs calculus to compute the expected value and variance of a continuous random variable.

neural networkn't

The worst part is that if we take the cosine similarity between the weight matrices at different parts of the training, then the cosine similarity will be very high. The network does not change its structure during retraining, but it forgets how to do things. Neural knee twerk.
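For readers who want to try the comparison mentioned in this thread, here is a minimal sketch of a cosine similarity between two weight matrices. It assumes the matrices are stored as lists of rows and are compared as flattened vectors, which is one common convention and not necessarily the one used for the video. The function name is hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity of two same-shaped matrices, treated as flat vectors."""
    flat_a = [v for row in a for v in row]
    flat_b = [v for row in b for v in row]
    if len(flat_a) != len(flat_b):
        raise ValueError("matrices must have the same number of entries")
    dot = sum(p * q for p, q in zip(flat_a, flat_b))
    norm_a = math.sqrt(sum(p * p for p in flat_a))
    norm_b = math.sqrt(sum(q * q for q in flat_b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine similarity is undefined for a zero matrix")
    return dot / (norm_a * norm_b)
```

A value near 1 means the two snapshots point in nearly the same direction in weight space, even if their norms differ.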