Cos of matrix

- Published 7 Nov 2024

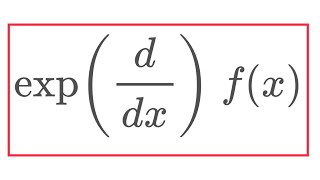

- In this video, I calculate the exponential of a matrix and other fun matrix functions, like the cosine or the sine of a matrix, and as an application, I solve systems of differential equations like x’ = Ax or x” + A^2 x = 0. This video illustrates how powerful power series actually are: they allow us to define matrix functions, which is usually a hard thing to do. And, of course, some diagonalization comes into play as well. Enjoy this beautiful mix of calculus, linear algebra, and differential equations
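A minimal sketch of the idea in the description, in plain Python: cos(A) is defined by its power series, and for a diagonalizable A it can equally be computed as P cos(D) P^-1. The matrix `A`, its eigenvector matrix `P`, and the helper names are assumptions chosen for illustration. They are not taken from the video.

```python
import math

def matmul(X, Y):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]

def cos_series(A, terms=30):
    """Approximate cos(A) = sum_k (-1)^k A^(2k) / (2k)! with a truncated series."""
    n = len(A)
    term = [[float(i == j) for j in range(n)] for i in range(n)]  # k = 0: identity
    total = [row[:] for row in term]
    A2 = matmul(A, A)
    for k in range(1, terms):
        # next term = previous term * (-A^2) / ((2k - 1) * (2k))
        term = [[-v / ((2 * k - 1) * (2 * k)) for v in row] for row in matmul(term, A2)]
        total = [[t + v for t, v in zip(tr, vr)] for tr, vr in zip(total, term)]
    return total

# Hypothetical example: A has eigenvalues 1 and 3, eigenvectors (1, -1) and (1, 1).
A = [[2.0, 1.0], [1.0, 2.0]]
P = [[1.0, 1.0], [-1.0, 1.0]]
P_inv = [[0.5, -0.5], [0.5, 0.5]]
cos_D = [[math.cos(1.0), 0.0], [0.0, math.cos(3.0)]]

cos_A_diag = matmul(matmul(P, cos_D), P_inv)  # P cos(D) P^-1
cos_A_series = cos_series(A)                  # direct power series
```

Both routes should agree up to rounding, which is the point of diagonalizing first: only the scalar cosines of the eigenvalues are needed.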

Why are your videos always the ones that break my notion of what math can be? I love it

I did a math degree, and didn't see this in either linear algebra or ODE courses. Great presentation.

hmm I'm doing a math degree and we're reading about this in the course "advanced linear algebra". The course is not compulsory but definitely of interest nonetheless!

Can also do the exponentiation e^iA, then take the real part.

Congrats on 15k.

Thank you!!!

Would it be the real part, or the Hermitian part?

Almost 70k now ~1 year later.

You should mention that A^n= P*D^n*P^-1 is especially useful when computers are finding the matrix exponential.
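To illustrate the identity A^n = P D^n P^-1 from this comment, here is a small sketch in plain Python. The matrix `A` and its eigenvector matrix `P` are hypothetical values chosen for the example.

```python
def matmul(X, Y):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]

# Hypothetical example: A = P D P^-1 with D = diag(1, 3).
A = [[2, 1], [1, 2]]
P = [[1, 1], [-1, 1]]
P_inv = [[0.5, -0.5], [0.5, 0.5]]

n = 10
D_n = [[1 ** n, 0], [0, 3 ** n]]            # powers of a diagonal matrix are entrywise
via_diag = matmul(matmul(P, D_n), P_inv)    # two multiplications, whatever n is

direct = [[1, 0], [0, 1]]
for _ in range(n):                          # n multiplications
    direct = matmul(direct, A)
```

The diagonal route needs only scalar powers of the eigenvalues plus two matrix products, while the direct loop grows with n.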

Next thing you know, we are going to be doing the Laplace transform of a matrix with respect to the golden ratio

Hahaha, great idea!!!

@drpeyam You are welcome. Just give me credit when you're solving the Riemann hypothesis

Lmaooooooooo

I’m so happy I found this channel. As a student studying maths, I love doing calculus and power series. Keep up the good work!

I recall this sort of thing from some of my physics coursework decades ago.

Before watching this, I'm 99% sure he's gonna use the Taylor series to define these - that gives you sums of integer powers of the (square) matrix, which are all perfectly easily defined. The only concern then, is convergence; and if that's good, actually finding the limit.

What we were shown in graduate mechanics, was that rotations in Euclidean 3-space (or any n-space, n ≥ 2) can be written as the exponential function of certain generating matrices (which we labeled J, each with two, unequal subscripts), which in turn, are antisymmetric. I seem to recall that this led to finding the properties of the Lie algebra of the generators, but I'd have to go back and review all that - I've forgotten how it goes.

Anyway, replacing the exponential function with any other function whose Taylor series is well-behaved enough, and replacing those antisymmetric generators with a more general square matrix, should be possible. The trick in carrying it out is in finding an expression for the general n'th power of the chosen matrix...

I see from the description that Dr. Peyam is going to use this to solve certain ODEs; apparently on vectors. Should be an interesting ride!

Let's see what he does...

NB: What he does to diagonalize A, is called a "similarity transformation." It is a quite powerful technique.

Fred
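The remark above about rotations being exponentials of antisymmetric generators can be checked numerically in the plane. This is a rough sketch in plain Python; the angle `theta` and the helper names are assumptions for illustration.

```python
import math

def matmul(X, Y):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]

def exp_series(A, terms=40):
    """Approximate exp(A) = sum_k A^k / k! with a truncated power series."""
    n = len(A)
    term = [[float(i == j) for j in range(n)] for i in range(n)]  # k = 0: identity
    total = [row[:] for row in term]
    for k in range(1, terms):
        term = [[v / k for v in row] for row in matmul(term, A)]  # A^k / k!
        total = [[t + v for t, v in zip(tr, vr)] for tr, vr in zip(total, term)]
    return total

theta = 0.7                                   # hypothetical rotation angle
theta_J = [[0.0, -theta], [theta, 0.0]]       # theta times the antisymmetric generator J
R = exp_series(theta_J)
expected = [[math.cos(theta), -math.sin(theta)],
            [math.sin(theta), math.cos(theta)]]
```

Because J^2 = -I, the series splits into cos(theta) I + sin(theta) J, which is exactly the 2D rotation matrix.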

We knew about cos(pi) and today learned about cos(matrix) or cos(m) in short. Now, what about cos(PI M)??

Pun intended :D

"It's not me being crazy, it has some really cool applications."

Loled that you had to clarify this. 😁

Even if it didn't have applications, it could have applications in the future

This video is very useful for statisticians (like me). For us, the big advantage is that we most often apply all these operations to symmetric matrices. Needless to say, beautiful things happen when matrices of that kind are considered.

I've been looking to learn something about matrix exponentials and before you know it, one of my favourite math channels uploads a video on that very topic. It doesn't get any better than that

This is the most underrated channel...

I loved it

Thank you very much. A source of motivation for Saturday morning. Have a good weekend.

Octonion power series next (they actually work because of power associativity!)

Your videos are interesting because you show new ideas for mathematics 🌹 I enjoy your videos

Thank you so much, sir!!! Beautifully explained.

Excellent video, one of my favorite topics explored in Linear Algebra

Amazing linear algebra application

Must A be square?

Super clear and informative, thank you

Thank you very much for explaining such an important concept!!!😀

I don't know what you're doing, but I love it.

But what about a matrix to the power of a matrix?

Thus begins the cycle: regular algebra but the variables are matrices, calculus but the variables are matrices, and linear algebra but the variables are matrices. Not mere vectors, but entire matrices as the objects of interest.

Dr. Peyam, I love you.

But I have a question about your videos related to matrices and the application of e or trigonometric identities.

I notice that you take a special example, a square matrix of order two, on which these relationships are carried out.

Is this correct?

Beautiful video

Fantastic video as always. I have one question though. What if A is not diagonalizable?

You use the definition of exp or cos as a power series, and the Jordan form
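A small sketch of that reply for the simplest non-diagonalizable case, a 2x2 Jordan block J = lam*I + N with N^2 = 0. The power series then collapses to exp(J) = e^lam (I + N). The value of `lam` and the helper names are assumptions for illustration.

```python
import math

def matmul(X, Y):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]

def exp_series(A, terms=40):
    """Approximate exp(A) = sum_k A^k / k! with a truncated power series."""
    n = len(A)
    term = [[float(i == j) for j in range(n)] for i in range(n)]  # k = 0: identity
    total = [row[:] for row in term]
    for k in range(1, terms):
        term = [[v / k for v in row] for row in matmul(term, A)]  # A^k / k!
        total = [[t + v for t, v in zip(tr, vr)] for tr, vr in zip(total, term)]
    return total

lam = 0.5                                    # hypothetical repeated eigenvalue
J = [[lam, 1.0], [0.0, lam]]                 # Jordan block, not diagonalizable
exp_J = exp_series(J)
closed_form = [[math.exp(lam), math.exp(lam)],
               [0.0, math.exp(lam)]]         # e^lam * (I + N)
```

The same splitting gives cos(J) = cos(lam) I - sin(lam) N, since every power of N beyond the first vanishes.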

Have you ever seen Floquet theory? It deals with the case where A = A(t), i.e. the system x' = A(t)x. The matrix A(t) does have to be periodic.

Congrats with 15000. Next milestone is e^π², please let us know when you pass it!

Congrats on 15k subs!

Won't you need the square root of a matrix for the second part? How do you do this?

Mindblowing thanks

Great video Dr. Peyam. Can you please also teach SVD and its applications?

You are amazing. Thank you. Taught me a lot here. Cool stuff.

Hair is looking wild like he just wrangled this math in from the number jungle

We can do a function of a matrix if it can be represented with a power series. We know that certain power series have a radius of convergence. You used the Maclaurin series in the video, but I assume that this can be generalized to Taylor series evaluated at an arbitrary point in the function's domain.

Is there a simple test to see if a matrix is "inside" the radius of convergence of the Maclaurin series without diagonalizing it?

If an eigenvalue is outside the radius of convergence for the Maclaurin series, can we use a Taylor series evaluated at a nearby point and get the function of that matrix? (E.g. suppose matrix A has a diagonal matrix of {{1,0},{0,3}}, and the Maclaurin series for arctan(x) has a radius of convergence of 1, so arctan(A) can't be found using the Maclaurin series.)

My question is, could you take the logarithm of a matrix, and if so, would the radius of convergence be a problem?

Yeah! Basically to get around this you’d require your eigenvalues to be less than 1 in absolute value

@drpeyam Wait, I thought the radius of convergence of ln(1+x) was 1, so the series for ln(x) would converge if and only if (x - Id) is bounded by 1 in absolute value, and not x itself.

Do you have a source for the "eigenvalues need to be less than 1 in absolute value" criterion?

@drpeyam Aaaah, I finally think I understand it. So the spectral radius of the matrix should be less than or equal to the radius of convergence?

PackSciences I meant ln(x+1)
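A rough sketch of this exchange in plain Python, assuming the series in question is ln(I + X) = X - X^2/2 + X^3/3 - ... Since any induced matrix norm bounds the spectral radius from above, checking that the maximum absolute row sum of X is below 1 is a sufficient (not necessary) convergence test that avoids diagonalizing. The matrix `X` and all helper names are hypothetical.

```python
import math

def matmul(X, Y):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]

def max_row_sum(X):
    """Induced infinity norm: an upper bound on the spectral radius."""
    return max(sum(abs(v) for v in row) for row in X)

def log1p_series(X, terms=80):
    """Approximate log(I + X) = sum_{k>=1} (-1)^(k+1) X^k / k (truncated)."""
    n = len(X)
    power = [[float(i == j) for j in range(n)] for i in range(n)]
    total = [[0.0] * n for _ in range(n)]
    for k in range(1, terms + 1):
        power = matmul(power, X)                      # X^k
        sign = 1.0 if k % 2 == 1 else -1.0
        total = [[t + sign * p / k for t, p in zip(tr, pr)]
                 for tr, pr in zip(total, power)]
    return total

X = [[0.2, 0.1], [0.0, 0.3]]        # hypothetical matrix, so I + X has eigenvalues 1.2, 1.3
converges = max_row_sum(X) < 1.0    # sufficient condition for the series to converge
L = log1p_series(X)
diag_expected = (math.log(1.2), math.log(1.3))  # X is triangular, so these sit on the diagonal
```

When the norm test fails, the series may still converge (the spectral radius can be smaller than the norm), so a failed check only means this cheap test is inconclusive.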

Soo helpful! Thank you so much 🥰

Can you use the complex definition of cosine here, plugging in iM to the matrix exponential?

Yep!

Nice video. When I studied electrical engineering during my first degree, I met such problems a couple of times, where systems of differential equations emerged naturally. It was not so easy to comprehend, since we learnt linear algebra only after that course :D Anyway, I can imagine that this sort of problem occurs in several areas of our natural and artificial world, since multivariable dynamical systems are quite common in our physical world (especially when we deal with state-space representations).

But could somebody tell me what to do when the matrix cannot be diagonalized? How can we calculate, e.g., the exponential of that matrix? Do we have to go through that long chain of matrix multiplications and additions?

Yeah, you then do it using the definition, but the Jordan form is useful in those cases

@drpeyam In that sense, is the diagonalizable case a special case of this Jordan form? So when a matrix is not perfectly diagonalizable, we try to diagonalize it as much as we can? :D I guess with this method we can significantly decrease the computational cost (depending on the structure of the actual matrix, of course).

Sir, can we expect a series dedicated to pure probability and statistics? It would be of great help.

Would the trigonometric identities such as sin(A+B), sin(2A), sin^2 + cos^2 = 1 and so on still hold in this trigonometric matrix realm?

Many of them do, but not all. For example, the formula for sin(A+B) does not always hold (it needs multiplication to commute).

Working through them is a kind of fun exercise in the properties of rings!

^ What he said! Even exp(A+B) = exp(A)exp(B) isn’t always true!
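The failure of exp(A+B) = exp(A)exp(B) for non-commuting matrices is easy to see numerically. Below is a minimal sketch in plain Python; the matrices `A` and `B` are hypothetical examples picked because AB differs from BA.

```python
def matmul(X, Y):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]

def exp_series(A, terms=40):
    """Approximate exp(A) = sum_k A^k / k! with a truncated power series."""
    n = len(A)
    term = [[float(i == j) for j in range(n)] for i in range(n)]  # k = 0: identity
    total = [row[:] for row in term]
    for k in range(1, terms):
        term = [[v / k for v in row] for row in matmul(term, A)]  # A^k / k!
        total = [[t + v for t, v in zip(tr, vr)] for tr, vr in zip(total, term)]
    return total

def add(X, Y):
    return [[x + y for x, y in zip(xr, yr)] for xr, yr in zip(X, Y)]

def max_gap(X, Y):
    """Largest entrywise difference between two matrices."""
    return max(abs(x - y) for xr, yr in zip(X, Y) for x, y in zip(xr, yr))

A = [[0.0, 1.0], [0.0, 0.0]]
B = [[0.0, 0.0], [1.0, 0.0]]        # AB != BA
C = [[0.0, 2.0], [0.0, 0.0]]        # C = 2A, so AC == CA

gap_noncommuting = max_gap(exp_series(add(A, B)), matmul(exp_series(A), exp_series(B)))
gap_commuting = max_gap(exp_series(add(A, C)), matmul(exp_series(A), exp_series(C)))
```

Here `gap_noncommuting` is clearly nonzero while `gap_commuting` is at rounding level, in line with the replies above.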

Love you Doc!

Is it a convention that the free parameter C is placed on the right rather than on the left, as it is for ordinary numbers? Or is it a strict rule that it must be on the right?

It’s a necessity because the parameter is a vector not a number

How does order in a matrix play out? For instance [a b , c d] versus [d a , b c]? Just wondering. Thanks!

It would give a different result since the eigenvalues and eigenvectors aren’t the same

Dr Peyam I mean in general mathematics not just this problem

At 7:48, isn't the cosine of zero equal to one, not zero?

I don't understand matrices, so I have no idea what's going on!

But it doesn't look too hard, so I'll come back and watch again

Let's get Dr.Peyam to 100k subs this year 😃

For LTI systems with multiple inputs and multiple outputs (MIMO), the exponential of a matrix is extremely useful.

State transition matrix 😊🤗

We actually did something similar in control theory, where differential equations are essential in the modelling of various systems.

Customer: Can I have a Coca-Cola?

Waiter: Is PeDpI okay?

Wow, If this isn't beautiful then I don't know what is...

loved it!!

At 8:05, shouldn't it be [cos 1, cos 0 = 1; cos 0 = 1, cos 4] instead of the two zeroes?

e^(diag(u_n)) = diag(e^(u_n))

Ioannis Karakoulias No, because when you calculate the exp(D) or cos(D) matrices, you actually calculate infinite sums (Taylor series). E.g. the N-th term of exp(D) is D^N/N!, which stays diagonal if D is diagonal (since the product of diagonal matrices is always diagonal). And if you sum up diagonal matrices (even infinitely many of them), you also end up with a diagonal matrix. So exp(D) = [exp(D_11) 0; 0 exp(D_22)], and not [exp(D_11) exp(0); exp(0) exp(D_22)]. Hope this helps.
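The difference between the matrix cosine and an entry-by-entry cosine can be seen in a short sketch in plain Python. The diagonal matrix `D` with entries 1 and 4 is an assumption suggested by the question above, not a value confirmed from the video.

```python
import math

def matmul(X, Y):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]

def cos_series(A, terms=30):
    """Approximate cos(A) = sum_k (-1)^k A^(2k) / (2k)! with a truncated series."""
    n = len(A)
    term = [[float(i == j) for j in range(n)] for i in range(n)]  # k = 0: identity
    total = [row[:] for row in term]
    A2 = matmul(A, A)
    for k in range(1, terms):
        term = [[-v / ((2 * k - 1) * (2 * k)) for v in row] for row in matmul(term, A2)]
        total = [[t + v for t, v in zip(tr, vr)] for tr, vr in zip(total, term)]
    return total

D = [[1.0, 0.0], [0.0, 4.0]]                        # hypothetical diagonal matrix
matrix_cos = cos_series(D)                          # off-diagonal entries stay 0
entrywise_cos = [[math.cos(v) for v in row] for row in D]  # off-diagonal entries are cos(0) = 1
```

Only `matrix_cos` is the function defined by the power series; `entrywise_cos` is a different object that happens to share the diagonal.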

But what is the geometric meaning of it?

cool video

BTW, (d/dt)^2(x) + a^2*x = 0 can be transformed to

dx/dt = v

dv/dt = -a^2*x

And there you can apply exp. The result will be the same, however :)
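A sketch of this first-order reduction for the scalar case, in plain Python. With state (x, v) the system matrix is M = [[0, 1], [-a^2, 0]], and exp(tM) should reproduce the familiar cos(at) and sin(at) solution. The values of `a`, `t` and the initial data are hypothetical.

```python
import math

def matmul(X, Y):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]

def exp_series(A, terms=40):
    """Approximate exp(A) = sum_k A^k / k! with a truncated power series."""
    n = len(A)
    term = [[float(i == j) for j in range(n)] for i in range(n)]  # k = 0: identity
    total = [row[:] for row in term]
    for k in range(1, terms):
        term = [[v / k for v in row] for row in matmul(term, A)]  # A^k / k!
        total = [[t + v for t, v in zip(tr, vr)] for tr, vr in zip(total, term)]
    return total

a, t = 2.0, 0.3                               # hypothetical frequency and time
tM = [[0.0, t], [-a * a * t, 0.0]]            # t * [[0, 1], [-a^2, 0]]
Phi = exp_series(tM)                          # maps (x(0), v(0)) to (x(t), v(t))

x0, v0 = 1.0, 0.5                             # hypothetical initial data
x_t = Phi[0][0] * x0 + Phi[0][1] * v0
x_exact = math.cos(a * t) * x0 + math.sin(a * t) / a * v0
```

Since M^2 = -a^2 I, the exponential equals cos(at) I + (sin(at)/a) M, so both approaches give the same x(t).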

So how do you diagonalize a matrix?

There’s a playlist on that (Eigenvalues and Eigenvectors)

@drpeyam Thank you.

i was missing it in my ODE course

Can you teach Galois theory?

Don’t know any, sorry

Dr. Peyam's Show No worries, doctor. I’m assuming you teach the analysis side of things, right? It would be great if you could make a video on meromorphic functions! Or maybe prove the Cauchy integral formula! That would be great

Hazza There will be a video on Cauchy’s Integral formula :)

Dr. Peyam's Show Thank you, Dr. Peyam

What if we got x' = Ax but the matrix A is a variable of t? I ran into an integral of a matrix lol

That’s ok, I think an integral of a matrix is just the integral of every component

Matrix P is incorrect. P = [sqrt(0.5), sqrt(0.2); sqrt(0.5), sqrt(0.8)].

The basic idea is to express the cosine as cos(A) = (exp(iA) + exp(-iA))/2, where i = sqrt(-1).
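A minimal sketch of that formula using Python's built-in complex numbers. For a real matrix A, exp(iA) = cos(A) + i sin(A) with real cos(A) and sin(A), so the entrywise real part of exp(iA) gives the same answer. The matrix `A` and the helper names are hypothetical.

```python
import math

def matmul(X, Y):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]

def exp_series(A, terms=40):
    """Approximate exp(A) = sum_k A^k / k! (works for complex entries too)."""
    n = len(A)
    term = [[complex(i == j) for j in range(n)] for i in range(n)]  # k = 0: identity
    total = [row[:] for row in term]
    for k in range(1, terms):
        term = [[v / k for v in row] for row in matmul(term, A)]    # A^k / k!
        total = [[t + v for t, v in zip(tr, vr)] for tr, vr in zip(total, term)]
    return total

A = [[2.0, 1.0], [1.0, 2.0]]                       # hypothetical real matrix, eigenvalues 1 and 3
E_plus = exp_series([[1j * v for v in row] for row in A])    # exp(iA)
E_minus = exp_series([[-1j * v for v in row] for row in A])  # exp(-iA)

cos_A = [[(p + m) / 2 for p, m in zip(pr, mr)] for pr, mr in zip(E_plus, E_minus)]
real_part = [[p.real for p in row] for row in E_plus]        # valid shortcut for real A

c1, c3 = math.cos(1.0), math.cos(3.0)
expected = [[0.5 * (c1 + c3), 0.5 * (c3 - c1)],
            [0.5 * (c3 - c1), 0.5 * (c1 + c3)]]    # P cos(D) P^-1 worked out by hand
```

For a complex A the real-part shortcut no longer applies, and the symmetric combination (exp(iA) + exp(-iA))/2 is the one to use.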

We know you're crazy...despite the cool applications

Hahahaha

Sin(i)

f

What about SVD? That's what is really going places

?

@drpeyam For the matrix which cannot be diagonalized

Great presentation. Too bad you stood in FRONT of the camera.

Thx

We love you very much

Matrix to a Matrix?

Great idea, will look into it!

Not impressive. He goes to all the trouble of preparing a YouTube video but needs a piece of paper to remind himself of the steps. He presents the whole thing on a whiteboard with such limited space that he needs to keep erasing previous steps. The mathematics is good but the presentation is not.