Language Processing with BERT: The 3 Minute Intro (Deep learning for NLP)

- Published 5 Jun 2024

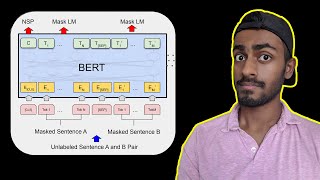

- Since its introduction in 2018, the BERT machine learning model has continued to perform well on many language tasks. This video is a gentle introduction to some of the tasks that BERT can handle (in search engines, for example). The first 3 minutes go over some of its applications. Then the video discusses how the model works at a high level, and how you might use it to build a semantic search engine that is sensitive to the meanings of queries and results.

Introduction (0:00)

You have used BERT (applications) (0:25)

How BERT works (2:52)

Building a search engine (4:30)

------

The Illustrated BERT

jalammar.github.io/illustrated...

BERT Paper:

www.aclweb.org/anthology/N19-...

Understanding searches better than ever before

blog.google/products/search/s...

Google: BERT now used on almost every English query

searchengineland.com/google-b...

------

Twitter: / jayalammar

Blog: jalammar.github.io/

Mailing List: jayalammar.substack.com/

More videos by Jay:

Explainable AI Cheat Sheet - Five Key Categories

• Explainable AI Cheat S...

The Narrated Transformer Language Model

• The Narrated Transform...

Jay's Visual Intro to AI

• Jay's Visual Intro to AI

How GPT-3 Works - Easily Explained with Animations

• How GPT3 Works - Easil...

Although we mainly see decoder-only models nowadays with LLMs, this is still super helpful, thanks Jay!

Your videos are so enjoyable! Thanks Jay for this wonderful intro to BERT.

Thank you Jay.

This is an excellent starting point for a brief understanding of BERT.

Excellent video Jay! You are a natural educator.

Great Explanation Jay!! Truly an awesome one!!

I admire you. You are the best. I always feel I learn something hard in an easy way.

Awesome Video... I love how you explain things... Hats off 👍👍

It's a pleasure to watch your video. God bless you.

Thanks for the introduction!

You explain very well. Thanks.

Great video. I hope you make videos on practical implementations of BERT soon.

Great. You're so nice. Well explained. So easy to understand.

Great video. Thanks

Too good. Thanks

Oh man! You are the best!

wonderful video....

Great video, thanks! Do you have any idea which similarity score tends to perform best when computing similarities between sentence embeddings? I suppose cosine similarity, but are there more exotic ones that you can recommend?
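For readers with the same question, here is a minimal sketch of three common ways to compare embedding vectors: dot product, cosine similarity, and Euclidean distance. It does not say which one works best for a given model; that depends on how the embeddings were trained. The vectors and the names `query`, `docs`, and `rank_by_cosine` are made up for illustration, and real BERT embeddings have 768 or more dimensions.

```python
import math

def dot(a, b):
    """Dot product: grows with both alignment and vector length."""
    return sum(x * y for x, y in zip(a, b))

def cosine_similarity(a, b):
    """Dot product of the two vectors after scaling each to unit length."""
    return dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))

def euclidean_distance(a, b):
    """Straight-line distance; smaller means more similar."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def rank_by_cosine(query, docs):
    """Return document names sorted from most to least similar to the query."""
    return sorted(
        docs,
        key=lambda name: cosine_similarity(query, docs[name]),
        reverse=True,
    )

# Toy 3-dimensional "embeddings" (hypothetical values, not model output).
query = [1.0, 0.0, 1.0]
docs = {
    "doc_a": [2.0, 0.0, 2.0],  # same direction as the query, longer vector
    "doc_b": [1.0, 1.0, 0.0],  # partly aligned with the query
    "doc_c": [0.0, 1.0, 0.0],  # orthogonal to the query
}

ranking = rank_by_cosine(query, docs)
print(ranking)
```

Note that `doc_a` points in the same direction as the query but is twice as long: cosine similarity ignores that length difference, while dot product and Euclidean distance do not. If all vectors are normalized to unit length first, the three measures produce the same ranking.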

Can I ask you which program you use to make your graphics? They look nice!

Edit: I found out it's keynote! (In case anyone was wondering).

In that case, would you be willing to make a video on how you make your blog posts? They are amazing and you have a real gift at explaining concepts! It's a bit meta, but I think it would be really interesting.

Thank you! I did indeed. It's a video on this channel.

@arp_ai Thank you! That's very kind of you!

thanks

Great! Are you going to explain coding for BERT?

Great!

I have a question: why should you only look at the first column?

It was part of the training process. BERT is trained on two tasks. One of them uses the first column in a way that leads the model to encode the sentence in that column.

I got lost when you said at 4:34 that the article is represented as a vector or vectors. How does it create only one or three vectors from an entire article?

Awesome. Please make more videos and blog posts on new algorithms. How does BERT create a vector of size 768? I have a question about that.

Yeah, it creates a vector of size 768 for each token (word piece), as Jay showed in the video. There are different BERT models; some, such as BERT-large, generate vectors of size 1024.

How are the other embeddings created from the search at 5:30?

4:21 How does the [CLS] token become a representation of the entire sentence? And what about the other word embeddings?

It's one way to get a sentence embedding. I believe in BERT it becomes that way because BERT is trained on two objectives: 1) Masked Language Modeling (where the model is trained to predict words that we hid from the input text) and 2) Next Sentence Prediction (where the [CLS] token embedding is used to classify whether or not the second sentence follows the first). While learning task #2, it associates [CLS] with the sentence contents.

Later work such as SBERT, however, shows that average pooling over all the tokens provides a better sentence embedding (especially after contrastive training for sentence embeddings).
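The two pooling strategies mentioned in this reply can be sketched on toy data as follows. This is only an illustration under stated assumptions: the token vectors are invented 2-dimensional values standing in for real 768-dimensional model output, and `cls_pooling`, `mean_pooling`, and `attention_mask` are illustrative names, not the API of any particular library.

```python
def cls_pooling(token_vectors):
    """Use the vector at the first position ([CLS]) as the sentence embedding."""
    return list(token_vectors[0])

def mean_pooling(token_vectors, attention_mask):
    """Average the vectors at non-padding positions (mask value 1)."""
    dim = len(token_vectors[0])
    total = [0.0] * dim
    count = 0
    for vector, keep in zip(token_vectors, attention_mask):
        if keep:
            count += 1
            for i, value in enumerate(vector):
                total[i] += value
    if count == 0:
        raise ValueError("attention_mask selects no tokens")
    return [t / count for t in total]

# Hypothetical per-token output for one padded sentence (4 positions, 2 dims).
token_vectors = [
    [1.0, 0.0],  # [CLS]
    [3.0, 2.0],  # "hello"
    [2.0, 4.0],  # [SEP]
    [9.0, 9.0],  # [PAD], must not influence the average
]
attention_mask = [1, 1, 1, 0]

cls_vec = cls_pooling(token_vectors)
mean_vec = mean_pooling(token_vectors, attention_mask)
print(cls_vec, mean_vec)
```

Either function turns a variable-length list of token vectors into one fixed-size vector, which is what a search index needs. The mask matters for mean pooling: without it, padding positions would drag the average toward whatever values the model emits for `[PAD]`.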

I'm betting even BERT finds listening to your voice tedious with all of the high frequencies CUT OFF. I don't know who started this trend, and I don't care. It's moronic and it sounds AWFUL. Human speech has most of its differentiation around 4 kHz. When you cut most of that band out, it's harder to understand and tiring to listen to. STOP already. Between that and half the video being taken up by 'Who has used BERT'... Sorry, downvoted.