Generalized and Incremental Few-Shot Learning by Explicit Learning & Calibration without Forgetting


- Published 3 Jun 2024

- Link to the paper: arxiv.org/pdf/2108.08165.pdf

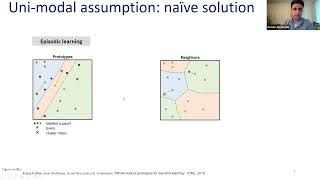

1) What is few-shot learning?

2) What is generalized few-shot learning?

3) What are the difficulties?

4) How does our framework address these difficulties?

5) How does the framework extend to incremental learning?

Paper abstract: Both generalized and incremental few-shot learning have to deal with three major challenges: learning novel classes from only a few samples per class, preventing catastrophic forgetting of base classes, and classifier calibration across novel and base classes. In this work, we propose a three-stage framework that allows us to explicitly and effectively address these challenges. While the first phase learns base classes with many samples, the second phase learns a calibrated classifier for novel classes from a few samples while also preventing catastrophic forgetting. In the final phase, calibration is achieved across all classes. We evaluate the proposed framework on four challenging benchmark datasets for image and video few-shot classification and obtain state-of-the-art results for both generalized and incremental few-shot learning.
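The abstract names the three challenges only at a high level. To make them concrete, here is a minimal, hypothetical Python sketch of a generalized few-shot classifier. It is not the paper's method. Base classes keep fixed weights (a stand-in for the first phase). Novel-class weights are "imprinted" as the mean of a few support features, a standard baseline substituted here for the second phase. A single offset added to novel-class logits is then tuned on held-out data to balance base and novel accuracy, a simple stand-in for the final calibration phase. All function names, the cosine scale of 10, and the harmonic-mean selection criterion are assumptions for illustration.

```python
import math
from typing import Dict, List, Sequence, Tuple

Vector = List[float]


def normalize(v: Sequence[float]) -> Vector:
    """Scale a non-zero vector to unit L2 norm."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def cosine_logits(feature: Sequence[float], weights: Sequence[Sequence[float]],
                  scale: float = 10.0) -> Vector:
    """Scaled cosine similarity between one feature and every class weight."""
    f = normalize(feature)
    return [scale * sum(a * b for a, b in zip(f, normalize(w))) for w in weights]


def imprint_novel_weights(support: Dict[int, List[Vector]]) -> List[Vector]:
    """Hypothetical stand-in for phase two: each novel weight is the normalized
    mean of that class's few normalized support features (weight imprinting).
    Classes are ordered by id; every class needs at least one feature."""
    weights = []
    for cls in sorted(support):
        normed = [normalize(x) for x in support[cls]]
        mean = [sum(col) / len(normed) for col in zip(*normed)]
        weights.append(normalize(mean))
    return weights


def predict(feature: Sequence[float], base_w: List[Vector], novel_w: List[Vector],
            novel_bias: float = 0.0) -> int:
    """Classify over the joint label space: ids 0..B-1 are base classes and
    ids B.. are novel classes. Base weights are never modified here, so this
    toy model cannot forget base classes by construction."""
    logits = cosine_logits(feature, base_w)
    logits += [z + novel_bias for z in cosine_logits(feature, novel_w)]
    return max(range(len(logits)), key=logits.__getitem__)


def calibrate_novel_bias(val: List[Tuple[Vector, int]], base_w: List[Vector],
                         novel_w: List[Vector], candidates: Sequence[float]) -> float:
    """Hypothetical stand-in for phase three: pick the offset added to novel
    logits that maximizes the harmonic mean of base and novel accuracy on
    held-out data. Assumes `val` has at least one base and one novel example."""
    num_base = len(base_w)

    def harmonic_mean(bias: float) -> float:
        correct = {False: 0, True: 0}  # key: is the true label a novel class?
        total = {False: 0, True: 0}
        for x, y in val:
            is_novel = y >= num_base
            correct[is_novel] += int(predict(x, base_w, novel_w, bias) == y)
            total[is_novel] += 1
        base_acc = correct[False] / total[False]
        novel_acc = correct[True] / total[True]
        if base_acc + novel_acc == 0:
            return 0.0
        return 2 * base_acc * novel_acc / (base_acc + novel_acc)

    # max() returns the first candidate among ties.
    return max(candidates, key=harmonic_mean)


if __name__ == "__main__":
    base_w = [[1.0, 0.0], [0.0, 1.0]]  # two base classes, ids 0 and 1
    novel_w = imprint_novel_weights({2: [[0.9, 1.0], [1.0, 0.9]]})  # one novel class, two shots
    val = [([1.0, 0.1], 0), ([0.1, 1.0], 1), ([0.7, 0.72], 2)]
    bias = calibrate_novel_bias(val, base_w, novel_w, [-3.0, 0.0, 3.0])
    print(bias, predict([0.7, 0.72], base_w, novel_w, bias))  # prints: 0.0 2
```

In this toy setup, a large negative offset pushes novel queries into base classes and a large positive one does the reverse, which is the calibration trade-off the abstract refers to. Cosine logits are used so that base and novel scores share a comparable scale; the paper's own calibration procedure may differ.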

Speaker Bio:

Anna Kukleva is a research intern at Facebook and a Ph.D. student at the Max Planck Institute for Informatics. She received her MSc in Computer Science from the University of Bonn, Germany, and her BSc in Computer Science from Lomonosov Moscow State University, Russia. She was previously a research intern at Inria Paris in the Willow team, working with Ivan Laptev and Makarand Tapaswi, and a research assistant at the University of Bonn, working with Hilde Kuehne and Juergen Gall.

Social links:

Twitter: @anna_kukleva_

GitHub: @Annusha