Це відео не доступне.

Перепрошуємо.

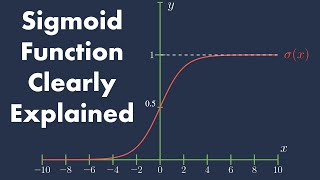

Logistic Regression Part 3 | Sigmoid Function | 100 Days of ML

- Published 15 Aug 2024

- Explore the essential Sigmoid Function in the context of Logistic Regression. In this video, we'll break down the role and significance of the Sigmoid function, shedding light on how it transforms input into probabilities.
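As a rough illustration of the description's point (a real-valued input becomes a probability), here is a minimal Python sketch of the logistic sigmoid, $\sigma(z) = 1 / (1 + e^{-z})$. The function name and the sample scores are illustrative assumptions; this is not the code linked below.

```python
import math

def sigmoid(z: float) -> float:
    """Logistic sigmoid: maps any real z into the open interval (0, 1)."""
    # Numerically stable form: avoids overflow in exp() for large |z|.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

# A few raw scores and the probabilities the sigmoid assigns to them.
for z in (-10.0, -2.0, 0.0, 2.0, 10.0):
    print(f"z = {z:6.1f}  ->  sigmoid(z) = {sigmoid(z):.4f}")
```

A score of exactly 0 maps to 0.5, large positive scores approach 1, and large negative scores approach 0, which is why the output can be read as a probability.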

Code used: github.com/cam...

============================

Do you want to learn from me?

Check out my affordable mentorship program at: learnwith.camp...

============================

📱 Grow with us:

CampusX's LinkedIn: / campusx-official

CampusX on Instagram for daily tips: / campusx.official

My LinkedIn: / nitish-singh-03412789

Discord: / discord

E-mail us at support@campusx.in

⌚Time Stamps⌚

00:00 - Intro

00:17 - Problem with perceptron

02:00 - The Solution

08:33 - Understanding the equation

15:55 - Sigmoid Function

27:02 - Impact of Sigmoid Function

38:20 - Code Demo


Nitish Sir, you're among the rare breed of educators who have the gift of instilling intuition in their learners.

Right

A truly amazing teacher is hard to find, difficult to part with, and impossible to forget. Thank you, sir.

You removed my fear of logistic regression. India needs more teachers like you. Amazing, as always.

You are the best teacher in the field of Data Science, sir.

I never knew this is how Logistic Regression works. I only knew that we use the sigmoid, but what actually goes into the sigmoid is clear now.

You're awesome man

Even after knowing Logistic Regression for more than 2 years, today I felt as if I were learning a new algorithm. No one anywhere on the internet has taught it this well 👌👌👌

Nitish, I cannot describe it in words, man. I learnt the sigmoid function from Andrew Ng's video, and I had so many confusions.

Your explanation with the perceptron, and of why the sigmoid function can work better, was fricking amazing, man. This is so intuitive. Thanks a ton!

I totally agree with you.

Bro, if all we need is a continuous function, then we could (take dot(w.x)>0 as y=1) or (dot (w.x)

@abhishektehlan7814 Perhaps for normalization.

@abhishektehlan7814 We didn't use "dot(w.x)" directly because it can be a very large number. When computing y - y_hat, the difference would then be huge and the model would never converge. So, to squeeze that large number into the range between 0 and 1, we use the sigmoid.
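To make the reply's argument concrete, here is a minimal sketch assuming the error term is y - ŷ, as in the perceptron-style update discussed in the video. It compares using the raw score z = w·x as ŷ against using sigmoid(z). The scores are made up for illustration.

```python
import math

def sigmoid(z: float) -> float:
    # Simple form; safe here because only non-negative z values are used.
    return 1.0 / (1.0 + math.exp(-z))

y_true = 0  # the point actually belongs to class 0
# Raw scores z = w.x for points increasingly far on the wrong (positive) side.
for z in (1.0, 10.0, 100.0, 1000.0):
    raw_error = y_true - z                  # unbounded: grows with |z|
    squashed_error = y_true - sigmoid(z)    # bounded: always between -1 and 1
    print(f"z={z:7.1f}  y - z = {raw_error:8.1f}   y - sigmoid(z) = {squashed_error:.4f}")
```

With the raw score, the correction grows without limit as the point moves farther away; with the sigmoid, its magnitude never exceeds 1.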

In the update for logistic regression, we should also note that the magnitude of X plays an important role. A correctly classified positive point near the line pushes more aggressively because of its lower sigmoid value (higher 1 - sigmoid) than a correctly classified positive point far from the line. But since the coordinates (X) of the point near the line might be smaller than those of the far point, the push might get diluted.
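The comment's point can be checked numerically. The sketch below assumes the per-point update w ← w + η(y - σ(w·x))x with no bias term. The weights and the two points are hypothetical, chosen so that the near point has small coordinates.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def update_step(w, x, y, lr=1.0):
    """Return (y - sigmoid(w.x), lr * (y - sigmoid(w.x)) * x) for one point, no bias."""
    z = sum(wi * xi for wi, xi in zip(w, x))
    factor = y - sigmoid(z)
    return factor, [lr * factor * xi for xi in x]

w = [1.0, 1.0]        # hypothetical current weights
near = [0.05, 0.0]    # correctly classified positive point, close to the line, small coordinates
far = [2.0, 1.0]      # correctly classified positive point, farther away, larger coordinates

for name, x in (("near", near), ("far", far)):
    factor, delta = update_step(w, x, y=1)
    norm = math.hypot(*delta)
    print(f"{name:4s}: y - sigmoid(z) = {factor:.4f}, |delta w| = {norm:.4f}")
```

Here the near point has the larger scalar factor (1 - sigmoid), but the far point produces the larger weight change because its coordinates are bigger. This is the dilution the comment describes.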

A true teacher, and a motivation for students to learn from scratch.

Sir, you have done a lot of research for these videos and put in a lot of effort to simplify them. I really appreciate the effort you have put into making this logistic regression playlist.

Thanks Nitish for making this concept so crystal clear. The knowledge you are providing through this youtube channel is incredible. I can't thank you enough.

Too good, sir, a really great explanation.

One of the most exceptional tutors I have ever seen. Kudos to your knowledge and methodology.

You alone are much, much better than all those big institutes on YouTube. Please, please, please make more.

Today I understood the logic behind the sigmoid. One of the best teachers in the world.

crystal clear SIMPLY AMAZING

This playlist for machine learning is the best ..........like the best....

Amazing explanation, Sir, your content is top-notch. Your views should be in the millions.

Nice explanation: very in-depth, clean, and easy to understand. Thanks a lot, sir.

Good work, sir. You are the only one who gave a clear intuition of this algorithm. Thank you so much, and keep it up; you are doing great work. 👌👌

Can you please upload intuition videos like this for other algorithms, such as SVM, k-means, etc.? It would be very helpful.

Wow, the most awesome video I have ever seen for machine learning. Really really helpful.

I saw many videos on YouTube about this algorithm, but none of them made it clear. Sir, you explained the concept very well. Nice work, sir, keep it up!

Mind-blowing explanation, sir!! Thanks a lot!!

Top-notch explanation! It feels like we are watching an MLM (Machine Learning Movie) 👍

Long live the EDUCATOR and long live CampusX❤

Best teacher on this earth!

Give this guy a medal.

Superb! Lots of respect from Pakistan.

Thank you so much

you are amazing with your teaching skills

sir your way of teaching is brilliant

You are awesome, sir. You made it look very intuitive and easy. HATS OFF TO YOU.

Thank you sir

superb explanation, thank you so much for all your efforts 🙏

Sir, you should write a book and I will be first to buy it. Your way of teaching is irreplaceable.

Thanks for the clear intuition of why we should use the sigmoid function :)

Bro, if all we need is a continuous function, then we could (take dot(w.x)>0 as y=1) or (dot (w.x)

No matter which video I try on YouTube, in the end this is the one that makes me understand.

This channel is gold

Thanks

You should upload the OneNote PDFs too. Apart from that, Nitish sir is beyond any MIT or IIT professor.

Very well explained, sir. Hats off to you.

Thank you for giving these wonderful lectures, sir.

That is an excellent explanation. However, the sudden switch from computing ŷ and sigmoid(ŷ) at 29:13 to computing the distance and sigmoid(distance) at 35:25 surprised me. I mean, you were calculating $w_n = w_0 + \eta\,(y - \sigma(\hat{y}))\,x_i$ and then suddenly changed to $w_n = w_0 + \eta\,(y - \sigma(d))\,x_i$, where $d$ is the distance to the line.
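It may help to note a geometric fact here. For a line $w_0 + w_1 x_1 + w_2 x_2 = 0$, the score $z = w_0 + w_1 x_1 + w_2 x_2$ equals the signed perpendicular distance to the line multiplied by $\lVert (w_1, w_2) \rVert$. So "z" and "distance to the line" differ only by a positive scale factor: they have the same sign and the same ordering across points, although σ(z) and σ(distance) are not numerically identical. The sketch below uses a made-up line; the weights are hypothetical.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical line: w0 + w1*x1 + w2*x2 = 0
w0, w1, w2 = -1.0, 2.0, 1.0
norm_w = math.hypot(w1, w2)   # length of the normal vector (w1, w2)

points = [(0.0, 0.0), (0.5, 0.0), (1.0, 1.0), (3.0, 2.0)]
for x1, x2 in points:
    z = w0 + w1 * x1 + w2 * x2    # raw score fed to the sigmoid
    distance = z / norm_w         # signed perpendicular distance to the line
    print(f"({x1}, {x2}): z = {z:5.2f}, distance = {distance:5.2f}, "
          f"sigmoid(z) = {sigmoid(z):.3f}")
```

The point (0.5, 0.0) lies exactly on the line, so both its score and its distance are 0.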

Really enjoyed it, funnnn!!!

Thank you sir for this wonderful lecture ☺️

God level explanation bro 🔥

Superb explanation👏💯

Great explanation Nitish

This knowledge is incredible ❤️

Thanks sir, you are great.

clearly understood ❤️👍

Thank you sir 🙏

you are great

BRO, THE BEST

Awesome

Why do we need $y_i - \hat{y}_i$ to be zero? (at 14:41)
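One way to read this question is through the usual perceptron trick. Assume the update w ← w + η(y - ŷ)x with a step activation, so ŷ is 0 or 1. For a correctly classified point, y - ŷ is 0, so that point contributes no update at all. The sketch below uses hypothetical weights and points, with a leading 1 in each x for the bias.

```python
def step(z: float) -> int:
    """Perceptron activation: 1 if z >= 0, else 0."""
    return 1 if z >= 0 else 0

def perceptron_update(w, x, y, lr=0.1):
    """One perceptron-trick update: w <- w + lr * (y - y_hat) * x."""
    z = sum(wi * xi for wi, xi in zip(w, x))
    y_hat = step(z)
    return [wi + lr * (y - y_hat) * xi for wi, xi in zip(w, x)]

w = [0.5, 1.0, -1.0]        # hypothetical [w0, w1, w2]
correct = [1.0, 2.0, 1.0]   # z = 1.5  -> y_hat = 1, true y = 1
wrong = [1.0, 0.0, 2.0]     # z = -1.5 -> y_hat = 0, true y = 1

print(perceptron_update(w, correct, y=1))  # y - y_hat = 0: weights unchanged
print(perceptron_update(w, wrong, y=1))    # y - y_hat = 1: weights move toward the point
```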

Sir, the volume in the video is very low, but the explanation is very good.

Sir, can you please explain what the logit function is?
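For reference, the logit function is the inverse of the sigmoid: $\operatorname{logit}(p) = \ln\frac{p}{1-p}$, the log-odds of a probability $p$. Because logistic regression computes $p = \sigma(z)$ with a linear score $z$, that score is exactly the logit of the predicted probability. This is a minimal sketch; the function names are illustrative.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def logit(p: float) -> float:
    """Log-odds of a probability p in (0, 1): log(p / (1 - p))."""
    if not 0.0 < p < 1.0:
        raise ValueError("p must lie strictly between 0 and 1")
    return math.log(p / (1.0 - p))

# Going score -> probability -> logit recovers the original score.
for z in (-3.0, 0.0, 1.5):
    p = sigmoid(z)
    print(f"z = {z:4.1f} -> p = {p:.4f} -> logit(p) = {logit(p):.4f}")
```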

38:07, for the last two remaining points:

An incorrectly classified point on the positive side that is farther away has a larger value of its y_hat subtracted from the weights, which results in a lower pulling intensity than for a point that is also incorrectly classified but closer to the line, which has a smaller subtraction and a greater pulling intensity.

Here, I think, the logic fails, because initially you said that an incorrectly classified point close to the line has a low pulling intensity, and vice versa.
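To check this argument numerically, here is a minimal sketch assuming the update w ← w + η(y - σ(z))x with made-up scores. It prints the scalar factor y - σ(z) for a misclassified class-0 point at increasing depth on the positive side. Under this rule, the factor's magnitude grows as the point moves farther onto the wrong side. The factor alone ignores the size of x, which also scales the step.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

# True label is 0, but z > 0 puts the point on the positive side: misclassified.
# Larger z means farther from the line on the wrong side.
y_true = 0
for z in (0.1, 1.0, 3.0, 6.0):
    factor = y_true - sigmoid(z)   # scalar multiplying x in w <- w + lr*(y - sigmoid(z))*x
    print(f"z = {z:3.1f}: y - sigmoid(z) = {factor:+.4f}, |factor| = {abs(factor):.4f}")
```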

How are the w1, w2 values obtained?? (14:19)

Sir, can you provide the graph images used in the explanation?

Sir, if all we need is a continuous function, then we could (take dot(w.x)>0 as y=1) or (dot (w.x)

Hey bro, I believe the sigmoid function makes it easy to create a boundary, and it is easier to explain and optimize.

Sir, do you have paid courses on machine learning? Please send the link...

To Nitish sir and to anyone reading this: how do we know that these values of w0, w1, w2 will be suitable for calculating the z value???

As sir explained in the previous videos, we start with random values of w0, w1, w2, w3, ..., wn.

This w is our model's w, which we try to correct as much as possible by iterating; in this case, by improving the values of the weights [w] through pulling and pushing based on either the correct points or the incorrect points!!

Hope this helps !

@rushikesh8132 Got it, brother. Thank you for your help. So I'm left with 2 questions; if it's okay, please answer them too:

1. We have to calculate Z for every point, right?

2. Suppose for a point we took random values of w0, w1, w2, calculated its Z, put it into the sigmoid function, and then checked it against the conditions, right? Then how will we and the model know whether it is misclassified or correctly classified???

@avimishra9253 Sure, bro!

1st question: Yes, we do it for all the points.

2nd: As I understand it, we are not concerned with whether a point is misclassified or correctly classified; the weights get adjusted for both, no matter what. This way, for every point, our model updates the weights according to that point's sigmoid value. Hope that clears it up!
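The two answers in this thread can be sketched as a small per-point training loop: z is computed for every point, and every point updates the weights, whether it is classified correctly or not. This is a minimal sketch assuming the sigmoid update rule discussed in the comments, w ← w + η(y - σ(z))x, with random initial weights and a tiny made-up dataset. It is not the code linked in the video description, and the names `train` and `predict` are hypothetical.

```python
import math
import random

def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def train(X, y, lr=0.05, epochs=2000, seed=0):
    """Per-point updates w <- w + lr * (y_i - sigmoid(w . x_i)) * x_i.

    Each x_i gets a leading 1 so that w[0] acts as the bias w0.
    """
    rng = random.Random(seed)
    w = [rng.uniform(-1, 1) for _ in range(len(X[0]) + 1)]  # random start: w0, w1, w2, ...
    for _ in range(epochs):
        for xi, yi in zip(X, y):
            x = [1.0] + list(xi)
            z = sum(wj * xj for wj, xj in zip(w, x))   # z is computed for every point
            error = yi - sigmoid(z)                     # nonzero even for correct points
            w = [wj + lr * error * xj for wj, xj in zip(w, x)]
    return w

def predict(w, xi):
    z = w[0] + sum(wj * xj for wj, xj in zip(w[1:], xi))
    return 1 if sigmoid(z) >= 0.5 else 0

# Tiny, linearly separable toy dataset (made up for illustration).
X = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.0), (5.0, 5.0), (6.0, 4.5), (5.5, 6.0)]
y = [0, 0, 0, 1, 1, 1]

w = train(X, y)
print("weights:", [round(wj, 3) for wj in w])
print("predictions:", [predict(w, xi) for xi in X])
```

Whether a point counts as misclassified is only checked at the end, through `predict`; during training, every point contributes an update scaled by y - sigmoid(z).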

27:34