Hashing Algorithms and Security - Computerphile


- Published 8 Feb 2025

- Audible free book: www.audible.com...

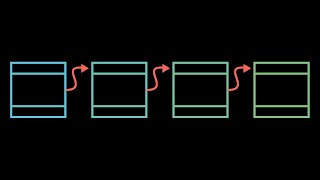

Hashing Algorithms are used to ensure file authenticity, but how secure are they and why do they keep changing? Tom Scott hashes it out.

More from Tom Scott: / enyay and / tomscott

/ computerphile

/ computer_phile

This video was filmed and edited by Sean Riley.

Pigeon Sound Effects courtesy of www.freesfx.co.uk/

Computerphile is a sister project to Brady Haran's Numberphile. See the full list of Brady's video projects at: bit.ly/bradycha...

It'll take you an hour to explain? Great! Who else wants a 1h computerphile special with Tom Scott? :D

Sounds like a great idea!

3:41 That's an… interesting rocket.

I love this guy. he's like the James Grime of programming. he's just so animated and passionate about what he's talking about, all the time, it's really cool.

Damn.... someone who truly breaks things down in the best way one can understand. Thank you tons! 9 yrs later LOL.

My wife loves Numberphile but often doesn't really understand all the content in a video. She really particularly likes James Grime.

Now I know how she feels! I don't understand much of what Tom Scott says, but he's just so utterly watchable and engaging!

he's my personal hero, hands down the most entertaining guest on computerphile :)

Just realized the opening title card says "" and the end title says ""....

Someone clearly had a bit of fun drawing that cocket... er I mean rocket.

Tom Scott is the only reason I subscribe to this channel. He has so much enthusiasm that makes me want to know more about the subject he's explaining.

1:37 It's not always bad to have a very very fast hashing algorithm, it's actually very good to have a fast hashing algorithm when using it for the file checking stuff explained earlier.

The only time you don't want a fast hashing algorithm is when it deals with security, mainly hashing passwords.

Very clear, no bullshit introduction to skip. Right to the core. Thanks a lot.

These videos with Tom Scott are my favorite on computerphile. You should do more with him!

The download verification hash is usually to verify that the download was received without being corrupted during transmission, not really for security.

I was thinking the same thing. Every time I've seen the MD5 on a download I never thought it implied that it was secure.

That moon rocket looks very similar to.. uhh..

Such a passionate guy.

Kind of a shame this video was mostly an intro to the next episode, but I'll definitely be watching out for it!

"if you have 50 pigeons into 25 pigeon holes, you have to stuff 2 of the pigeons into 1 of the holes"

The first audio book I ever listened to, is still to come.

10 years later and he still looks the same

Sometimes websites deny a password reset since the new password is "too similar" to the old one. How do they know this if all they have is a hash?

well, then run!

If it's the same password, they can tell because the previous password hash will be the same as the current hash. If it's "too similar", it is possible that they have stored other information about the password (e.g. length), although most likely they have just stored it in a form that is convertible to plain text, which is really quite bad.
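
A minimal sketch of that point, assuming plain SHA-256 as a stand-in for whatever the site really uses (the helper name `h` and the sample passwords are made up for illustration): an exact repeat is easy to detect from the hash alone, while a near-miss produces an unrelated digest, so "too similar" cannot be judged from the hash.

```python
import hashlib

def h(password: str) -> str:
    """Illustrative only: unsalted SHA-256 is NOT a good way to store passwords."""
    return hashlib.sha256(password.encode()).hexdigest()

stored = h("correct horse")            # hash kept from the old password

# Exact reuse: the hashes are identical, so the site can spot it.
print(h("correct horse") == stored)    # True

# One extra character: the digest is completely different, so the
# hash alone says nothing about how similar the passwords are.
print(h("correct horse1") == stored)   # False
```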

Watching this video 9 years after it was uploaded: he was right, SHA-1 is broken now, but it's still incredibly helpful for getting a high-level view of the topic!

You can use hashes when you're distributing a file through various mirrors - you have some confidence that the version on your site is clean, but to ensure that the version on others' sites match your own, you use the hash to verify.

You are by far the best of the computerphiles. The sorting guy is good too, but wavey-hair is superb.

I would love to see a whole series about computer science, or security, by Tom Scott.

Tom is such a great teacher, I hope that's his job in real life because the world needs more teachers like him

Awesome video Tom! In a previous job I used to test slot game software, and for verification of the program we used multiple algorithms like MD5, SHA-1 and SHA-2 to ensure that what we approved in the lab is what gets used in the field.

The hash for file downloads is usually used by open source projects, where the executable may be mirrored by countless universities which the software author doesn't have control over. In such a case, it certainly is *not* trivial to compromise both locations.

+Skrapion However, executable hashes are easily manipulated because you can always pad the file with some extra bits that affect the hash but are never actually executed. So if you have a weak hash like MD5 then it's relatively easy (-ish) to make a malicious executable file with the same hash. Of course if you have a good, secure hash like SHA-2 (so far!) then the only way to figure out what bits to use for padding is to try it, check the hash, and keep trying until something matches, i.e., brute force. This takes an impractical amount of time to do, though, as long as the hashing algorithm isn't *too* fast (as Tom mentioned).

Of course this is a different argument. You're talking about whether you can trust the posted hash value to be correct or forged (which would be a problem no matter how secure your hash algorithm is). I'm talking about if the posted hash value is correct, but the hash algorithm itself is weak...

The only thing I'd say to your point is, how many people actually cross-check the posted hash against an independently run mirror/fork? If you do, then I'm sure you're in the minority. ;)

Watching at the end of 2024 and already having heard of SHA-256 used in so many places

Tom Scott for the win with my cyber security assignment

Including a hash with a file to be downloaded is a common practice when the file itself is hosted on various mirrors rather than on the main site. In this case, being able to retrieve the hash from the trusted site, and compare it to the binaries from the potentially untrusted mirrors allows one to be reasonably confident that the mirror didn't send you a modified version of the file.
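
The mirror check described above can be sketched roughly like this. The function names are hypothetical, and SHA-256 is an assumption; a real project publishes whichever algorithm it chooses.

```python
import hashlib

def sha256_of_file(path, chunk_size=1 << 20):
    """Hash a file in chunks so a large download need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def matches_published_hash(path, published_hex):
    """Compare the local file's hash with the hex string copied from the trusted site."""
    # Tolerate surrounding whitespace and upper-case hex in the pasted value.
    return sha256_of_file(path) == published_hex.strip().lower()
```

The idea is to take the file from any mirror, take `published_hex` from the author's own site, and refuse to install if `matches_published_hash` returns `False`.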

You explained it significantly better than my lecturer did

The point of publishing hash codes for downloads is still useful for the common case where the download comes from a 3rd party (mirror or torrent), and also to ensure integrity (no transmission errors in the downloaded file or storage media).

Or if you use a download manager to download from multiple sources, to ensure you got all your sources right.

Watching this in 9 years and what he said about SHA-1 is so funny.

LoL who made the rocket, I respect it..

"In a few years SHA-3 will be the standard." Yeah, still waiting.

3:51 how is the rocket appearing behind the moon? The rest of the moon is still there

My first experience with hashing was keying in programs on my Commodore 64 from magazines like Compute!'s Gazette, RUN, or Ahoy in the 1980s. They included a program that would start a wedge (a daemon, of sorts) to compute and display a checksum for each line of code entered. Each line of code printed in the magazines ended with a comment containing a checksum for that line. If the checksum appearing on the screen didn't match the one printed in the magazine, I'd know I entered the line of code incorrectly and I'd need to look for mistakes.
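
As a rough illustration of the per-line checksum idea, here is a toy version. This is an assumption for demonstration only: the actual magazine programs used their own algorithms, which are not reproduced here, and `line_checksum` is a made-up name.

```python
def line_checksum(line: str) -> int:
    """Toy checksum: sum of the character codes, modulo 256."""
    return sum(line.encode("ascii")) % 256

printed = '10 PRINT "HELLO"'   # the line as printed in the magazine
typed = '10 PRINT "HELLP"'     # the same line with one typo

# A single wrong character changes the checksum, so the typo is caught.
print(line_checksum(printed) == line_checksum(typed))   # False

# A plain sum cannot see swapped characters, which is one reason
# real schemes need to be a little smarter than this.
print(line_checksum("AB") == line_checksum("BA"))       # True
```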

That's how we did it back then, and WE LIKED IT! 😉

I bet most people here won't mind longer videos if stuff gets explained!

I love seeing Videos with Tom, he is just awesome and the topics are interesting.

1:34 Isn't that the first 6 digits of pi?

Using hashes to check what has been downloaded is not supposed to be a "security feature". It's meant to provide a simple check that the download itself went through without disruptions. In other words, that one actually received the whole file as intended and that the file wasn't broken due to a poor connection.

Length of the hash is how you get security, not making a slow hash function. In other words, you should always try to make the hash as fast as possible, but have at least *some* fixed overhead. This means a reverse or "dictionary" attack is stuck at brute force -- if a security system relies on the failure of a brute force attack due to the slowness of the hash function, the system should be considered weak.

The issue with MD5 and SHA0 is that they have mathematical weaknesses that reduce their security. In other words you can, in a sense, pre-match some of the hash bits before you start the dictionary attack, effectively reducing the length of the hash. The "rumors about SHA-1" are almost certainly to do with the relatedness of SHA0 and SHA-1. Probably the weaknesses discovered in SHA0 were generalized and expanded upon, then applied to SHA-1.

SHA256 is basically safe because it uses an oversized hash space (256 bits). It means all collision searches are supposed to take about 2^128 operations, which the sum total computing power of the earth has yet to even come close to doing. The idea is that even if SHA256 is significantly weakened (say, by 32 bits) there would still be enough space left over that it would still be basically impossible to break.

Longer hashes are always a little more inconvenient than shorter ones. This is why SHA1 existed. It only outputs 160 bits, which, assuming the SHA1 algorithm is not weakened in any way, requires about 2^80 operations to break, which is still too much in practical computing environments. The problem is that attacks which can reduce the number of bits by, say, 52 bits would be sufficient to reduce the problem space to 2^54, which some supercomputers may be able to tackle given enough time.

MD5, which only outputs 128 bits, was easily cracked when its output space was reduced below 100 bits, independently by a French team and a Chinese team in the early 2000s (the French team did not reduce the number of bits as much, but used much more computing power). Nowadays, it is easy to construct MD5 hash collisions using the Chinese team's approach.

The remedies to this are to use updated algorithms such as Keccak (aka SHA3) or to use more bits (like 224, or higher like SHA256 does).
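
The arithmetic in this comment can be sketched with the usual birthday-bound rule of thumb, where a generic collision search on an n-bit hash costs roughly 2^(n/2) hash evaluations. The function name and the "bits lost" parameter are illustrative assumptions, not a model of any specific attack.

```python
def collision_work_log2(output_bits: int, bits_lost: int = 0) -> float:
    """log2 of the approximate work for a generic collision search.

    bits_lost models a weakness that effectively shortens the hash.
    """
    return (output_bits - bits_lost) / 2

for name, bits in [("MD5", 128), ("SHA-1", 160), ("SHA-256", 256)]:
    print(f"{name}: about 2^{collision_work_log2(bits):.0f} hash evaluations")

# SHA-1 with an effective loss of 52 bits, as in the example above.
print(f"weakened SHA-1: about 2^{collision_work_log2(160, 52):.0f}")
```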

would you recommend SHA 512 over SHA 256 in order to avoid collision searches even if weaknesses in SHA 2 are found?

I think that the "rumors about SHA-1" have to do about that the NSA has built in backdoors

Here's a fabulous idea that will extend the life of MD5 and make security much, much harder to circumvent: Use TWO OR MORE hash algorithms and test against multiple signatures. It may be trivial to break one hashing algorithm, but the likelihood of being able to change a file in such a way that multiple hash algorithms still match is infinitesimal, and may even be zero.
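
A sketch of what checking against two signatures could look like, using Python's `hashlib`. The choice of MD5 plus SHA-256 and the function names are assumptions for illustration; whether this beats a single strong hash is exactly what the replies debate.

```python
import hashlib

def multi_digest(data: bytes, algorithms=("md5", "sha256")):
    """Return one hex digest per algorithm for the same data."""
    return {name: hashlib.new(name, data).hexdigest() for name in algorithms}

def matches_all(data: bytes, expected: dict) -> bool:
    """Accept the data only if every published digest matches."""
    actual = multi_digest(data, tuple(expected))
    return all(actual[name] == expected[name] for name in expected)

published = multi_digest(b"original file contents")
print(matches_all(b"original file contents", published))   # True
print(matches_all(b"tampered file contents", published))   # False
```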

MD5 is still used regularly to check file integrity. No one should expect anything but an assurance the file is intact, but if this is all you need to know, it works beautifully. Secondary systems should be in place to secure the file to be delivered anyway, and if you are checking for malicious modifications via a hash check client side after the file is delivered, you are doing it all wrong. However, it is very important to make sure the delivered file did not get corrupted, so MD5 in this case is perfect: it's fast and extremely reliable for this job.

Richard Smith None of what you said was really relevant to what he said.

ReturnOfTheBlack Sure it was, he is talking about extending the life of md5, however, it still has a long life ahead of it, just not relating to security.

Checking multiple simple hashes may, and usually does, take more time than checking one really secure, not yet breakable hash, such as SHA2 or SHA3. Therefore the best option is still to develop a very secure hash instead of using multiple ones.

using 2 hash algorithms would be worse. an example would be.

hash A has a collision with BOB and FRED both equalling 5.

So you can change BOB to FRED and no one will know.

If hash B has a collision with 5 and 19 both equalling rabbits, and on hash A JOHN and NICK both equal 19,

then you can replace BOB with FRED, JOHN or NICK and no one will know.

Also it will take twice as long, for a non exponential increase in security.

I remember with those download sites, there were some groups that actually did modify the file to make the CRC or later MD5 spell out something interesting in hex. Like the version number or the episode number of the series.

I thought they wrote the hash for 2 reasons: 1. to make sure the mirrors aren't tweaked, or 2. to avoid bit errors (where a 1 goes to 0 or vice versa) caused by physical factors. Doesn't happen too often nowadays though.

This was a very nice video..My first comment on any video on youtube !

Thank you Tom and Computerphile!

I always thought it was odd to check the file integrity by trusting the hash from the same site that you downloaded the file from.

I mean, if the site is compromised, why would I trust their "checksum"?

Definitely should bring up BCrypt during password hashing. Its "work factor" is a design feature to help with the speed concern mentioned in this video.

that is not true anymore - while bcrypt currently is the "safest" key derivation function, it has one major weakpoint: it has a very small memory footprint which allows it to be parallelized - but that is ok, the algorithm is almost 15 years old (and it is based on the 20 year old blowfish) - time for a replacement: scrypt is almost ready to go and solves the memory issue by creating a ridiculously large memory footprint

What exactly do you mean by that? A small memory imprint?

Corey Ogburn it means it uses very little amount of memory (RAM)

Corey Ogburn bcrypt is computed in-place and does not utilize any RAM (besides what the operating system or the process consumes, and that is very little) - scrypt on the other hand consumes lots of RAM, which makes it considerably slower than bcrypt because RAM is "slow" (even if it is GDDR5)

in practical use scrypt is around 2000 to 4000 times slower than bcrypt

Corey Ogburn it means you can't use ASICs to compute it, because RAM in ASICs is very expensive. If some algorithm has a current state that consists of only a few dozen bytes, it can be calculated very cheaply at very, very high speed.
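
For reference, Python's standard library exposes scrypt as `hashlib.scrypt` (it needs an OpenSSL build with scrypt support). A minimal sketch follows; the parameter values and helper names are assumptions chosen for illustration, not a recommendation from the video or this thread. With `n=2**14` and `r=8`, each call needs about 16 MiB of memory, which is the "large memory footprint" being discussed.

```python
import hashlib
import hmac
import os

def hash_password(password: str, *, n=2**14, r=8, p=1):
    """Derive a key with scrypt; returns (salt, key) to store together."""
    salt = os.urandom(16)  # fresh random salt per password
    key = hashlib.scrypt(password.encode(), salt=salt, n=n, r=r, p=p, dklen=32)
    return salt, key

def verify_password(password: str, salt: bytes, key: bytes, *, n=2**14, r=8, p=1):
    """Recompute the key with the stored salt and compare in constant time."""
    candidate = hashlib.scrypt(password.encode(), salt=salt, n=n, r=r, p=p, dklen=32)
    return hmac.compare_digest(candidate, key)
```

Raising `n` increases both the time and the memory each guess costs an attacker, at the same cost to the legitimate server per login.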

I love this channel! How did I not find it sooner?

3:45 I hope I'm not the only one who sees something completely different in this animation :D

This video was mentioned in a later video so I went looking for this one, but couldn't find it. Now it just appeared in my What To Watch list. Weird. Good video. I would like to see something more in-depth, perhaps by Numberphile.

actually, the idea of putting the hash file along side the original file is a very good idea, if you are aware of what you are doing.

1- People with such a level of security use multiple servers and mirrors, and you should really, really get the two files (the hash and the document or program) from different sources/paths. That is internet security 101.

2- Mostly the hash is used against man-in-the-middle attacks; hashes do little good if your server is compromised (that is not their intended use). Using hashes assumes the source is trusted and the channel is not.

A hash could be very useful, for example, if you are getting the file from a P2P network and you'd like to verify that you got the actual file, not a different one.

And passwords should be encrypted in the database, specifically encrypted with algorithms that have decryption algorithms with a key or salt and such. Hashing the password in the database (with any algorithm, secure or not) forces you to use non-secure authentication methods that expose the password at the client side.

I was hoping for exactly the details he said would take hours to explain. But then again maybe the mathematics of hashing would fit Numberphile better. Here's to hoping James will tackle this!

This explains that lecture I was sitting in on when I visited Cornell.

Okay it's been a while since I last had to code passwords into a database but back then md5 was still perfectly acceptable. It's good to know this is no longer the case and web scripting languages have adapted to provide the extra layer of security. So in PHP where I used to use md5() for passwords I shall now use password_hash()

Thanks :)

Why wasn't I subscribed to this channel before? Love the way you explain.

I'm very much looking forward to the next video, as I greatly disagree about password storing using hashes. Obviously not unsalted and not only one iteration of hashing, but still.

I guess you watched the video, but just in case. In here, he says that hashing algorithms should not be used to store passwords. The alternative I would have suggested when I posted this is exactly what he posted in the next video, or the standard HMAC using a unique salt per user as it's also known.

Almost there guys! Almost got that golden play button! 😀

I forget which game it was, but gaining access to its protected memory region required generating hash collisions until it overflowed. Once you got access it was just a function call to reset the stored hash with your injected code factored in.

Who is this Tom Scott and why is he so knowledgeable about these lightboxes and the language they speak?

Woo, thanks for this video. I've been wanting to learn more about this, and I've had trouble getting through some articles on this topic.

So, here's an analogy that I thought of: it's like the file is an engine and the hash is a photo of that engine. The hash won't work as an engine and you can't figure out how that engine works, but you will be able to see that the engine is the same as the one in the photo (probably).

This guy is great, can't wait for the followup!

I've been watching all the videos. Hope you talk about salting hashes in the future, thanks. Really loving these videos.

@Tom Scott - Actually MD5 HAS been broken with text documents. It's been done with carefully constructed PDFs that say different things and have the same hash. AFAIK it's based on some APPEND attacks on MD5 and the ability of PDFs to overlay stuff.

I love these videos, and I love this guy, Great explanations.

Been waiting for this one :) Great video Brady.

Brady's not actually the one filming and editing; Sean Riley is (description).

More of Tom! These are the best vids

SHA1 officially broken by Google today lol

This is really the kind of video I would love more from on Computerphile. :P (Maybe going a bit more into detail... just slightly but not as superficial as some of the other videos on this channel. - That's also why I like Tom Scott btw :p)

Also those "nostalgia" videos about the times, where most of us probably didn't even exist yet or knew what a computer is about are pretty neat. (Forgot the name of this nice older professor)

Just some feedback - That's all. Bye and thanks for your content, Brady

Tom Scott, YOURE AWESOME!

Great description of hashing.

I was always told that hashing passwords was the safest way to do it, because you never actually store the original password in the server, so it can't be stolen.

I suspect he is referring to using just a hash without adding a salt/nonce.

If you store just hash(password) then you can look up common passwords in tables, but if you generate a random string, and store {string,hash(string + password)} then the same password would have a unique stored version depending on the random prefix.

Note that it should be a prefix because under certain conditions suffixes can be broken more efficiently.
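
The {string, hash(string + password)} scheme can be sketched as follows. SHA-256 and the helper names are assumptions for illustration; the sketch shows only the salting step, and other comments in this thread argue for a deliberately slow function such as bcrypt or scrypt on top of it.

```python
import hashlib
import os

def make_record(password: str):
    """Return (salt, digest), where digest = SHA-256(salt + password)."""
    salt = os.urandom(16)  # random prefix, unique per stored password
    digest = hashlib.sha256(salt + password.encode()).hexdigest()
    return salt, digest

def check(password: str, salt: bytes, digest: str) -> bool:
    """Recompute the salted hash with the stored salt and compare."""
    return hashlib.sha256(salt + password.encode()).hexdigest() == digest

# The same password stored twice gives two different records,
# so a precomputed table of common-password hashes no longer matches.
record_a = make_record("password1")
record_b = make_record("password1")
print(record_a[1] != record_b[1])
print(check("password1", *record_a))
```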

Yeah, the problem is, if people do get hold of the hashed passwords, depending on what hashing algorithm was used, they could easily reverse them. An example is the MD5 algorithm: if you used it, someone could easily look up your hashed passwords with just Google.

Yeah but use salts preferably bcrypt

Pretty much yes, the problem we have today is hackers have 'hashed' every combination you can think of, so they already know all the combinations. Websites can combat this with what is called a salt. Simply put, they add a word in a fixed position to every password to increase strength. Example: 'password1' => j5k32b5k; with the salt it would be 'facebook:password1' => jkb43g5. This was just an example. Salts, like passwords, can be completely unique. (A good rule of thumb: make your passwords a combination of words, like 'batteryhorsestaple'; it's easy to remember, hard to guess, and will take hackers years.)

***** md5 is no longer safe for passwords. Use SHA

/computerphile ! Finally! YES!!

If I make a hash algorithm in PHP or JS, how do I hide that algorithm securely from users? I could make a kind of secure hash algorithm, but that is useless if everyone can just read the instructions

hash codes on websites offering a download are also used to make sure the download went well and nothing got corrupted (or involuntarily changed by a machine error or noise)

good video, can't wait for the next one

Please let me explain something: download websites don't use MD5 hash verification to guarantee that the file hasn't been changed by a hacker on their server. They know that if a hacker could do that, he could change the hash as well! The hash is only used to check that no network error flipped or deleted some bits while downloading, corrupting the file. This is especially important and widely used when downloading OS images (those are large and take time to download, and are thus vulnerable to network corruption) or when downloading files using the Torrent protocol, which downloads the file as chunks that the client then glues together again, so it's a check that the network or the client didn't miss any piece of the file.
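
The chunk-by-chunk check mentioned for torrents can be sketched like this. It is a simplified illustration with made-up function names, a tiny piece size, and SHA-256 chosen arbitrarily; it does not reproduce the actual BitTorrent file format.

```python
import hashlib

def piece_hashes(data: bytes, piece_size: int = 4):
    """Split data into fixed-size pieces and hash each piece separately."""
    return [
        hashlib.sha256(data[i:i + piece_size]).digest()
        for i in range(0, len(data), piece_size)
    ]

def corrupted_pieces(received: bytes, expected_hashes, piece_size: int = 4):
    """Return the indexes of pieces whose hash differs from the expected one.

    Assumes the received data has the expected length.
    """
    actual = piece_hashes(received, piece_size)
    return [i for i, (a, e) in enumerate(zip(actual, expected_hashes)) if a != e]

expected = piece_hashes(b"abcdefghij")
print(corrupted_pieces(b"abcdXfghij", expected))   # [1]
```

Hashing per piece means only the damaged piece has to be fetched again, rather than the whole file.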

Isn't the last point about verifying file downloads a little off?

The point isn't that the file downloaded is verified to be the same file as the one put on that site. The point is that a file downloaded from 3rd parties (mirror sites etc) is checked to be the same file as a central master copy that the author has certified.

That rocket looks a bit sus eh

8:05 Red has won three times and yellow has won two times.

Really interesting stuff! Great explanation!

I think the intent of the MD5 hash under a software program was to be used to validate the download was successful, especially back when we were all on dialup.

2:07 I would not mind listening to him for hours

The software or file download that has the hash along with it is actually secure. Provided they sign the hash. That is they run RSA on the hash using the Private key of the company. So, nobody can change the hash. If they should change the hash, they need the private key of the company.

Or share the hash on a company owned HTTPS server while file download could be provided using plain http mirrors.

If the file format supports signing, that is better. Like signing an exe.

***** Agreed. Using HTTPS is a simpler idea, given that it's popular and has many easily available implementations.

Very nicely explained... thanks a lot

This guy is awesome

no, he's not :) There are much smarter people explaining stuff on Computerphile

vyli1 I disagree. There may be smarter people talking on Computerphile, but the way he talks is much more engaging than most of the speakers that have appeared on this channel. Anyway, whether you find him awesome or not is your opinion.

Yeah man love to see a video on hashing

this guy is amazing! thanks computerphile!

Uhm,... Nice spaceship design lol.

Thanks so much for this video! Really enjoyed it and made me better at my job.

Damn Tom, I'm amazed by your knowledge in every video of yours I watch here and on your personal channel. Would love it if you could recommend some good books/resources other than this and your personal channel.

I use hash algorithms in image processing to compare images.

If images return the same hash I know they are equal.

Hashes can be very helpful!

I know this is super old but I always thought it was funny that Kali offered the hash for the exact same reason that you mentioned.

Excellent video!

Surely it is commonplace for people to be storing passwords with an additional, random salt nowadays, which, combined with the hashing method (sha1, sha2, md5 etc.), makes it a lot more secure than just hashing a password directly?

Yes, it's all about buying time for worst case scenario, everyone is vulnerable (as proved by the recent events at major corporations)

***** Best case scenario you can hash it a few times that it would take years to brute force it.

***** if done properly, and the password is reasonably secure, it should take years to brute force only one password.

If your database has been compromised and you've let customers/end users know, a year SHOULD be plenty of time... the biggest weakness in any system is the end user.

Yes, a salt should be used, but only in combination with the right hash algorithms (not MD5 or the SHA family)!

A salt prevents the hash being broken using a precomputed hash-password dictionary (a.k.a. "Rainbow Tables"). But on modern hardware, computing hashes such as MD5 or SHA1 directly is often faster than looking them up in a giant file on disk. Attackers probably won't bother using rainbow tables, and salts will therefore not slow them down.

The key is to not use MD5 or SHA. These hash algorithms are designed to be very fast for message authentication, but they are not suitable for hashing passwords!

When hashing passwords you need a very slow and computationally intensive algorithm (such as scrypt or bcrypt), such that it becomes hard to attack using brute force. A salt is then used to mitigate attacks using precomputed hash tables.
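
To illustrate the "slow on purpose" idea with only the Python standard library, here is a sketch using PBKDF2 (`hashlib.pbkdf2_hmac`). PBKDF2 is swapped in here as an assumption because bcrypt is not in the standard library; the iteration count and helper name are illustrative, not a recommendation.

```python
import hashlib
import os

def stretch(password: str, salt: bytes, iterations: int = 200_000) -> bytes:
    """Derive a key by iterating HMAC-SHA-256 many times (PBKDF2)."""
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, iterations)

salt = os.urandom(16)          # stored next to the derived key
key = stretch("password1", salt)
print(len(key))                # 32 bytes for SHA-256
```

Each guess costs the attacker the same number of iterations, so raising `iterations` is the knob that plays the role of a work factor, while the salt still handles the precomputed-table problem.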

Just wanted to say that CyanogenMod (and many other Android-flashables) use md5 for their downloads. It works very well for me and has nothing to do with security.

Sometimes, I get a file, download it onto my pc, then try to transfer it to my phone. Then when I flash the file, it doesn't work. Some transfer process I used changed the file along the way. I really don't know why. Maybe it's because many of them are .zip's, and perhaps they want to use a different method of compression or something.

When the hash code is shared next to the download, I can verify the file's fidelity by the time it reaches my phone.

6:46 I think the hash is not used to verify that the file wasn't manipulated, but just to verify that the file is not damaged.

I would like to listen to that one hour avalanche effect explanation :)

The permission to go to the Moon made me pause the video for a moment to check the comments

Brady, Sean and Co I can't help but feel deep sorrow with this google+ crap and your many, many different channels.

I do hope you guys can make more videos soon but I can understand it will take you guys a while to reorganize.

God speed guys.