Fixing overconfidence in dynamic neural networks (WACV 2024)


- Published 2 Jan 2024

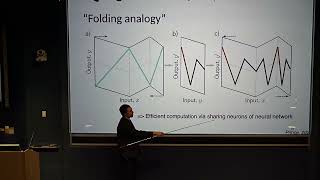

- In this WACV 2024 conference presentation, we explore a novel approach to improving dynamic neural networks through post-hoc uncertainty quantification. Dynamic neural networks adapt their computational cost to the complexity of each input, which offers one answer to the growing size of deep learning models. However, telling complex inputs apart from simple ones remains difficult, because the uncertainty estimates of these models are unreliable and tend to be overconfident.
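The following is a minimal sketch of the early-exit inference loop that dynamic networks of this kind commonly use. It is not the authors' code. It assumes that confidence is measured as the maximum softmax probability and that each exit is a hypothetical callable returning a logit vector. The loop shows why overconfidence matters: an exit that reports high confidence on a hard input stops computation too early.

```python
import numpy as np

def softmax(logits):
    # Subtract the max for numerical stability before exponentiating.
    z = logits - np.max(logits)
    e = np.exp(z)
    return e / e.sum()

def early_exit_predict(exit_heads, x, threshold=0.9):
    """Evaluate exits in order and stop at the first one whose top-class
    probability reaches `threshold`.

    `exit_heads` is a hypothetical list of callables, each mapping the input
    to a logit vector (in a real network, later exits reuse earlier features).
    Returns (predicted class, index of the exit that produced it).
    """
    probs = None
    for k, head in enumerate(exit_heads):
        probs = softmax(head(x))
        if probs.max() >= threshold:
            return int(np.argmax(probs)), k  # confident enough: skip later exits
    # No exit reached the threshold, so use the final exit's prediction.
    return int(np.argmax(probs)), len(exit_heads) - 1
```

For example, with `heads = [lambda x: np.array([2.0, 0.0, 0.0]), lambda x: np.array([0.0, 5.0, 0.0])]`, the first exit's top probability is about 0.79. A threshold of 0.9 therefore continues to the second exit, while a threshold of 0.7 stops at the first.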

Our research presents a method for efficient uncertainty quantification in dynamic neural networks that accounts for both aleatoric (data-related) and epistemic (model-related) uncertainty. The approach improves predictive performance and also supports better-informed decisions about how much computation to spend on each input.
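The sketch below shows one common way to separate the two kinds of uncertainty: the entropy / mutual-information decomposition. It assumes we can draw several class-probability vectors for the same input, for example by sampling weights from an approximate posterior. This is shown for illustration only and is not necessarily the exact estimator used in the paper.

```python
import numpy as np

def entropy(p, axis=-1):
    # Shannon entropy in nats; clip to avoid log(0).
    p = np.clip(p, 1e-12, 1.0)
    return -np.sum(p * np.log(p), axis=axis)

def decompose_uncertainty(prob_samples):
    """Split predictive uncertainty for a single input.

    prob_samples: array of shape (S, C) with S sampled class-probability
    vectors over C classes. Returns (total, aleatoric, epistemic) in nats.
    """
    prob_samples = np.asarray(prob_samples, dtype=float)
    mean_p = prob_samples.mean(axis=0)
    total = entropy(mean_p)                   # entropy of the averaged prediction
    aleatoric = entropy(prob_samples).mean()  # average entropy of each sample
    epistemic = total - aleatoric             # mutual information, >= 0 up to rounding
    return total, aleatoric, epistemic
```

Confident samples that disagree (`[[1, 0], [0, 1]]`) yield almost purely epistemic uncertainty. Samples that agree on a 50/50 split yield purely aleatoric uncertainty.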

The presentation includes experimental results on datasets such as CIFAR-100, ImageNet, and Caltech-256, which show gains in accuracy, better capture of uncertainty, and lower calibration error.
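Calibration error is often reported as the expected calibration error (ECE). This metric bins predictions by confidence and averages the gap between accuracy and mean confidence in each bin, with each bin weighted by its share of samples. The sketch below computes this quantity. Its 15 equal-width bins are a common convention and an assumption here, not necessarily the paper's setting.

```python
import numpy as np

def expected_calibration_error(confidences, predictions, labels, n_bins=15):
    """Binned ECE: sum over bins of (fraction of samples in bin) *
    |accuracy in bin - mean confidence in bin|."""
    confidences = np.asarray(confidences, dtype=float)
    correct = (np.asarray(predictions) == np.asarray(labels)).astype(float)
    edges = np.linspace(0.0, 1.0, n_bins + 1)
    ece = 0.0
    for lo, hi in zip(edges[:-1], edges[1:]):
        # Bins are half-open (lo, hi]; a max-softmax confidence is always > 0.
        in_bin = (confidences > lo) & (confidences <= hi)
        if in_bin.any():
            gap = abs(correct[in_bin].mean() - confidences[in_bin].mean())
            ece += in_bin.mean() * gap
    return ece
```

Suppose a model predicts with confidence 0.9 on four inputs but is right on only three. All four fall into one bin with accuracy 0.75, so the ECE is 0.15, reflecting overconfidence.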

See the original publication:

Lassi Meronen, Martin Trapp, Andrea Pilzer, Le Yang, and Arno Solin (2024). Fixing overconfidence in dynamic neural networks. In IEEE Winter Conference on Applications of Computer Vision (WACV).

arXiv preprint: arxiv.org/abs/2302.06359

Code is available on GitHub:

github.com/AaltoML/calibrated...