Production Inference Deployment with PyTorch


- Published 25 Aug 2024

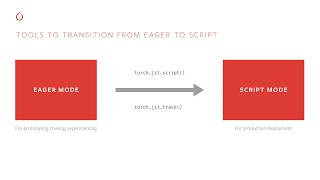

- After you've built and trained a PyTorch machine learning model, the next step is to deploy it somewhere it can be used to run inference on new input. This video covers the fundamentals of PyTorch production deployment, including: setting your model to evaluation mode; TorchScript, PyTorch's optimized model representation format; using PyTorch's C++ front end to deploy without interpreted-language overhead; and TorchServe, PyTorch's solution for scaled deployment of ML inference services.
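The evaluation-mode step can be illustrated with a minimal sketch, assuming a small toy model (`TinyNet` is hypothetical, not the model from the video). Calling `model.eval()` switches off Dropout and makes BatchNorm use its running statistics, so repeated calls on the same input give identical results; wrapping the calls in `torch.inference_mode()` additionally disables gradient tracking.

```python
import torch
import torch.nn as nn


# Hypothetical toy model: Dropout and BatchNorm behave differently
# in training mode and in evaluation mode.
class TinyNet(nn.Module):
    def __init__(self) -> None:
        super().__init__()
        self.fc = nn.Linear(4, 8)
        self.bn = nn.BatchNorm1d(8)
        self.drop = nn.Dropout(p=0.5)
        self.out = nn.Linear(8, 2)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        x = torch.relu(self.bn(self.fc(x)))
        return self.out(self.drop(x))


torch.manual_seed(0)
model = TinyNet()
x = torch.randn(3, 4)

# eval() recursively sets training=False on every submodule:
# Dropout becomes a no-op and BatchNorm uses running mean/variance.
model.eval()

# inference_mode() turns off autograd bookkeeping for these calls.
with torch.inference_mode():
    y1 = model(x)
    y2 = model(x)

print(model.training)       # False
print(torch.equal(y1, y2))  # True
print(y1.requires_grad)     # False
```

Forgetting `eval()` is a common source of nondeterministic predictions in deployment, because Dropout keeps randomly zeroing activations.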

0:00 - Intro

0:30 - Evaluation Mode

2:25 - TorchScript

5:34 - TorchScript and C++

7:37 - TorchServe

8:39 - TorchServe example

Very informative! Thank you!

At 5:17, shouldn't we save scripted_model (scripted_model.save('my_scripted_model.pt'))?

Exactly what I wanted to ask. I think you are right.
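For reference, a file that `torch.jit.load` can read is produced by saving the scripted module, via `scripted_model.save(path)` or `torch.jit.save(scripted_model, path)`. Below is a minimal sketch of that round trip, assuming a hypothetical toy model (`MyModel` and the temporary file path are illustrative, not taken from the video):

```python
import os
import tempfile

import torch
import torch.nn as nn


# Hypothetical stand-in for the model being deployed.
class MyModel(nn.Module):
    def __init__(self) -> None:
        super().__init__()
        self.linear = nn.Linear(4, 2)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # Data-dependent control flow: torch.jit.script keeps both branches,
        # whereas torch.jit.trace would record only the branch it saw.
        if x.sum() > 0:
            return self.linear(x)
        return -self.linear(x)


torch.manual_seed(0)
model = MyModel().eval()
scripted_model = torch.jit.script(model)

# Save the *scripted* module: the archive holds both the code and the weights.
path = os.path.join(tempfile.mkdtemp(), "my_scripted_model.pt")
scripted_model.save(path)

# Load it back; the MyModel class definition is not needed for this step.
loaded = torch.jit.load(path)

x = torch.randn(3, 4)
with torch.no_grad():
    same = torch.allclose(loaded(x), model(x))
    # -x.abs() has a negative sum, so this exercises the other branch.
    same_neg = torch.allclose(loaded(-x.abs()), model(-x.abs()))
print(same, same_neg)
```

Because the saved archive is self-describing, the same file can also be loaded from the C++ front end without a Python interpreter.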

TorchServe is definitely a good option for deployment :))

The audio is quite low.

The issue is with your hardware. Use a Chrome audio plug-in or an external audio device with good amplification.