PyTorch Tutorial 03 - Gradient Calculation With Autograd

- Published 19 May 2024

- New Tutorial series about Deep Learning with PyTorch!

⭐ Check out Tabnine, the FREE AI-powered code completion tool I use to help me code faster: www.tabnine.com/?... *

In this part we learn how to calculate gradients using the autograd package in PyTorch.

This tutorial contains the following topics:

- requires_grad attribute for Tensors

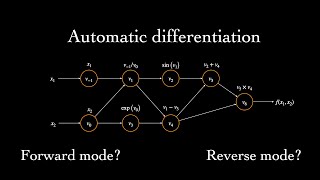

- Computational graph

- Backpropagation (brief explanation)

- How to stop autograd from tracking history

- How to zero (empty) gradients
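The first three topics can be sketched in a few lines. This is a minimal illustration, not the code from the video: the tensor values and the names `x`, `y`, `z` are chosen here for the example.

```python
import torch

# A leaf tensor that autograd should track (values chosen for illustration).
x = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)

# Every operation on x adds a node to the computational graph.
y = x + 2                   # y.grad_fn is an addition node
z = (y * y * 2).mean()      # scalar output, z = mean(2 * (x + 2)^2)

# Backpropagation: walks the graph backwards and stores dz/dx in x.grad.
z.backward()

# dz/dx_i = (1/3) * 4 * (x_i + 2)
print(x.grad)
```

Because `z` is a scalar, `backward()` needs no argument here.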

Part 03: Gradient Calculation With Autograd

📚 Get my FREE NumPy Handbook:

www.python-engineer.com/numpy...

📓 Notebooks available on Patreon:

/ patrickloeber

⭐ Join Our Discord : / discord

If you enjoyed this video, please subscribe to the channel!

Official website:

pytorch.org/

Part 01:

• PyTorch Tutorial 01 - ...

You can find me here:

Website: www.python-engineer.com

Twitter: / patloeber

GitHub: github.com/patrickloeber

#Python #DeepLearning #Pytorch

----------------------------------------------------------------------------------------------------------

* This is a sponsored link. By clicking on it you will not have any additional costs, instead you will support me and my project. Thank you so much for the support! 🙏

I appreciate that you followed the official tutorial from the Pytorch documentation. Also your comments make things much clearer

Thanks! Glad you like it

I appreciate your efforts, you readily explained every detail. Thanks very much.

🙏

This is great work. I really like the detailed explanation using simple practical examples. Please continue doing this exploring the various options in pytorch. Happy holidays

Thank you! Glad you like it. I have more videos planned for the next few days :) Happy holidays!

This video is very clear in explaining things. Thank you so much sir! Keep up the good work pls!

This video is super helpful! Explanations are very understandable. Thank you so much!!🤩👍🙏

what an amazing teacher you are

This tutorial is more than great! Thank you!

This video is extremely useful. Thank you!

Thank you! Your video helped a lot with my undergraduate final project!

that's nice to hear :)

Great work Sir, and Thank you!

Excellent video. Thanks!

This tutorial is brilliant. It is super friendly to people who are new to Pytorch!

glad you like it!

very nice, congrats!

Great Work Sir.

Very well done. An excellent video!

Thanks !

Quite love these courses! Great thanks

Thank you :)

Fantastic work!

A slight recommendation for improving this: if you use the same naming scheme in the Jacobian and in the code (l vs. z), the chain rule becomes easier to follow!

Great tutorial! Thanks for this material!

thanks :)

Really amazing and very well explained ! Thank You .

Btw what IDE are you using ? looks so cool and handy , love the output option below .

Visual Studio Code

Exactly :)

This video helps me greatly. I like your language speed since English is not my mother tongue. Thank you a lot.

Nice, glad you like it

thankyou for your efforts

Great work!

All in one pytorch.. yeahhh.. fantastic.. thanks a ton🎉🎊🎊

glad you like it!

Very clear, thank you very much

Thank you very much for your tutorial. However, I did not fully understand some details, like the variable v or the use of the optimizer.

very nice tutorial. thank you!

thanks :)

awesome! Thank you

great work thank you

Just onto the 4th lecture in the PyTorch series. I don't know if it's a complete series on PyTorch, but whatever is there is presented nicely.

nice, yes it's a complete beginner series

@ 8:16 do you have a simple real life "example" why we have to use the v for the right size and why it wouldn't work without it? I know silly question, but I don't really grasp the concept behind it..

I just discovered your channel and it's really good! One question: I don't totally understand why we should use .detach() or no_grad() when updating weights. Are we creating a new graph or something like that? What exactly does "preventing gradient tracking" mean? Hope you can help me with that. Keep up the good job (:

You should use this, for example, after training when you evaluate your model. Otherwise PyTorch records every operation you do with your tensor so that it can compute gradients later. This costs time and memory, and after training we no longer need backpropagation. Disabling tracking reduces memory usage and speeds up computations.
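For the weight-update part of the question, here is a hedged sketch with a made-up loss and a hypothetical learning rate of 0.1. The update is wrapped in `torch.no_grad()` so that it changes the values of `weights` without being recorded as one more operation in the graph.

```python
import torch

weights = torch.ones(4, requires_grad=True)

# Forward and backward pass: these operations are tracked.
loss = (weights * 3).sum()
loss.backward()                      # weights.grad is now 3 for every entry

# The update itself should not become part of the graph.
with torch.no_grad():
    weights -= 0.1 * weights.grad    # in-place update, not recorded

weights.grad.zero_()                 # reset before the next backward pass

# Evaluation after training: results computed here carry no graph.
with torch.no_grad():
    prediction = weights * 2

print(weights.requires_grad, prediction.requires_grad)
```

`weights` still requires gradients afterwards, so the next forward pass is tracked as usual; only the operations inside the `with` blocks are skipped.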

Great PyTorch Tutorial videos! May I know what is the VS Code extension you use to autocomplete the PyTorch line?

In this video it was only the built-in autocompletion through the official Python extension

thank you

When do you learn the why of things ? why am I making a gradient ? when would I use it ? I feel like these things are often explained in DS videos/classes

Amazing

An interesting thing I realized: even after setting z = z.mean(), which changes the grad_fn of z from MulBackward to MeanBackward (so z doesn't have MulBackward anymore), it is still able to track the gradient.

If requires_grad=True, any operation that we do with z tracks the gradient.
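A small sketch of why this works, with names chosen here for illustration: reassigning `z` only rebinds the Python name. The new mean node keeps a link to the earlier multiplication node, which can be inspected through `grad_fn.next_functions`.

```python
import torch

x = torch.randn(3, requires_grad=True)

z = x * 2
z = z.mean()    # the name z now refers to a new tensor

# The newest node in the graph belongs to the mean operation.
print(z.grad_fn.name())

# Its parent is the multiplication node from the previous line.
parent = z.grad_fn.next_functions[0][0]
print(parent.name())

# backward() follows this chain all the way back to x.
z.backward()
print(x.grad)   # d/dx mean(2 * x) = 2 / 3 for each entry
```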

Thank you!!

thanks for watching

Thanks for the video. for the tensor v, are the values of any importance or its only the size that is of importance?

Hi, good question! Yes, this can be a bit confusing, so here are some links from the PyTorch forum which might help:

discuss.pytorch.org/t/how-to-use-torch-autograd-backward-when-variables-are-non-scalar/4191/4

discuss.pytorch.org/t/clarification-using-backward-on-non-scalars/1059

@@patloeber I can't find anyone describing how to use it there. Are you able to give a quick summary? Help would be much appreciated, as I can't find anything, including on the values 0.1, 1, 0.001

@@sebimoe Apparently he doesn't know either....Typical

In my understanding, only the size will matter. So you can write v=torch.randn(3). Then pass v in backward function.

Did anyone find a good explanation for v ? I'm also bit confused here

Really good tutorial, but I wanna know what the app which you use to draw is.

I used an Ipad for this (some random notes app)

@ 8:00 what is the difference between z.backward() vs z.backward(v)?

z.backward() ==> is calculating dz/dx

is z.backward(v) ==> is calculating dz/dv ?

Also, how do we decide the value for v?

z.backward(v) calculates dz/dx*v

@@alanzhu7538 You got any idea now?😂

@@gautame You mean v * (dz/dx)? Or dz/(dx * v)?

@@peixinwu4631 v * (dz/dx)

why can you just specify v as [0.1, 1.0, 0.001], why not some other numbers?

Did you find out?

It doesn't matter. You just need to have the same shape

@5:46 in the image it is J^T . V
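The replies above can be checked with a short sketch. The function below is an assumption chosen so that the Jacobian is easy to write down by hand; it is not necessarily the exact code from the video. For a non-scalar `z`, `z.backward(v)` stores the vector-Jacobian product J^T · v in `x.grad`, so `v` must match the shape of `z`, and in this example its values also scale the result.

```python
import torch

x = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)
z = (x + 2) ** 2 * 2           # vector output, z_i = 2 * (x_i + 2)^2

v = torch.tensor([0.1, 1.0, 0.001])
z.backward(v)                  # stores J^T v in x.grad, not dz/dv

# Elementwise function, so J is diagonal with entries 4 * (x_i + 2).
expected = v * 4 * (x.detach() + 2)
print(x.grad, expected)
grad_v = x.grad.clone()

# With v = ones, the result equals the gradient of z.sum().
x.grad.zero_()
z2 = (x + 2) ** 2 * 2
z2.backward(torch.ones(3))
grad_ones = x.grad.clone()
print(grad_ones)
```

A different `v` of the same shape runs without error but gives a different `x.grad`, since each entry of `v` weights the contribution of one output element.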

pretty good

Thanks!

I had a german professor who also pronounced "value" as "ualue". Can you explain why do you sometimes pronounce it that way? I am very intrigued.

P.S. you have the best pytorch series on youtube

If i want to play with it, suppose that we have y=3x, and we should get the answer of 3 for the gradient. So, how can i do that in pytorch?
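One way to try this, as a minimal sketch (the input value 2.0 is arbitrary):

```python
import torch

x = torch.tensor(2.0, requires_grad=True)
y = 3 * x

# y is a scalar, so backward() needs no argument.
y.backward()

print(x.grad)   # dy/dx = 3, independent of the value of x
```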

At 9:30, isn’t another way to do x.volatile = False ?

I don't get why you need the 3 methods presented at 8:06 for preventing the gradient. Can one not simply put requires_grad=False in the x tensor?
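Creating the tensor with `requires_grad=False` does work when gradients are never needed. The three options matter for a tensor that already requires gradients, such as a trained weight. A short sketch with illustrative names:

```python
import torch

x = torch.randn(3, requires_grad=True)

# Option 1: detach() returns a new tensor with the same values
# that is cut off from the graph; x itself is unchanged.
y = x.detach()

# Option 2: inside no_grad(), operations on x are not recorded.
with torch.no_grad():
    w = x + 2

# Option 3: requires_grad_(False) switches tracking off on x itself, in place.
x.requires_grad_(False)

print(y.requires_grad, w.requires_grad, x.requires_grad)
```

Options 1 and 2 leave `x` trainable, which is usually what is wanted during a training loop; option 3 changes `x` permanently until the flag is set back.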

how did you decide values in vector v?

The gradient at 5:46 is the product of the Jacobian matrix and a direction vector. When the function whose gradient you want has a one-dimensional output, there is no need to choose a direction for the derivative since it is unique; that's why backward() accepts no arguments for the mean. On the other hand, for a multidimensional output such as y = x + 2 (y is a vector), you have to specify the direction in which you want to take your gradient. That is where the vector v comes in. He chose its components arbitrarily, just to show that the function requires a vector to define the directional derivative, but in statistical learning one usually chooses the direction in which the gradient is steepest.

Getting this error,

RuntimeError: Trying to backward through the graph a second time (or directly access saved tensors after they have already been freed). Saved intermediate values of the graph are freed when you call .backward() or autograd.grad(). Specify retain_graph=True if you need to backward through the graph a second time or if you need to access saved tensors after calling backward.
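A hedged sketch of how this error typically arises and how `retain_graph=True` avoids it. The function is made up for the example; the commenter's actual code is not shown.

```python
import torch

x = torch.tensor([1.0, 2.0], requires_grad=True)
z = (x * x).sum()

# Keep the saved intermediate values so the graph can be used again.
z.backward(retain_graph=True)

# Second pass over the same graph works; gradients accumulate in x.grad.
z.backward()
print(x.grad)   # 2x + 2x

# The second call freed the graph, so a third call raises the error.
raised = False
try:
    z.backward()
except RuntimeError:
    raised = True
print(raised)
```

In a training loop the more common fix is to recompute the forward pass in every iteration, so that each `backward()` call gets a fresh graph.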

That’s good, ja? :)

This is so f**king useful, thank you sooo much!!

I can't understand why the gradient must be added to the previous gradient (gradient += gradient) in each epoch. Where can I find a consistent mathematical formula? Can you explain it to me once more?

I'm not exactly sure what you mean. Can you point me to the time in the video where I show this?
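If the question refers to gradient accumulation, the following sketch may help. It assumes a dummy loss and three epochs: `backward()` adds the new gradient to whatever is already stored in `.grad`, so the values grow unless they are zeroed after each step.

```python
import torch

weights = torch.ones(4, requires_grad=True)

# Without zeroing: each backward() adds to the stored gradient.
accumulated = []
for epoch in range(3):
    (weights * 3).sum().backward()
    accumulated.append(weights.grad.clone())

weights.grad.zero_()

# With zeroing: every epoch starts from an empty gradient.
reset = []
for epoch in range(3):
    (weights * 3).sum().backward()
    reset.append(weights.grad.clone())
    weights.grad.zero_()

print([g[0].item() for g in accumulated])
print([g[0].item() for g in reset])
```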

in the end...it became heavy

Hey, could you explain what tracking the gradient means? I mean, why is it an issue?

Tracking each operation is necessary for the backpropagation. But it is expensive, so after the training we should disable it

When I run the following code, I encountered an error. Could you help me? Thank you very much!

weights = torch.ones(4, requires_grad=True)

optimizer = torch.optim.SGD(weights, lr=0.01)

The error is

Traceback (most recent call last):

File "D:/1pytorch-tutorial/my_try/learning-PythonEngineer/learning.py", line 113, in

optimizer = torch.optim.SGD(weights, lr=0.01)

File "D:\Anaconda3\lib\site-packages\torch\optim\sgd.py", line 68, in __init__

super(SGD, self).__init__(params, defaults)

File "D:\Anaconda3\lib\site-packages\torch\optim\optimizer.py", line 39, in __init__

torch.typename(params))

TypeError: params argument given to the optimizer should be an iterable of Tensors or dicts, but got torch.FloatTensor

torch.optim.SGD([weights], lr=0.01)
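The fix in context, as a minimal sketch with a dummy loss: the optimizer expects an iterable of tensors, so a single tensor is wrapped in a list.

```python
import torch

weights = torch.ones(4, requires_grad=True)

# Pass an iterable of tensors, not the bare tensor.
optimizer = torch.optim.SGD([weights], lr=0.01)

loss = (weights * 3).sum()
loss.backward()          # weights.grad is 3 for every entry

optimizer.step()         # weights <- weights - lr * grad
optimizer.zero_grad()    # clear gradients before the next iteration

print(weights)
```

Depending on the PyTorch version, `zero_grad()` either fills `weights.grad` with zeros or sets it to `None`.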

I'm not sure how you managed to be unclear on the third video of the series.

What you said about gradients, .backward(), .step(), and .zero_grad() was not clear at all.

Too many advertisements

Great work!