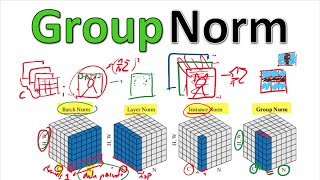

Comparison of Batch, Layer, Instance and Group Normalization

- Published 20 Oct 2021

- Subscribe To My Channel www.youtube.com/@huseyin_ozde...

* Comparison of Batch Normalization, Layer Normalization, Instance Normalization and Group Normalization on a Convolutional Layer output
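The four methods differ mainly in which axes of the convolutional output the mean and variance are computed over. The following is a minimal NumPy sketch of that difference, not code from the video. It assumes an output tensor of shape `(N, C, H, W)` (batch, channels, height, width), leaves out the learnable scale and shift parameters, and uses hypothetical helper names such as `normalize`.

```python
import numpy as np

def normalize(x, axes, eps=1e-5):
    """Standardize x using the mean and variance taken over `axes`."""
    mean = x.mean(axis=axes, keepdims=True)
    var = x.var(axis=axes, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def batch_norm(x):
    # One mean/variance per channel, shared across the batch and spatial dims.
    return normalize(x, (0, 2, 3))

def layer_norm(x):
    # One mean/variance per sample, shared across channels and spatial dims.
    return normalize(x, (1, 2, 3))

def instance_norm(x):
    # One mean/variance per (sample, channel) pair, over spatial dims only.
    return normalize(x, (2, 3))

def group_norm(x, num_groups):
    # Channels are split into groups; one mean/variance per (sample, group).
    n, c, h, w = x.shape
    assert c % num_groups == 0, "channels must divide evenly into groups"
    grouped = x.reshape(n, num_groups, c // num_groups, h, w)
    return normalize(grouped, (2, 3, 4)).reshape(n, c, h, w)

# Illustrative data: 4 samples, 6 channels, 5x5 feature maps (assumed sizes).
rng = np.random.default_rng(0)
x = rng.normal(loc=3.0, scale=2.0, size=(4, 6, 5, 5))
```

With a single group, `group_norm` reduces over the same elements as `layer_norm`, and with one group per channel it reduces over the same elements as `instance_norm`.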

All images and animations in this video belong to me

References

Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift

Sergey Ioffe, Christian Szegedy

arxiv.org/abs/1502.03167

Layer Normalization

Jimmy Lei Ba, Jamie Ryan Kiros, Geoffrey E. Hinton

arxiv.org/abs/1607.06450

Instance Normalization: The Missing Ingredient for Fast Stylization

Dmitry Ulyanov, Andrea Vedaldi, Victor Lempitsky

arxiv.org/abs/1607.08022

Group Normalization

Yuxin Wu, Kaiming He

arxiv.org/abs/1803.08494

The row and column labels are confusing.

"Feature map i" should be "Data point i"

"Filter j" should be "Feature (map) j"

The figure shows the output of a convolutional layer, not its input, and this is stated next to the figure.

Layer normalization is applied to the output of the previous layer, so what it normalizes here is that convolutional output.

The row labelled "Feature map i" shows the results of convolving every filter with input i of the batch.
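The row and column reading described in this thread can be illustrated with a small sketch. It assumes the figure's grid corresponds to a tensor of shape `(N, C, H, W)`, with row i holding the responses of all C filters to input i and column j holding the responses of filter j to every input. The sizes and variable names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
N, C, H, W = 3, 4, 2, 2                    # assumed batch, filter and map sizes
conv_out = rng.normal(size=(N, C, H, W))   # stand-in for a conv layer output

# Row i of the grid: every filter's response to input i of the batch.
row_i = conv_out[1]       # shape (C, H, W)

# Column j of the grid: filter j's response to every input of the batch.
col_j = conv_out[:, 2]    # shape (N, H, W)

# Layer normalization takes one mean per row (per input).
layer_norm_means = conv_out.mean(axis=(1, 2, 3))   # shape (N,)

# Batch normalization takes one mean per column (per filter).
batch_norm_means = conv_out.mean(axis=(0, 2, 3))   # shape (C,)
```

Under this reading, layer normalization works along the rows of the grid and batch normalization along its columns.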