Fourier Neural Operators (FNO) in JAX

- Published 13 Jun 2024

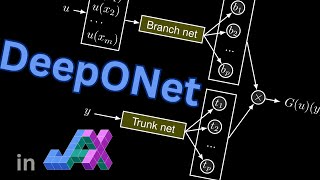

- Neural Operators are mappings between (discretized) function spaces, for example from the initial condition (IC) of a PDE to its solution at a later point in time. FNOs realize such a mapping with spectral convolutions, which give them multiscale properties. Let's code a simple example in JAX: github.com/Ceyron/machine-lea...
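The spectral convolution mentioned above can be sketched in a few lines of plain JAX. This is a minimal sketch, not the code from the video: it assumes a channels-first layout `(channels, n_points)` on a periodic, equally spaced grid, and the names `spectral_conv_1d` and `init_spectral_weights` as well as the weight scaling are illustrative choices. The steps are: real FFT, keep the lowest `modes` coefficients, mix channels per mode with complex weights, set all higher modes to zero, inverse FFT.

```python
import jax
import jax.numpy as jnp


def init_spectral_weights(key, in_channels, out_channels, modes):
    """Random complex weights of shape (in_channels, out_channels, modes); scaling is illustrative."""
    scale = 1.0 / (in_channels * out_channels)
    key_re, key_im = jax.random.split(key)
    shape = (in_channels, out_channels, modes)
    real = jax.random.uniform(key_re, shape, minval=-scale, maxval=scale)
    imag = jax.random.uniform(key_im, shape, minval=-scale, maxval=scale)
    return real + 1j * imag


def spectral_conv_1d(x, weights, modes):
    """Spectral convolution of a real signal x of shape (in_channels, n_points)."""
    n_points = x.shape[-1]
    # 1) Real FFT along space: (in_channels, n_points // 2 + 1) complex coefficients
    x_hat = jnp.fft.rfft(x, axis=-1)
    # 2) Keep only the lowest `modes` coefficients and mix channels per mode:
    #    out[o, k] = sum_i x_hat[i, k] * W[i, o, k]
    out_low = jnp.einsum("ik,iok->ok", x_hat[:, :modes], weights)
    # 3) All higher modes are set to zero
    out_hat = jnp.zeros((weights.shape[1], n_points // 2 + 1), dtype=out_low.dtype)
    out_hat = out_hat.at[:, :modes].set(out_low)
    # 4) Inverse real FFT back to the original grid resolution
    return jnp.fft.irfft(out_hat, n=n_points, axis=-1)


key_w, key_x = jax.random.split(jax.random.PRNGKey(0))
w = init_spectral_weights(key_w, in_channels=2, out_channels=3, modes=16)
x = jax.random.normal(key_x, (2, 256))
y = spectral_conv_1d(x, w, modes=16)
print(y.shape)  # (3, 256)
```

Because the weights act on Fourier modes rather than on grid points, the same `w` can be applied to a finer grid: `spectral_conv_1d(jax.random.normal(key_x, (2, 1024)), w, modes=16)` has shape `(3, 1024)`. This is the mechanism behind zero-shot super-resolution.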

-------

👉 This educational series is supported by the world leaders in integrating machine learning and artificial intelligence with simulation and scientific computing, Pasteur Labs and the Institute for Simulation Intelligence. Check out simulation.science/ for more on their pursuit of 'Nobel-Turing' technologies (arxiv.org/abs/2112.03235), and for partnership or career opportunities.

-------

📝 : Check out the GitHub Repository of the channel, where I upload all the handwritten notes and source-code files (contributions are very welcome): github.com/Ceyron/machine-lea...

📢 : Follow me on LinkedIn (felix-koehler) or Twitter (felix_m_koehler) for updates on the channel and other cool Machine Learning & Simulation stuff.

💸 : If you want to support my work on the channel, you can become a patron on Patreon: mlsim

🪙: Or you can make a one-time donation via PayPal: www.paypal.com/paypalme/Felix...

-------

⚙️ My Gear:

(Below are affiliate links to Amazon. If you decide to purchase the product or something else on Amazon through one of these links, I earn a small commission.)

- 🎙️ Microphone: Blue Yeti: amzn.to/3NU7OAs

- ⌨️ Logitech TKL Mechanical Keyboard: amzn.to/3JhEtwp

- 🎨 Gaomon Drawing Tablet (similar to a WACOM Tablet, but cheaper, works flawlessly under Linux): amzn.to/37katmf

- 🔌 Laptop Charger: amzn.to/3ja0imP

- 💻 My Laptop (generally I like the Dell XPS series): amzn.to/38xrABL

- 📱 My Phone: Fairphone 4 (I love the sustainability and repairability aspect of it): amzn.to/3Jr4ZmV

If I had to purchase these items again, I would probably change the following:

- 🎙️ Rode NT: amzn.to/3NUIGtw

- 💻 Framework Laptop (I do not get a commission here, but I love the vision of Framework. It will definitely be my next Ultrabook): frame.work

As an Amazon Associate I earn from qualifying purchases.

-------

Timestamps:

00:00 Intro

01:09 What are Neural Operators?

03:11 About FNOs and their multiscale property

05:05 About Spectral Convolutions

09:17 A "Fourier Layer"

10:18 Stacking Layers with Lifting & Projection

11:01 Our Example: Solving the 1d Burgers equation

12:04 Minor technicalities

13:07 Installing and Importing packages

14:02 Obtaining the dataset and reading it in

15:44 Plot and Discussion of the dataset

17:51 Prepare training & test data

22:23 Implementing Spectral Convolution

34:25 Implementing a Fourier Layer/Block

37:48 Implementing the full FNO

43:14 A simple dataloader in JAX

44:18 Loss Function & Training Loop

52:34 Visualize loss history

53:31 Test prediction with trained FNO

57:13 Zero-Shot superresolution

59:59 Compute error as reported in FNO paper

01:03:45 Summary

01:05:46 Outro

Super cool as always. Some feedback to enhance clarity: when writing the modules (SpectralConv1d, FNOBlock1d, FNO1d), overlaying the flowchart on the right-hand side to show which block the code corresponds to would be really helpful. I felt a bit lost in these parts.

Very cool video! The walkthrough of this alternative implementation of the 1D FNO is super useful for newcomers like myself :)

Great to hear! 😊 Thanks for the kind feedback ❤️

Thank you for the great video, and a very clean implementation as well; satisfying to watch. Eagerly waiting for your video on using FNOs for next-state prediction / as a recurrent NN.

Thanks a lot 😊

Yes, definitely, the autoregressive prediction of transient problems will be one of the future videos 👍

I was looking at the reference code that you mentioned in the Jupyter notebook and found something in the 2D version that I can't understand:

out_ft[:, :, : self.mode1, : self.mode2] = self.compl_mul2d(x_ft[:, :, : self.mode1, : self.mode2], self.weights1)

out_ft[:, :, -self.mode1 :, : self.mode2] = self.compl_mul2d(x_ft[:, :, -self.mode1 :, : self.mode2], self.weights2)

I don't understand why there are two weight tensors (weights1, weights2) and why they also take the upper mode1 frequencies (the -self.mode1: slice). Can you explain this? Thanks for your video.
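One way to see why two weight tensors are needed: `rfft2` only halves the *last* axis, so along that axis the slice `:mode2` already covers all low frequencies. Along the first spatial axis, however, the FFT output is ordered as non-negative frequencies followed by negative ones, so the low frequencies |k1| < mode1 sit in two places: the first `mode1` rows and the last `mode1` rows (the "upper" slice). Each of these two corners gets its own, independently learned weights. Below is a minimal JAX sketch of this idea, assuming channels-first inputs `(channels, nx, ny)` with `nx >= 2 * mode1`; the function name is hypothetical and it does not reproduce the reference code line by line.

```python
import jax
import jax.numpy as jnp

# FFT ordering along a full (non-halved) axis: non-negative frequencies first, then negative ones
print(jnp.fft.fftfreq(8, d=1 / 8))  # values 0, 1, 2, 3, -4, -3, -2, -1


def spectral_conv_2d(x, weights1, weights2, mode1, mode2):
    """x: real (in_channels, nx, ny); weights1/weights2: complex (in_channels, out_channels, mode1, mode2)."""
    _, nx, ny = x.shape
    # Full spectrum along nx, only non-negative frequencies along ny: (in_channels, nx, ny // 2 + 1)
    x_hat = jnp.fft.rfft2(x, axes=(-2, -1))
    out_channels = weights1.shape[1]
    out_hat = jnp.zeros((out_channels, nx, ny // 2 + 1), dtype=x_hat.dtype)

    def mix(block, w):
        # Per-mode channel mixing: out[o, p, q] = sum_i block[i, p, q] * w[i, o, p, q]
        return jnp.einsum("ipq,iopq->opq", block, w)

    # Corner 1: k1 = 0, ..., mode1 - 1 (first rows)
    out_hat = out_hat.at[:, :mode1, :mode2].set(mix(x_hat[:, :mode1, :mode2], weights1))
    # Corner 2: k1 = -mode1, ..., -1 (last rows, i.e. the "upper" slice)
    out_hat = out_hat.at[:, -mode1:, :mode2].set(mix(x_hat[:, -mode1:, :mode2], weights2))
    return jnp.fft.irfft2(out_hat, s=(nx, ny), axes=(-2, -1))


# A field varying only along x: cos(2*pi*x) has coefficients at k1 = +1 AND k1 = -1
nx = ny = 16
xs = jnp.arange(nx) / nx
field = (jnp.cos(2 * jnp.pi * xs)[:, None] * jnp.ones((1, ny)))[None]  # (1, 16, 16)
ones = jnp.ones((1, 1, 4, 4), dtype=jnp.complex64)
full = spectral_conv_2d(field, ones, ones, 4, 4)  # both corners kept
one_corner = spectral_conv_2d(field, ones, jnp.zeros_like(ones), 4, 4)  # negative k1 dropped
print(jnp.max(jnp.abs(full - field)))  # close to zero
print(jnp.max(jnp.abs(one_corner - field)))  # clearly non-zero
```

With the negative-frequency corner zeroed, even this single low mode is no longer reproduced, which is why both corners carry weights. No extra corner is needed along the last axis, because `rfft2` never stores its negative frequencies (they follow from conjugate symmetry of a real signal).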

great effort

Thanks a lot 😊

Do I understand correctly that you add the mesh coordinates as a feature to the input data (somewhere at the beginning you concatenate the mesh to a)? Is that really necessary? I imagine that this will just add a Fourier transform of equally spaced point coordinates to the latent space, which will be the same for all test data.

Yes, that's right :). I also think that it is not necessary, but I wanted to follow the original FNO implementation closely, see here: github.com/neuraloperator/neuraloperator/blob/af93f781d5e013f8ba5c52baa547f2ada304ffb0/fourier_1d.py#L99

I was asking myself the same question: you are basically adding a constant input to your network, which will likely be ignored. I understand it for the sake of staying close to the original implementation, but you can likely drop this channel entirely and it would work just as well.

EDIT: Now that I think of it, maybe it helps with the zero-shot upsampling? But then again, you always train on the same grid, so the second channel was probably ignored (as it's constant).
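Concatenating the mesh to the input, as discussed in this thread, amounts to stacking a coordinate channel onto the initial condition. A minimal sketch, assuming a batch of 1D states shaped `(batch, n_points)`, a channels-first output, and equally spaced coordinates on [0, 1]; `add_grid_channel` is a hypothetical helper, not the function from the video.

```python
import jax
import jax.numpy as jnp


def add_grid_channel(a):
    """Turn (batch, n_points) into (batch, 2, n_points) with channels [state, x-coordinate]."""
    batch, n_points = a.shape
    # Equally spaced mesh on [0, 1] (assumed domain); identical for every sample
    grid = jnp.linspace(0.0, 1.0, n_points)
    grid = jnp.broadcast_to(grid, (batch, n_points))
    return jnp.stack([a, grid], axis=1)


a = jax.random.normal(jax.random.PRNGKey(0), (4, 256))
inputs = add_grid_channel(a)
print(inputs.shape)  # (4, 2, 256)
```

The second channel is the same for every sample, which is the point raised above: on a fixed training grid it carries no sample-specific information. For a finer evaluation mesh, the helper simply builds the finer coordinate array, so the extra channel stays consistent with the new resolution.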

Awesome stuff. I wonder if you could do a similar video on the new Neural Spectral Methods paper?

Thanks 🤗

That's a cool paper; I just skimmed over it. It's probably a good idea to start covering more recent papers. I'll put it on my to-do list. I still have some other content in the pipeline that I want to do first, but I will come back to it later.

Thanks for the suggestion 👍

Thank you for the detailed video! I have a question regarding the zero-shot super-resolution. If we train for 1 second as shown in your video, is it possible to run the test for, let's say, 5 seconds of propagation? Additionally, is it possible to plot the propagation from t=0 to t=1, or can it only provide the result? Since the training data can include the propagation as well, perhaps this information can be utilized in the training, not just the starting point. Thanks for your help!

Hi, thanks a lot for the kind feedback :).

Here, in this video, the FNO is only trained to predict 1 time unit into the future, and the dataset consists only of these input-output pairs. I assume you are interested in the states at times between 0 and 1 and beyond 1. There are two ways to obtain spatiotemporal information: either the FNO is set up to return not only the state at t=1 but the states at multiple time levels (t=0.1, 0.2, ..., 2.9, 3.0), or we use an FNO with a fixed dt (either the 1.0 of this video or a smaller value) to obtain a trajectory autoregressively (ua-cam.com/video/NlQ1N3W3Wms/v-deo.html). For either approach, a corresponding dataset (or a reference solver to actively create the references we need) is required.
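The autoregressive option can be sketched with `jax.lax.scan`: a one-step operator is applied repeatedly, and each prediction is fed back in as the next input. This is a minimal sketch under assumptions: `step_fn` stands for any trained one-step model with matching input and output shapes (e.g. an FNO trained with a fixed dt), and the toy halving step below is only a placeholder so the code runs on its own.

```python
import jax
import jax.numpy as jnp


def rollout(step_fn, u0, n_steps):
    """Return the trajectory [u0, u1, ..., u_n_steps], stacked along a new leading axis."""

    def body(u, _):
        u_next = step_fn(u)
        # Carry the new state forward and also record it as an output
        return u_next, u_next

    _, states = jax.lax.scan(body, u0, xs=None, length=n_steps)
    return jnp.concatenate([u0[None], states], axis=0)


# Placeholder one-step "model": halves the state each step (stand-in for a trained FNO)
toy_step = lambda u: 0.5 * u
u0 = jnp.ones((1, 64))  # (channels, n_points)
trajectory = rollout(toy_step, u0, n_steps=5)
print(trajectory.shape)  # (6, 1, 64)
```

Since every step consumes the model's own previous output, prediction errors can accumulate over long rollouts, and the time resolution of the trajectory equals the dt the one-step model was trained for.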

great. thanks

You are welcome! 😊

I have a question about R: only the low frequencies are kept, but in some cases keeping the high frequencies can be relevant.

Hi, yes, that's indeed a valid concern. The paper by Li et al. argues that the nonlinearities recover the higher modes. However, how well they do this is a question for experimental evidence.
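The argument that nonlinearities recover higher modes can at least be illustrated in a few lines; this only shows the mechanism and says nothing about how well it works in a trained FNO. A signal containing only Fourier mode 1, i.e. something a spectral convolution that keeps modes 0 and 1 could output, gains energy in higher modes after a pointwise activation. GELU as the activation, the grid size, and the cutoff are assumptions for illustration.

```python
import jax
import jax.numpy as jnp

n = 64
x = jnp.arange(n) / n
# Band-limited signal: only mode k = 1 is present
u = jnp.sin(2 * jnp.pi * x)

spectrum_before = jnp.abs(jnp.fft.rfft(u))
# Pointwise nonlinearity, as applied after a Fourier layer
spectrum_after = jnp.abs(jnp.fft.rfft(jax.nn.gelu(u)))

cutoff = 2  # modes k >= 2 would have been discarded by the truncation
print("energy above cutoff before:", jnp.sum(spectrum_before[cutoff:] ** 2))
print("energy above cutoff after: ", jnp.sum(spectrum_after[cutoff:] ** 2))
```

Whether the recovered high-frequency content is the *right* content remains the open question from the reply above; the snippet only shows that the truncated modes are not lost for good.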

For benchmarks against UNets, check, for instance, the PDEArena paper ("Towards multiscale....") by Gupta and Brandstetter.

Where in the code is the Burgers equation used?

Is it possible to mix PINN models with FNO models?

Hi, thanks for the questions 😊

1) The Burgers equation is "within the dataset": it contains the (discretized) state of the Burgers equation at two time levels. Say you had a simulator for the Burgers equation and used it to integrate the dynamics for 1 time unit, starting from the first state; you would arrive at the second state. We want to learn this mapping.

2) Yes, there are definitely approaches to incorporating PDE constraints via autodiff into the neural operator learning process (see, for instance, the paper by Wang et al. on physics-informed DeepONets or the paper by Li et al. on physics-informed neural operators). This can be helpful but does not have to be. In their original conception, FNOs are trained in a purely data-driven way.

I am having a very hard time understanding your data preparation. It should have been taught more slowly.

Hi, thanks for letting me know. I'm sorry to hear that. 😐

What was too fast for you? The speed at which I talk, or did I spend too little time explaining the involved commands? Can you also pinpoint specific operations that were hard for you to comprehend? Then I can cover them in greater detail in a future video. 😊
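For readers in the same situation, here is a compact sketch of the kind of data preparation discussed in this thread: subsampling the fine-grid data, adding a channel axis, splitting into training and test sets, and drawing shuffled minibatches. It is not the video's code. It assumes the initial and final states are already loaded as arrays `a` and `u` of shape `(n_samples, n_points_fine)`; the helper names, the subsampling factor, and the split size are hypothetical, and random arrays stand in for the dataset so the snippet runs on its own.

```python
import jax
import jax.numpy as jnp


def prepare_data(a, u, subsample, n_train):
    """Subsample in space, add a channel axis, and split into train/test pairs."""
    # Keep every `subsample`-th grid point: (n_samples, n_points_fine // subsample)
    a, u = a[:, ::subsample], u[:, ::subsample]
    # Channels-first layout: (n_samples, 1, n_points)
    a, u = a[:, None, :], u[:, None, :]
    return (a[:n_train], u[:n_train]), (a[n_train:], u[n_train:])


def minibatches(key, inputs, targets, batch_size):
    """Yield shuffled (inputs, targets) batches; the last incomplete batch is dropped."""
    n = inputs.shape[0]
    perm = jax.random.permutation(key, n)
    for start in range(0, n - batch_size + 1, batch_size):
        idx = perm[start:start + batch_size]
        yield inputs[idx], targets[idx]


# Stand-in data: 64 samples on a fine grid of 1024 points
key = jax.random.PRNGKey(0)
a = jax.random.normal(key, (64, 1024))
u = 2.0 * a  # placeholder for the solution after one time unit
(train_x, train_y), (test_x, test_y) = prepare_data(a, u, subsample=32, n_train=48)
print(train_x.shape, test_x.shape)  # (48, 1, 32) (16, 1, 32)
for batch_x, batch_y in minibatches(key, train_x, train_y, batch_size=16):
    print(batch_x.shape, batch_y.shape)  # (16, 1, 32) (16, 1, 32)
```

Shuffling the indices once per epoch and slicing them keeps input and target pairs aligned, which is the main thing that can go wrong when writing such a loader by hand.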