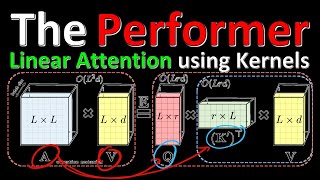

LambdaNetworks: Modeling long-range Interactions without Attention (Paper Explained)

Вставка

- Опубліковано 18 тра 2024

- #ai #research #attention

Transformers, having already captured NLP, have recently started to take over the field of Computer Vision. So far, the size of images as input has been challenging, as the Transformers' Attention Mechanism's memory requirements grows quadratic in its input size. LambdaNetworks offer a way around this requirement and capture long-range interactions without the need to build expensive attention maps. They reach a new state-of-the-art in ImageNet and compare favorably to both Transformers and CNNs in terms of efficiency.

OUTLINE:

0:00 - Introduction & Overview

6:25 - Attention Mechanism Memory Requirements

9:30 - Lambda Layers vs Attention Layers

17:10 - How Lambda Layers Work

31:50 - Attention Re-Appears in Lambda Layers

40:20 - Positional Encodings

51:30 - Extensions and Experimental Comparisons

58:00 - Code

Paper: openreview.net/forum?id=xTJEN...

Lucidrains' Code: github.com/lucidrains/lambda-...

Abstract:

We present a general framework for capturing long-range interactions between an input and structured contextual information (e.g. a pixel surrounded by other pixels). Our method, called the lambda layer, captures such interactions by transforming available contexts into linear functions, termed lambdas, and applying these linear functions to each input separately. Lambda layers are versatile and may be implemented to model content and position-based interactions in global, local or masked contexts. As they bypass the need for expensive attention maps, lambda layers can routinely be applied to inputs of length in the thousands, en-abling their applications to long sequences or high-resolution images. The resulting neural network architectures, LambdaNetworks, are computationally efficient and simple to implement using direct calls to operations available in modern neural network libraries. Experiments on ImageNet classification and COCO object detection and instance segmentation demonstrate that LambdaNetworks significantly outperform their convolutional and attentional counterparts while being more computationally efficient. Finally, we introduce LambdaResNets, a family of LambdaNetworks, that considerably improve the speed-accuracy tradeoff of image classification models. LambdaResNets reach state-of-the-art accuracies on ImageNet while being ∼4.5x faster than the popular EfficientNets on modern machine learning accelerators.

Authors: Anonymous

Links:

UA-cam: / yannickilcher

Twitter: / ykilcher

Discord: / discord

BitChute: www.bitchute.com/channel/yann...

Minds: www.minds.com/ykilcher

Parler: parler.com/profile/YannicKilcher

LinkedIn: / yannic-kilcher-488534136

If you want to support me, the best thing to do is to share out the content :)

If you want to support me financially (completely optional and voluntary, but a lot of people have asked for this):

SubscribeStar: www.subscribestar.com/yannick...

Patreon: / yannickilcher

Bitcoin (BTC): bc1q49lsw3q325tr58ygf8sudx2dqfguclvngvy2cq

Ethereum (ETH): 0x7ad3513E3B8f66799f507Aa7874b1B0eBC7F85e2

Litecoin (LTC): LQW2TRyKYetVC8WjFkhpPhtpbDM4Vw7r9m

Monero (XMR): 4ACL8AGrEo5hAir8A9CeVrW8pEauWvnp1WnSDZxW7tziCDLhZAGsgzhRQABDnFy8yuM9fWJDviJPHKRjV4FWt19CJZN9D4n - Наука та технологія

In a nutshell, my understanding is the Lambda Layer works using a similar rearranging trick as in "Transformers are RNNs". Instead of doing attention over positions (i.e. NxN), it ends up doing attention over features (i.e. DxK). That's why it isn't O(N^2).

This is also why you need to change the positional encoding strategy to use a separate path. Otherwise it will be difficult for the network to properly route info based on positional information.

This is an excellent insight. Thank you!

It feels like the stage is set for a more general theory that can unify all of these ideas into one.

So much! It really feels like we're going around in circles but we're approaching to the right answer!

Lol, was not expecting a shoutout at the end :D Thanks for another great video!

good job! I really like the use of einsum.

thanks for the code! have you tried reproducing some of the results?

I was just thinking about the next Transformer Yannic would cover and here it comes!

Please keep the nice work!

"Attention mechanism extremely briefly, extremely briefly" 🤣😅🤣😅 I guess it's for the people watching your videos for the first time. Love your content

Another day, another great paper explanation.

Grate Channel!!! It would be nice to see a review paper/video comparing all the longformers, sparse transformers, linear transformers, linformers, reformers, performers, lambdanetworks, ...

That would be great

I think the most important thing is in Appendix C : "LambdaNetworks can alternatively be viewed as an extension of HyperNetworks (Ha et al., 2016) that dynamically compute their computations based on the inputs contexts."

It may be much easier to understand the paper from this perspective XD

Thanks Yannic, you made my day.

this is pretty similar to diff pooling in gnn, where we just get an indicator matrix through some blackbox transformation.

27:36 I guess there's (matrix of shape k x v) instead of - a scalar

An implementation of Performer, a linear attention-based transformer variant with a Fast Attention Via positive Orthogonal Random features approach (FAVOR+) video????????????

I was in the middle of asking Yanic to do this paper, and before I even finished - walla!

Interesting explanation, Looking for Yannic Kilcher V2 i.e Code explainer maybe if you could add 10 to 15 min at the end to explain original paper codes. That would be much helpful. Thank you for the great effort.

this would be super helpful, especially to budding researchers!

Hi, I am quite new to the basic transformer .... And there seem to be many new transformers recently. Could any please share the fundamental video for the Attention? I am interested to see where it begins...

23:30 - The authors fix this mistake. probably thanks to you Yannic (:

Bunch of ideas jumped into my head to apply it in vision, since the dimension problem can be solved. Time to hoarding up papers bois!

Hey, what about the diff between local attention and deformable convolutions (DCN)?

“This is Quick Ben’s game, O Elder. The bones are in his sweaty hands and they have been for some time. Now, if at his table you’ll find the Worm of Autumn, and the once Lord of Death, and Shadowthrone and Cotillion, not to mention the past players Anomander Rake and Dessembrae, and who knows who else, well - did you really believe a few thousand damned Nah’ruk could take him down? The thing about Adaephon Delat’s game is this: he cheats.” To give the turtles some prime reading material..

nice :-)

Hi,

Thank you for the great videos.

I have a question, what is the name of the software you are using to read and annotate the paper?

It is OneNote, most likely

He did a video on the tools (including all the software) he uses, and another on how he reads papers, you can find those on his channel

Thank you for your responses. @daniel do you know what is the name of the video please!

@@sohaibattaiki9579 ua-cam.com/video/H3Bhlan0mE0/v-deo.html&ab_channel=YannicKilcher

ua-cam.com/video/Uumd2zOOz60/v-deo.html&ab_channel=YannicKilcher

Hey, I would like to know what app are you using to annotate the pdf. Thank you.

I am here after 40 seconds of upload

did you make a video about efficient Nets ?

i can't seem to find it

not yet

This architecture has the downside that it has a fixed sequence length due to the learned positional embeddings, being independent of the sequence length is a nice property of MHA in the original Transformer.

just in case:

MHA = Multi-Headed Attention

as long as the pictures are the same resolution that shouldnt be a big problem, right?

@@Atlantis357 yeah, for images it shouldn't matter much since you can always resize. But for text you don't have that luxurty.

@@CristianGarcia We can do padding in case of texts. Please correct me if I am going wrong.

So the lambda function is basically like the Embedding matrix E for text sequences? They learn embeddings of patches/pixels?

In. away, yes

I know you said to ignore it, but what does the intra-depth hyperparameter actually mean?

I think intra-depth is the intermediate dimension in the query, perhaps understood as the weight of each key relative to value as it acts on the information of the context element.

noob question: which app are you using for the paper annotations?

OneNote

I may be mistaken but: the speed-accuracy chart they show is unclear. Are we talking about inference speed (I'm really unsure, the paper is not clear)? If so, how come baseline resnet and resnet+se seem better than efficientnet (all appear on the top left of the curve, contradicts the effnet paper)? Could it be because of the use of a bag of tricks during training (ex data augmentation)? In that case, the performance cannot be claimed to come from the architecture used. Also it seems it would contradicts table 6 where we see the amount of flops is marginally reduced for comparable accuracy. As a result, the 4.5x speedup they claim seems a bit missleading.

true, I don't know either

Seems a lot like it's replacing a pooling layer with a learnable fn

sir, you are wrong. lambda is not the direct result of matrix multiplication of K and V transpose, for each element you get a kxd (as in your notation) matrix from multiplying the transpose of each row of K and each row of V, adding m matrixes together, you get the lambda.

hello, Yannic. Thank you for your great review videos! I am currently doing Master degree and want to apply phD to ETH Zurich, once everything goes fine. However, I have almost no information how phD life is in ETH. Would you mind if I ask some question about phD course in ETH via email? (Actually, I couldn't find your email account on web.) Thanks.

you can dm me on twitter / linkedin

Which software do you use to annotate this PDFs?

OneNote

33:40 If the keys are "fixed", doesnt that make it equivalent to a convolutional kernel?

Quite a large number of papers try to get rid of the quadratic attention but I strongly believe that there is some no-free-lunch effect going on. You actually need the bandwidth of a quadratic attention so that enough information can be backpropagated.

Performers claim to be a provably accurate approximation, so idk about that.

Fair, but if the pattern you're looking for is relatively low frequency (i.e big), bandwidth may not be a problem, since you already need to throw out high frequency details.

yea that's a reasonable claim, maybe not the convolution we know, but kind of

What is this program that you're using to annotate pdfs?

OneNote

@@YannicKilcher thx

Why do you keep going on about the "double-blind reviewing" thing? In ML right now, double blind reviewing gives the author an opt-in ability to protect their identity. Moreover, the author is not clearly listed on the page, so a reviewer won't know it by default. Reviewers and readers still have the option to search for the name of the paper and find it if it's on arxiv, where authors have the right to post it.

I think this system is a good compromise, since it gives a pretty good amount of anonymity, especially for those who want the anonymity, and it doesn't restrict the authors much.

Hi Yannic. Loading a large ram with a 40Kx40K matrix is certainly possible, even on modern pc pseudo-home computers. I know it sound ridiculous, but consider... a 4 stick ddr4 ram of size 512 giga bytes is about US$ 3500. A Supermicro motherboard may have 16 ddr4 memory stick slots. This means for about US$ 14000, one may have 2 tera bytes of RAM in a "home" computer. This is certainly enough to contain the matrices in question, and fast enough to do the operations. Some very special programming may be required, but if the task were very easy, it would have been done a long time ago. (Or wait 2 or 3 years, and the price will drop in half.)

If you don't want to pay so much for memory, you might page the matrices in and out of disk memory. A 16tb Red Drive is about US$ 350. If you raid 4 of these together, you will have something like 64 tera bytes of disk space to work with. Your giant multiplies will take time, but so what?

If you have access to a modern supercomputer, you may just be able to write something like Matlab code to do the job, tho I don't know if Matlab is set up for large matrices, or if you will have to get someone to recompile the Matlab kernel (a tough thing).

In any event, it might be worth attempting to analyze some images with this direct very brute force method. Sometimes brute force is fun. And the sheer exercise of it might be valuable for the right student or researcher.

Btw, a fourier xform, or a laplace xform, or some such a thing, of critical parts of the algorithm may make the whole thing a LOT more tractable; although, you would have to work for a while to show this. Cheers. Also: cool channel, dude.

RE: "You can't just wait longer and have more memory..."

Uhhh... this is exactly what swap files were invented for... -- the issue is that the software wants the entire transformer network in memory all at once... which is just a silly limitation of the software.

Tldr; I didn't completely hate this paper because I didn't completely understand it 😉

Before assuming I'm throwing shade, go to 01:48 in the video 😛

Here are simplified the equations from the paper: vaclavkosar.com/ml/Lamda-Networks-Transform-Self-Attention

Why do so many papers not have these nice diagrams for how all their variables interact with each other, like you outlined here? It feels almost intentionally obtuse.

Can I join your discord?

sure, link in the description

Thanks for the videos. Yannic, can you play this back to yourself on high volume? You'll notice that your swallowing is **extremely** loud. This is because your mic is likely right next to your mouth - maybe back up a foot or two? Again, love your posts but they're really hard to listen to sometimes.

No offense to the first plot but who cares about latency in training