far1din

Joined 2 Sep 2022

Educate yourself.

X: x.com/far1din_

Github: github.com/far1din

Donations: www.paypal.com/donate/?hosted_button_id=K6ER3TW5J32LN

Cubic Formula. Wait what? #SoMEpi

In this video, we'll explore how to solve third-degree polynomial equations using Cardano's method. We'll start with depressed cubic equations and learn how to transform any cubic equation into its depressed form. Then, we'll use the cubic formula to find a solution and finally, translate that solution back to the original equation, allowing us to solve any cubic equation.
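The process described above can be sketched in Python. This is a minimal sketch, not the code from the video: the names `real_cbrt` and `solve_cubic_one_real` are hypothetical, the depressed form is assumed to be written as y^3 + m*y = n, and the sketch only handles the case n^2/4 + m^3/27 >= 0 (otherwise the square root is complex and this real-only version raises an error).

```python
import math

def real_cbrt(v: float) -> float:
    """Real cube root that also works for negative inputs."""
    return math.copysign(abs(v) ** (1.0 / 3.0), v)

def solve_cubic_one_real(a: float, b: float, c: float, d: float) -> float:
    """Return one real root of a*x^3 + b*x^2 + c*x + d = 0 via Cardano's method."""
    if a == 0:
        raise ValueError("not a cubic: a must be non-zero")
    # Normalize so the leading coefficient is 1.
    B, C, D = b / a, c / a, d / a
    # Substituting x = y - B/3 removes the quadratic term: y^3 + m*y = n.
    m = C - B * B / 3.0
    n = -(2.0 * B**3 / 27.0 - B * C / 3.0 + D)
    inner = n * n / 4.0 + m**3 / 27.0
    if inner < 0:
        raise ValueError("three distinct real roots: needs complex arithmetic")
    root = math.sqrt(inner)
    # Cubic formula for the depressed equation.
    y = real_cbrt(n / 2.0 + root) + real_cbrt(n / 2.0 - root)
    # Translate the solution back to the original variable.
    return y - B / 3.0

# Example chosen for illustration: 2x^3 + 6x^2 + 18x - 26 = 0 has the root x = 1.
x = solve_cubic_one_real(2, 6, 18, -26)
print(round(x, 6))
```

The example reduces to the depressed equation y^3 + 6y = 20, whose solution y = 2 is shifted back by -b/(3a) = -1.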

Manim code: github.com/far1din/manim?tab=readme-ov-file#the-cubic-formula-wait-what

► SUPPORT THE CHANNEL

➡ Paypal: www.paypal.com/donate/?hosted_button_id=K6ER3TW5J32LN

These videos can take several weeks to make. Any donations towards the channel will be highly appreciated! 😄

► SOCIALS

X: x.com/far1din_

Github: github.com/far1din

----------- Content -----------

0:00 - Introduction

00:25 - Visualizing Depressed Cubic Equations

01:37 - Completing the Cube: Prelude to the Cubic Formula

02:47 - Deriving the Cubic Formula (Algebra)

05:18 - Converting a General Cubic Equation into a Depressed Form

07:02 - Solving Cubic Equations: The Entire Process

07:27 - A Simple Example

► Contributions

Intro background music: pixabay.com/music/pulses-intro-180695/

Background music by BlackByBeats: pixabay.com/music/solo-piano-ambient-piano-114-109400/

Pyramid Illustration by Storyset: www.freepik.com/free-vector/pyramid-concept-illustration_168303227.htm

Greek mythology Illustration by Freepik: www.freepik.com/free-vector/hand-drawn-greek-mythology-illustration_25793984.htm

Fibonacci sequence and other manim examples: slama.dev/manim/camera-and-graphs/

#cubicequation #cubicformula #cardano #cardanosmethod #polynomialequations #math #maths #algebra #visualization #animation #proof #manim #SoME4 #SoMEPI #SoMEπ

Views: 849

Videos

The Quadratic Formula from Scratch.

695 views · 11 months ago

In this video, we look at how to solve second-degree polynomial equations without the quadratic formula. We will instead use a technique called completing the square. Finally, we will proceed to prove the quadratic formula.

Training a Convolutional Neural Network (CNN)

6K views · 1 year ago

Visualizing a convolutional neural network through the training process. Watch a model evolve from untrained to trained, with comparisons at zero, one, five, and 15 epochs.

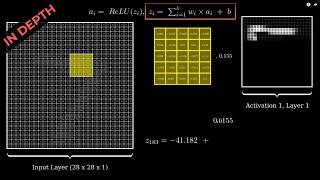

Convolutional Neural Networks from Scratch | In Depth

71K views · 1 year ago

Visualizing and understanding the mathematics behind convolutional neural networks, layer by layer. We are using a model pretrained on the MNIST dataset.

Backpropagation in Convolutional Neural Networks (CNNs)

43K views · 1 year ago

In this video, we look at backpropagation in a convolutional neural network (CNN). We use a simple CNN with no padding (padding = 0) and a stride of two (stride = 2).

Visualizing Convolutional Neural Networks | Layer by Layer

81K views · 2 years ago

Visualizing convolutional neural networks layer by layer. We are using a model pretrained on the MNIST dataset.

Great Explanation. Thank you very much.

Nope there are 3 results: 2, 3.17, and 17.1 approx; calculated from the last formula, the ± on the √ gives 4 variations, the 1st and 4th are the same, the 2nd & 3rd give the other 2 results, and there are 2 cubic roots, that's 3 results each

Very much a valid concern! From 5:04, you will see that we first solve for "t". Then, since u = m/t, we substitute in that solution for "t". However, since "u" is defined as a function of "t", we must pick the same "t" in both places. Hence, only two variations. In other words, x = t - u/3. Since u = m/t, we substitute u into the formula for x and get x = t - m/(3t). In practice, this means that in the written-out formula for x, which you see at 5:14, the cube root terms have to be the same. This is why 5.46 and -1.46 (presumably the other two variations) are not valid solutions at 7:47. I don't fully get how you got 3.17 and 17.1, though. That said, I fully understand the confusion that can occur when simply looking at the formula. To avoid it, you could write x = t - m/(3t) and have the definition of "t" written right beside it. Let me know if anything is unclear! 😃
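The point about picking the same "t" can be checked numerically. The sketch below is an illustration only: it assumes the depressed form x^3 + m*x = n with the example m = 6, n = 20 (an assumption that happens to reproduce the values 5.46 and -1.46 quoted above), and the helper names `real_cbrt` and `residual` are hypothetical.

```python
import math

def real_cbrt(v):
    """Real cube root that also works for negative inputs."""
    return math.copysign(abs(v) ** (1.0 / 3.0), v)

m, n = 6.0, 20.0  # assumed example: x^3 + 6x = 20
root = math.sqrt(n * n / 4.0 + m**3 / 27.0)
t_plus = real_cbrt(n / 2.0 + root)   # t taken with the "+" sign
t_minus = real_cbrt(n / 2.0 - root)  # t taken with the "-" sign

def residual(x):
    """How far x is from solving x^3 + m*x = n (0 means exact)."""
    return x**3 + m * x - n

# Same t in both terms of x = t - m/(3t): both sign choices give the same x.
consistent = [t - m / (3.0 * t) for t in (t_plus, t_minus)]
# Same sign in both cube roots of the written-out formula: not solutions.
mixed = [2.0 * t_plus, 2.0 * t_minus]

for x in consistent + mixed:
    print(round(x, 2), round(residual(x), 2))
```

Because t_plus * t_minus = -m/3, the term -m/(3t) is exactly the other cube root, which is why the two consistent choices collapse to a single value of x.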

@far1din Yes, I'm confused; Hubris, the pretext humans make "when they have figured out the Universe" ha! 😊 People in Math/Science like to discard results they don't want, as simple as that, even when clearly there should be more results, but people don't search for the truth, they search for some convenient truth

Very good

Amazing video!! :D

Thanks! 😄

Very good 😊😊

Thank you 🥺

Thank you! Now I'm one step closer to finishing a model for hw :)

You can do it!

❤❤

excellent explanation thanks

Glad it was helpful!

Beautiful🙌

Thank you 🙌

More amazing content like this will be appreciated.

Absolutely

Excellent video! Thanks for taking the time and breaking it down so clearly.

Very welcome!

How do we know how many layers we need, or how many filters at each layer? I mean, how can we construct our architecture?

Thank you for the source code. this will help me to create some content for my syllabus. with love <3

Glad it was helpful although not the most ideal code 😂

“Beautiful, isn’t it?”

Amazing CNN series, super intuitive and easy to understand!❤

Nice. I remember working on digit recognition using handcoded analysis of pixel runs a long time ago. It never worked properly 😂 And it was computationally intensive.

Good job, the explanation is super. I hope you do not stop making videos of this calibre. Did you use manim to make this video, or another video editor?

I'm new to machine learning and neural networks. Your video is very helpful. I have built a small python script just using numpy and I can train numerous samples. So this is a big picture question. Let's say I've trained my program on thousands of inputs and I'm satisfied. Now I want to see if it can recognize a new input, one not used in training. What weight and bias values do I use? After I'm finished with training, how do I modify the script to 'guess?' It would seem to me that back propagation isn't used because I don't actually have a 'desired' value so I'm not going to calculate loss. What weight and bias values do I use from the training sessions? There are dozens of videos and tutorials on training but I think the missing piece is what to do with the training program to make it become the 'trained' program, the one that guesses new inputs without back propagation.
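One common way to handle the situation described in this question is to keep the weight and bias values from the end of training and run only the forward pass on new inputs. The sketch below illustrates that idea with a tiny single-neuron model in plain Python; the function names, the OR-gate training data, and the hyperparameters are all assumptions made for the example, not taken from any of the videos.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(weights, bias, x):
    """Forward pass only: weighted sum plus bias, then sigmoid."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def train(samples, epochs=2000, lr=0.5):
    """Gradient descent on a single neuron; returns the final weights and bias."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, target in samples:
            error = forward(weights, bias, x) - target  # needs the target
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
            bias -= lr * error
    return weights, bias

def predict(weights, bias, x):
    """Inference: reuse the trained values, no target and no backpropagation."""
    return 1 if forward(weights, bias, x) >= 0.5 else 0

# Hypothetical training data: the logical OR of two inputs.
samples = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
weights, bias = train(samples)

# A new input that was not part of the training data.
print(predict(weights, bias, (0.9, 0.8)))
```

In a larger script, the same split applies: the trained weights and biases are saved (or simply kept in memory), and the "guessing" program is the training program with the loss and backpropagation steps removed.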

Thank you so much!

This is an exceptional explanation, and I can't thank u more... u have to keep going, u enlighten many student on the planet! that's the best thing a human can do!

Thank you brother, very much appreciate it! 🔥

I'm curious, why is the first convolution using ReLU and then later convolutions using sigmoid? Edit: Also, when convolving over the previous convolution-max pooling output, we have two images. How are the convolutions from these two separate images combined? Is it just adding them together?

Hey Brandon! 1. The ReLU and Sigmoid are just serving as examples to showcase the different activation functions. This video is just a «quick» visualization from the longer in-depth version. 2. Not sure if I understood, but if you're referring to the filters, they are added. I go through the math behind this with visualizations in the in-depth video. I believe it should clarify your doubts! 😄
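The "they are added" part can be sketched in plain Python. This is an illustration under assumptions, not the code from the videos: the function names, the 3x3 inputs, and the 2x2 kernels are made up, stride is 1 with no padding, and the activation function is left out.

```python
def conv2d_single(image, kernel):
    """Slide one 2D kernel over one 2D image (stride 1, no padding)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def conv2d_multi(channels, kernels, bias=0.0):
    """One filter over several input channels: convolve each, then add them up."""
    per_channel = [conv2d_single(img, k) for img, k in zip(channels, kernels)]
    h, w = len(per_channel[0]), len(per_channel[0][0])
    return [
        [sum(fm[i][j] for fm in per_channel) + bias for j in range(w)]
        for i in range(h)
    ]

# Two hypothetical input images (e.g. the two outputs of a previous layer).
channel_a = [[1, 2, 0], [0, 1, 3], [2, 1, 1]]
channel_b = [[0, 1, 1], [1, 0, 2], [3, 1, 0]]
# One filter has one kernel slice per input channel.
kernel_a = [[1, 0], [0, 1]]
kernel_b = [[0, 1], [1, 0]]

feature_map = conv2d_multi([channel_a, channel_b], [kernel_a, kernel_b])
print(feature_map)  # [[4.0, 6.0], [4.0, 5.0]]
```

Each filter therefore produces a single output image no matter how many input images it sees, which is why the number of feature maps does not multiply from layer to layer.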

@far1din Will be checking that out, thanks!

the best channel❤

That was awesome explanation

What an explanation man 🫡

I racked my brain for hours and couldn't figure out why the features' maps aren't multiplying after each layer and this video just helped me realize they become channels of images , it helped me relax and I think I can go downstairs for dinner now.

Glad it helped! 😄

Exceptional explanation! I have several questions, but first I'd like to ask: is it OK to support you through the Thanks button, since I don't have a PayPal account? Thanks, warmest regards, Ruby

Ofc my friend! Feel free to shoot me a DM on X if you have any questions as well 💯

Sorry if this sounds silly, but what actually is an inflection point? Is it f'' or is there some other geometric intuition?

You’re correct. It is the point where the second derivative is equal to 0. There are many geometrical intuitions. For cubic functions, the inflection point is the center of rotational symmetry. This means that you can rotate a cubic function 180 degrees around the inflection point and still have the same plot. I actually cut this part out as it felt like a digression and I didn't want to prolong the video any more than necessary. Maybe I should have kept it in 😭😂

@far1din If you already made it, the better idea would have been to keep it... but btw, ty ❤

At 2:03 I have a doubt: you took the m×x cube, divided it into 3 parts, and then placed them on the other cube, but you only covered 3 sides and not all 6... so the volume will be 1/2 of t^3, no?

Sorry now I got it 😅 it was still somewhat subtle confusion..

Haha nice! You’ll see that it is a «cube» with side lengths = t once it starts spinning. 😄

great videos

❤❤❤ Liked and subscribed .

Watch the full video: ua-cam.com/video/JboZfxUjLSk/v-deo.html

Great video!! Just one question, why does the inflection point of a depressed cubic fall on x=0?

It is explained at around 06:09 in the video, but maybe not well enough, so I'll try again haha. The «x» value for the inflection point is found by setting f''(x) = 0. For a general cubic function f(x) = ax^3 + bx^2 + cx + d, the second derivative is f''(x) = 6ax + 2b. Setting f''(x) = 0 gives 0 = 6ax + 2b, which in turn gives x = -b/(3a). This means that the inflection point of any cubic function is at x = -b/(3a). Now, if we want the inflection point at x = 0, we get 0 = -b/(3a), which means b = 0. If we go back to our initial function f(x) and set b = 0, we get f(x) = ax^3 + 0*x^2 + cx + d. This eliminates the x^2 term, as we multiply it by zero, and leaves us with f(x) = ax^3 + cx + d, which is essentially a depressed cubic function. I hope this cleared up any doubt. Please let me know if there is anything else! 😄
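The same argument run in the other direction (moving the inflection point to zero removes the x^2 term) can be checked with a few lines of Python. This is a small sketch with a hypothetical function name and example coefficients: it expands f(y + h) for h = -b/(3a) by hand and returns the new coefficients.

```python
def shift_to_inflection(a, b, c, d):
    """Coefficients of f(y + h) with h = -b/(3a), ordered from y^3 to the constant."""
    h = -b / (3.0 * a)  # x-coordinate of the inflection point
    return (
        a,                                  # y^3
        3.0 * a * h + b,                    # y^2, which is zero for this h
        3.0 * a * h * h + 2.0 * b * h + c,  # y
        a * h**3 + b * h * h + c * h + d,   # constant
    )

# Hypothetical example: f(x) = 2x^3 + 6x^2 + 18x - 26, inflection point at x = -1.
coeffs = shift_to_inflection(2.0, 6.0, 18.0, -26.0)
print(coeffs)  # (2.0, 0.0, 12.0, -40.0)
```

The shifted function 2y^3 + 12y - 40 has no y^2 term, so it is a depressed cubic with its inflection point at y = 0.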

@far1din Ah I see, thank you, that explains it pretty well. Cheers on your future videos!

beautiful explanation❤

this is a very unique and underrated explanation!beautiful work thank you so much❤

Nice ! Would you do the same for degree 4?

Great suggestion! I’ll give it a try if I can find a compelling way to visualize both the problem and the solution. However, the extra dimension might make it challenging :/

@far1din You can add colour to visualise it, for example, or draw the projection in R³. There are many ways to represent a tesseract.

What are the criteria for setting filters?

I think this is a great explanation! My only thing would be that some of the equation transformations are hard to follow, since they're so rapid fire. Keep up the good work!

Thank you for the feedback. Rewatching the video now, I understand that the transformation might have been a bit too rapid. Will take that into consideration for the next videos! 😄

That intro was so well done! Still watching but just wanted to say that before I forget

nice work sir

This is gold. Watching this after reading Michael Nielsen makes the concept crystal clear

tks u very much for this video, but it's probably more helpful if you also add a max pooling layer.

What happens if I specify that conv layer 2 has only 2 dimensions? Will the same kernel be applied to both images, and the results then be added?

WWOWW! I need a video visualizing CNN from layer to layer like that, and I encountered ur channel. The best CNN visualization for me now. Tks u!

Keep it up and I really encourage you to make more content! Data science community needs more of such high quality contents!

Best video to understand what is going on under the hood of a CNN.

Great explanation!

Amazing work! Please continue to make more videos on other ML topics. I find your videos are really helpful to understand the concepts.

Finally someone who doesn't just say "it convoluted the image and poof one magic later it works"

Can't express my gratitude, albeit here I am. Everything is shown very detailed, explained accurately and understandably. Keep up the good work.

Thanks a lot!